this post was submitted on 28 May 2024

1062 points (96.9% liked)

Fuck AI

2086 readers

308 users here now

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 1 year ago


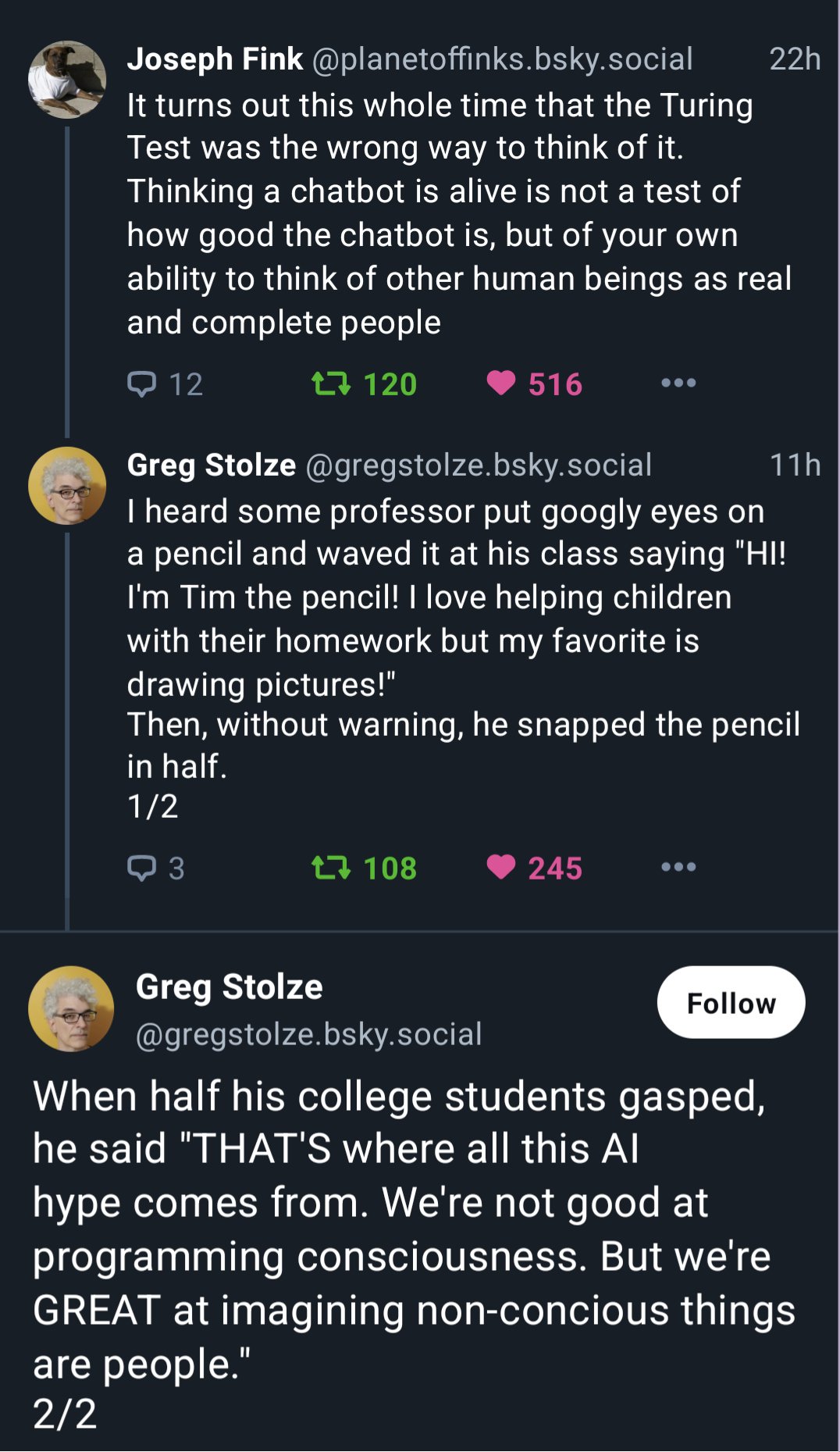

How would we even know if an AI is conscious? We can't even know that other humans are conscious; we haven't yet solved the hard problem of consciousness.

Does anybody else feel rather solipsistic or is it just me?

I doubt you feel that way since I'm the only person that really exists.

Jokes aside, when I was in my teens back in the 90s I felt that way about pretty much everyone that wasn't a good friend of mine. Person on the internet? Not a real person. Person at the store? Not a real person. Boss? Customer? Definitely not people.

I don't really know why it started, when it stopped, or why it stopped, but it's weird looking back on it.

Andrew Tate has convinced a ton of teenage boys to think the same, apparently. Kinda ironic.

Puberty is rough, for some people it’s your body going “mate, mate, mate” and not much else gets through for 4-5 years, or like 8 maybe.

I’m about the same age. (Xellennials or some shit like that, apparently.) And at the time, there was also a big movement in media and culture to sell more shit to people our age (we’d also been slammed with toy and cereal ads as kids in the 80s). MTV was switching to all reality bullshit and Clinton was boinking anything that moved. We were doomed to only think about ourselves.

The problem is that a bunch of them never outgrew it, or made it their “brand” like Tate and his ilk.

A Cicero a day and your solipsism goes away.

Rigour is important, and at the end of the day we don't really know anything. However this stuff is supposed to be practical; at a certain arbitrary point you need to say "nah, I'm certain enough of this statement being true that I can claim that it's true, thus I know it."

Descartes has entered the chat

Edo ergo caco. Caco ergo sum! [/shitty joke]

Serious now. Descartes was also trying to solve solipsism, but through a different method: he claims at least some sort of knowledge ("I doubt thus I think; I think thus I am"), and then tries to use it as a foundation for more knowledge.

What I'm doing is different. I'm conceding that even radical scepticism, a step further than solipsism, might actually be correct, and that true knowledge is unobtainable (solipsism still claims that you can know that you yourself exist). However, the conclusion that "we'll never know it" is pointless, even if potentially true, because it lacks any sort of practical consequence. I learned this from Cicero (it's how he handles, for example, the definition of what would be a "good man").

Note that this matter is actually relevant to this topic. We're dealing with black-box systems that some claim to be conscious; sure, they do it through insane troll logic, but the claim could be true, and we would have no way to know it. However, for practical purposes: they don't behave as conscious systems, so why would we treat them as such?

I'm either too high or not high enough, and there's only one way to find out

Try both.

I don't smoke, but I get you guys. Plenty of times I've had a blast discussing philosophy with people who were high.

Underrated joke

13 year old me after watching Vanilla Sky:

We don't even know what we mean when we say "humans are conscious".

Also, I have yet to see a rebuttal to "consciousness is just an emergent neurological phenomenon and/or a trick the brain plays on itself" that wasn't spiritual and/or kooky.

Look at the history of things we thought made humans human, until we learned they weren't unique. Bipedality. Speech. Various social behaviors. Tool-making. Each of those was, in its time, fiercely held up as "this separates us from the animals", and the belief even caused obvious biological observations to be dismissed. IMO "consciousness" is another of those, some quirk of our biology we desperately cling to as a defining factor of our assumed uniqueness.

To be clear, LLMs are not sentient or alive. They're just tools. But the discourse on consciousness is a distraction: if we are one day genuinely confronted with this moral issue, we will not find a clear binary between "conscious" and "not conscious". Even within the human race we clearly see a spectrum. When does a toddler become conscious? How much brain damage makes someone "not conscious"? There are no exact answers to be found.

I've defined what I mean by consciousness: a subjective experience, qualia. Not simply a reaction to an input, but something experiencing the input. That thing doing the experiencing can't be physical. And if it isn't, I don't see why it should be tied to humans specifically, and not, say, a rock. An AI could absolutely have it, since we have no idea how consciousness works, what can be conscious, or what it attaches itself to. And I also see no reason why the output needs to 'know' that it's conscious; a conscious LLM could see itself saying absolute nonsense without being able to affect its output to communicate that it's conscious.

I'd say that, in a sense, you answered your own question by asking a question.

ChatGPT has no curiosity. It doesn't ask about things unless it needs specific clarification. We know you're conscious because you can come up with novel questions that ChatGPT wouldn't ask spontaneously.

My brain came up with the question, that doesn't mean it has a consciousness attached, which is a subjective experience. I mean, I know I'm conscious, but you can't know that just because I asked a question.

I find it easier to believe that everything is conscious than it is to believe that some matter became conscious.

And beings like us are conscious on many levels, what we commonly call our "consciousness" is only one of them. We are not singular, we are walking communities.

It wasn't that it was a question, it was that it was a novel question. It's the creativity in the question itself, something I have yet to see any LLM achieve. As I said, all of the questions I have seen were about clarification ("Did you mean Anne Hathaway the actress or Anne Hathaway, the wife of William Shakespeare?"). They were not questions like yours, which require understanding things like philosophy as a general concept, something they do not appear to have; at best, they can regurgitate a definition of philosophy without showing any understanding.

Let's try to skip the philosophical mental masturbation, and focus on practical philosophical matters.

Consciousness can be a thousand things, but let's say that it's "knowledge of itself". As such, a conscious being must necessarily be able to hold knowledge.

In turn, knowledge boils down to a belief that is both justified and true.

LLMs show awful logical reasoning*, and their claims are about things that they cannot physically experience. Thus they are unable to justify beliefs. Thus they're unable to hold knowledge. Thus they don't have consciousness.

*Here's a simple practical example of that:

And get down to the actual masturbation! Am I right? Of course I am.

Shouldn't have called it "mental masturbation"... my bad.

By "mental masturbation" I mean rambling about philosophical matters that ultimately don't matter, such as dancing around definitions, sophistry, and the like.

Scientists cannot physically experience a black hole, or the surface of the sun, or the weak nuclear force in atoms. Does that mean they don't have knowledge about such things?

Seems a valid answer. It doesn't "know" who Jane Etta Pitt's son is. Just because X -> Y doesn't mean that, given Y, you know X. There could be an alternative path to get Y.

Also, "knowing self" is just another way of saying metacognition, something it can do to a limited extent.

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

That sounds like an AI that has no context window. Context windows are words thrown into the prompt after the user's prompt is done, to refine the response. The most basic is "feed the last n tokens of the questions and responses into the window". Since the last response talked about Jane Etta Pitt, the AI would then process it and return 'Brad Pitt' as an answer.

The more advanced versions have context memories (look up RAG vector databases) that store the definitions of a bunch of nouns. Instead of feeding in the previous conversation, the system sees the word "aglet" and injects the phrase "an aglet is the plastic thing at the end of a shoelace" into the context window.
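A rough sketch of the two mechanisms described above, assuming whitespace-separated words stand in for real model tokens and a plain keyword lookup stands in for a vector database. All names here (`GLOSSARY`, `last_n_tokens`, `retrieve_notes`, `build_model_input`) are hypothetical; a real RAG setup would embed text and retrieve by similarity, and the ordering of the assembled pieces is an arbitrary choice for illustration.

```python
import re

# Hypothetical glossary standing in for a RAG store. A real system would embed
# the text and retrieve by vector similarity; a keyword match keeps this self-contained.
GLOSSARY = {
    "aglet": "An aglet is the plastic thing at the end of a shoelace.",
}


def last_n_tokens(history: str, n: int) -> list[str]:
    """Basic context window: keep only the last n tokens of the conversation.

    Whitespace-separated words stand in for real model tokens (an assumption).
    """
    tokens = history.split()
    return tokens[-n:] if n > 0 else []


def retrieve_notes(prompt: str, glossary: dict[str, str]) -> list[str]:
    """Return the stored definition of every glossary term that appears in the prompt."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return [definition for term, definition in glossary.items() if term in words]


def build_model_input(history: str, prompt: str, n: int = 8) -> str:
    """Assemble what the model sees: retrieved notes, recent history, then the new prompt."""
    parts = retrieve_notes(prompt, GLOSSARY)
    window = last_n_tokens(history, n)
    if window:
        parts.append(" ".join(window))
    parts.append(prompt)
    return "\n".join(parts)


if __name__ == "__main__":
    history = "Q: Who is Jane Etta Pitt's son? A: Brad Pitt."
    # Same conversation: the tail of the history ends up in the model's input.
    print(build_model_input(history, "How long is an aglet?", n=6))
    # Separate conversation (n=0): nothing from the earlier exchange is carried over.
    print(build_model_input(history, "Who is her son?", n=0))
```

Setting `n=0` mimics starting a fresh conversation, which is the situation where no earlier answer can leak into the new prompt.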

I did this as two separate conversations precisely to avoid the "context" window. It shows that the LLM in question (ChatGPT 3.5, as provided by DDG) has the information necessary to correctly output the second answer, but lacks the reasoning to do so.

If I did this as a single conversation, it would only prove that it has a "context" window.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

Seems kind of disingenuous to say "the key to reasoning is memory", then set up a scenario where an AI has no memory to prove it can't reason.

You're anthropomorphising it as if it was something able to "forget" information, like humans do. It isn't - the info is either present or absent in the model, period.

But let us pretend that it is able to "forget" info. Even then, those two prompts were not sent at meaningfully "different times" of the LLM's "life" [SIC]; one was sent a few seconds after the other, in the same conversation.

And this test can be repeated over and over and over if you want, in different prompt orders, to show that your implicit claim is bollocks. The failure to answer the second question is not a matter of the model "forgetting" things, but of being unable to handle the information to reach a logical conclusion.

I'll link this paper again, because it shows that this has already been studied.

The one being at least disingenuous here is you, not me. More specifically:

You just said they were different conversations to avoid the context window.

|You’re anthropomorphising it

I was referring to you and your memory in that statement comparing you to an it. Are you not something to be anthropomorphed?

|But let us pretend that it is able to “forget” info.

That is literally all computers do all day. Read info. Write info. Overwrite info. No need to pretend a computer can do something computers have been doing for the last 50 years.

|Those two prompts were not sent on meaningfully “different times”

If you started up two Minecraft games with different seeds, but "at the exact same time", you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance. I dare say it is close to anthropomorphising AI to think it would remember something from a few seconds ago because that is how humans work.

|And this test can be repeated over and over and over if you want

|I’ll link again this paper because it shows that this was already studied.

You linked to a year-old paper showing that it already gets the A->B, B->A thing right 30% of the time. Technology marches on; this was just what I was able to find with a simple Google search.

|In no moment I said or even implied that the key to reasoning is memory

|LLMs show awful logical reasoning ... Thus they’re unable to hold knowledge.

Oh, my bad. Got A->B B->A backwards. You said since they can't reason, they have no memory.