this post was submitted on 07 Jul 2024

1248 points (95.0% liked)

Fuck AI

1646 readers

116 users here now

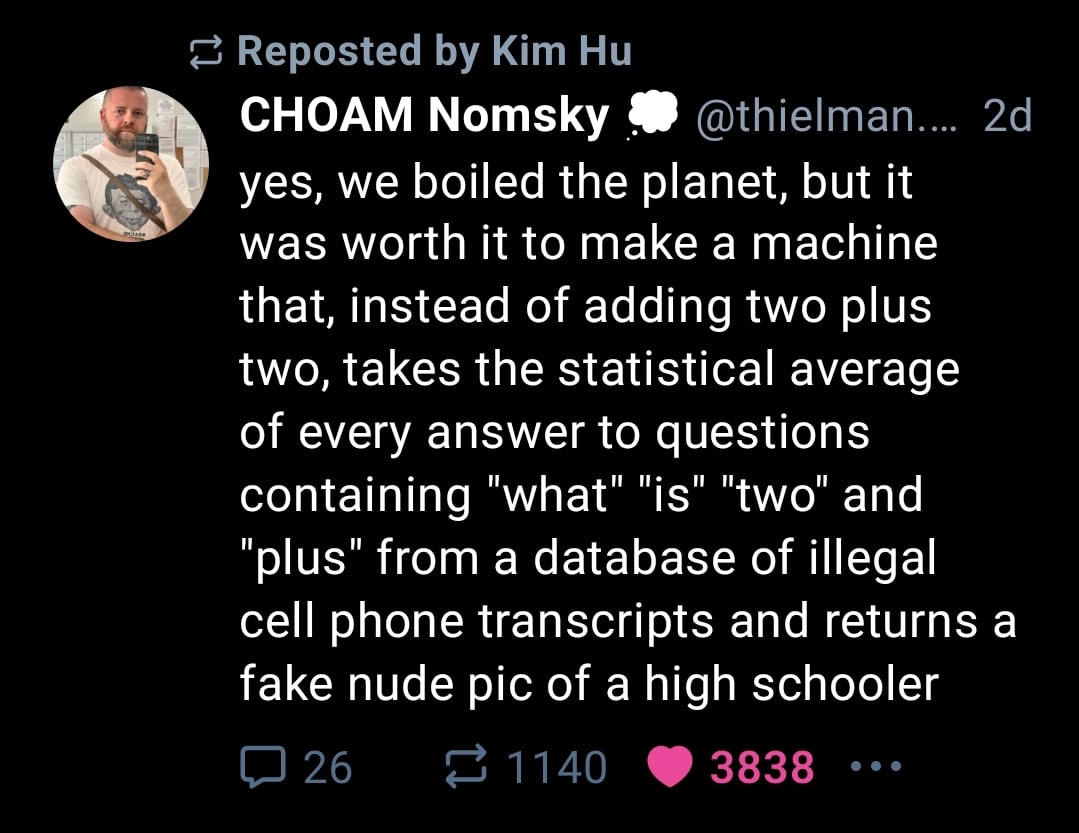

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 10 months ago


Sure, there are costs that are too high for anything.

This is the part where it breaks down though. There's nothing inherently immoral about AI. It's not the concept of AI you have problems with. It's the implementation. I hate a lot of the implementation, too. Shoehorning an AI into everything, using AI to justify a reduction in labor, that all sucks. The tool itself, though? Pretty fuckin awesome.

Are we comparing this to cancer research still? If so that's a bit of a WILD statement. It's pretty close to the COVID vaccine denial mentality - because it was made using something I don't like/fully understand, it must be bad.

Ok, let's go back to drugs, then. If we were making your organic, fair trade heroin, but called it beroin so that we're not piggybacking off the heroin market, we're good? No, that doesn't make sense. Heroin will fuck up someone's life regardless of what you call it, how it was produced, etc. There's (virtually) no legitimate, useful application of heroin, and probably not one that would ever get its production broadly okayed.

Conversely, you've already agreed that there are ethical uses and applications of AI. It doesn't matter what the name is; it's the same technology. AI has become the term for this technology, just like heroin has become the term for that drug, and whatever else you want to call it, everyone already knows what you mean. Its uses are still the same. Its impact is still the same.

So yeah, if you just have a problem with, say, cancer researchers using the term AI, and would rather they use, idk, AGI or any of the other alternative names, I think you're missing the point.

I'm not saying they shouldn't do research at all, just that they should take steps to separate themselves from the awful practices of the big players right now.

We should be able to talk about advances in cancer research without having to have a discussion about how AI is going overall, including the shitty actors.

And, to be fair, most of the good projects you are defending do very publicly differentiate themselves and explain how they are more responsible. All I'm saying is that this is a good thing. Companies should be scrambling to distance themselves from OpenAI, Copilot, and whatever else the big tech companies have created.