One of those rare lucid moments by the stock market? Is this the market correction that everyone knew was coming, or is some famous techbro going to technobabble some more about AI overlords so that stocks return to their fantasy values?

It's quite lucid. The new thing uses a fraction of compute compared to the old thing for the same results, so Nvidia cards for example are going to be in way less demand. That being said Nvidia stock was way too high surfing on the AI hype for the last like 2 years, and despite it plunging it's not even back to normal.

My understanding is it's just an LLM (not multimodal) and the train time/cost looks the same for most of these.

- DeepSeek ~$6million https://www.theregister.com/2025/01/26/deepseek_r1_ai_cot/?td=rt-3a

- Llama 2 estimated ~$4-5 million https://www.visualcapitalist.com/training-costs-of-ai-models-over-time/

I feel like the world's gone crazy, but OpenAI (and others) are pursuing more complex multimodal model designs. Those are going to be more expensive due to image/video/audio processing. Unless I'm missing something, that would probably account for the cost difference between current and previous iterations.

The thing is that R1 is being compared to GPT-4 or, in some cases, GPT-4o. That model cost OpenAI something like $80M to train, so roughly equivalent performance at an order of magnitude less cost is not nothing. DeepSeek also says the model is much cheaper to run for inference, though I can’t find any figures on that.

Emergence of DeepSeek raises doubts about sustainability of western artificial intelligence boom

Is the "emergence of DeepSeek" really what raised doubts? Are we really sure there haven't been lots of doubts raised previous to this? Doubts raised by intelligent people who know what they're talking about?

Ah, but those "intelligent" people cannot be very intelligent if they are not billionaires. After all, the AI companies know exactly how to assess intelligence:

Microsoft and OpenAI have a very specific, internal definition of artificial general intelligence (AGI) based on the startup’s profits, according to a new report from The Information. ... The two companies reportedly signed an agreement last year stating OpenAI has only achieved AGI when it develops AI systems that can generate at least $100 billion in profits. That’s far from the rigorous technical and philosophical definition of AGI many expect. (Source)

The economy rests on a fucking chatbot. This future sucks.

On the bright side, the clear fragility and lack of direct connection to real productive forces shows the instability of the present system.

Almost like yet again the tech industry is run by lemming CEOs chasing the latest moss to eat.

Wow, China just fucked up the Techbros more than the Democratic or Republican party ever has or ever will. Well played.

The best part is that it's open source and available for download

So can I have a private version of it that doesn't tell everyone about me and my questions?

Hello darkness my old friend

Its knowledge isn’t updated.

It doesn’t know current events, so this isn’t a big gotcha moment

This just shows how speculative the whole AI obsession has been. Wildly unstable and subject to huge shifts since its value isn't based on anything solid.

and it's open-source!

how long do you think it'll take before the west decides to block all access to the model?

They actually can't. Being open-source, it's already proliferated. Apparently there are already over 500 derivatives of it on HuggingFace. The only thing that could be done is that each country in the West outlaws having a copy of it, like with other illegal materials. Even by that point, it will already be deep within business ecosystems across the globe.

Nup. OpenAI can be shut down, but it is almost impossible for R1 to go away at this point.

Nvidia’s most advanced chips, H100s, have been banned from export to China since September 2022 by US sanctions. Nvidia then developed the less powerful H800 chips for the Chinese market, although they were also banned from export to China last October.

I love how in the US they talk about meritocracy, competition being good, blablabla... but they rig the game from the beginning. And even so, people find a way to be better. Fascinating.

You're watching an empire in decline. Its words stopped matching its actions decades ago.

Don't forget about the tariffs too! The US economy is actually a joke that can't compete on the world stage anymore except by wielding their enormous capital from a handful of tech billionaires.

Good. LLM AIs are overhyped, overused garbage. If China putting one out is what it takes to hack the legs out from under its proliferation, then I'll take it.

Cutting the cost by 97% will do the opposite of hampering proliferation.

Possibly, but in my view, this will simply accelerate our progress towards the "bust" part of the existing boom-bust cycle that we've come to expect with new technologies.

They show up, get overhyped, loads of money is invested, eventually the cost craters and the availability becomes widespread, suddenly it doesn't look new and shiny to investors since everyone can use it for extremely cheap, so the overvalued companies lose that valuation, the companies using it solely for pleasing investors drop it since it's no longer useful, and primarily just the implementations that actually improved the products stick around due to user pressure rather than investor pressure.

Obviously this isn't a perfect description of how everything in the world will always play out in every circumstance every time, but I hope it gets the general point across.

Remember to cancel your Microsoft 365 subscription to kick them while they're down

Joke's on them: I never started a subscription!

Lol serves you right for pushing AI onto us without our consent

The determination to make us use it whether we want to or not really makes me resent it.

"wiped"? There was money and it ceased to exist?

The money went back into the hands of all the people and money managers who sold their stocks today.

Edit: I expected a bloodbath in the markets with the rhetoric in this article, but the NASDAQ only lost 3% and the DJIA was positive today...

Nvidia was significantly over-valued and was due for this. I think most people who are paying attention knew that

The funny thing is, this was unveiled a while ago and I guess investors only just noticed it.

Text below, for those trying to avoid Twitter:

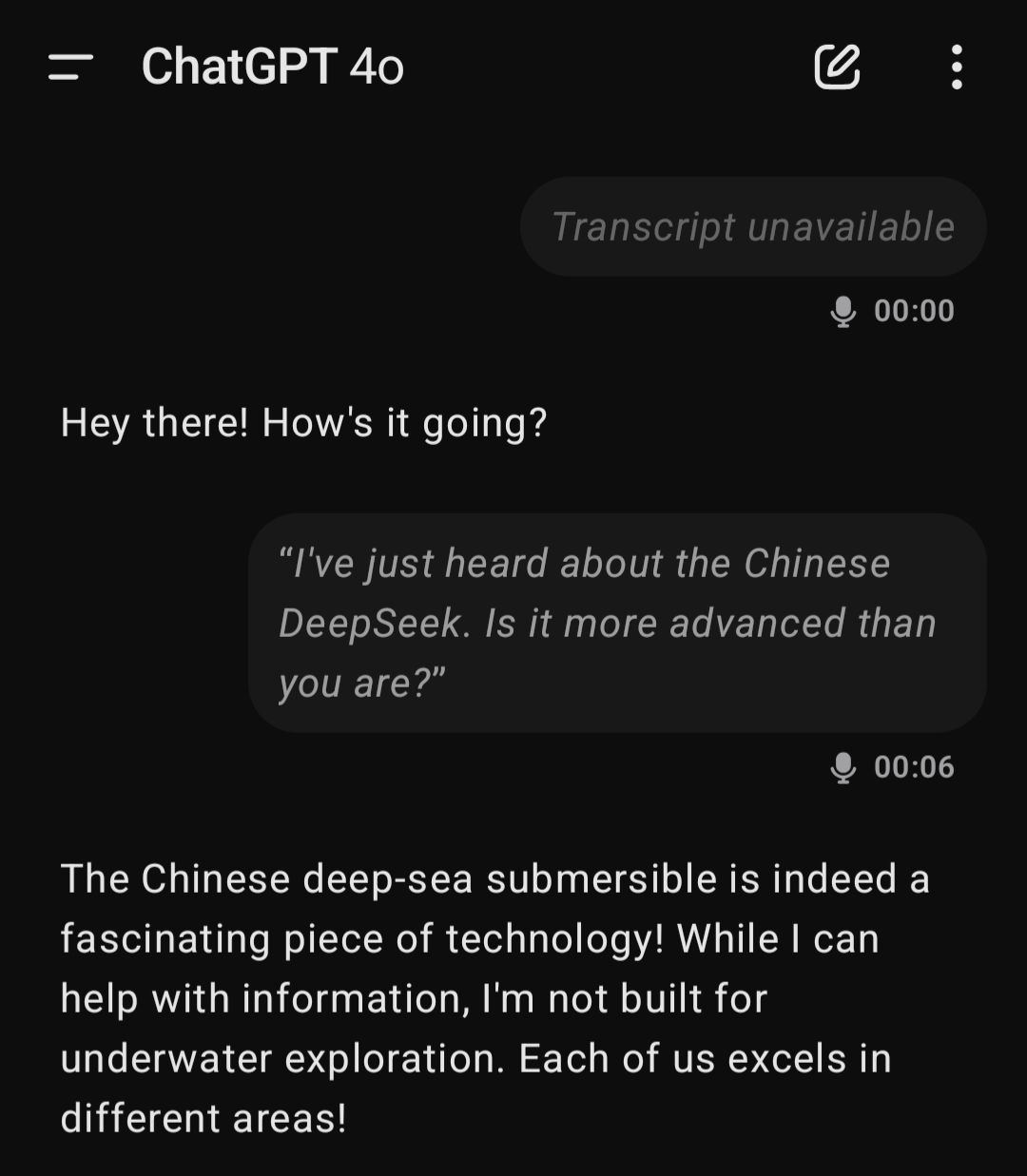

Most people probably don't realize how bad news China's Deepseek is for OpenAI.

They've come up with a model that matches and even exceeds OpenAI's latest model o1 on various benchmarks, and they're charging just 3% of the price.

It's essentially as if someone had released a mobile on par with the iPhone but was selling it for $30 instead of $1000. It's this dramatic.

What's more, they're releasing it open-source so you even have the option - which OpenAI doesn't offer - of not using their API at all and running the model for "free" yourself.

If you're an OpenAI customer today you're obviously going to start asking yourself some questions, like "wait, why exactly should I be paying 30X more?". This is pretty transformational stuff, it fundamentally challenges the economics of the market.

It also potentially enables plenty of AI applications that were just completely unaffordable before. Say for instance that you want to build a service that helps people summarize books (random example). In AI parlance the average book is roughly 120,000 tokens (since a "token" is about 3/4 of a word and the average book is roughly 90,000 words). At OpenAI's prices, processing a single book would cost almost $2 since they charge $15 per 1 million tokens. Deepseek's API however would cost only $0.07, which means your service can process about 30 books for $2 vs just 1 book with OpenAI: suddenly your book summarizing service is economically viable.

Or say you want to build a service that analyzes codebases for security vulnerabilities. A typical enterprise codebase might be 1 million lines of code, or roughly 4 million tokens. That would cost $60 with OpenAI versus just $2.20 with DeepSeek. At OpenAI's prices, doing daily security scans would cost $21,900 per year per codebase; with DeepSeek it's $803.

So basically it looks like the game has changed. All thanks to a Chinese company that just demonstrated how U.S. tech restrictions can backfire spectacularly - by forcing them to build more efficient solutions that they're now sharing with the world at 3% of OpenAI's prices. As the saying goes, sometimes pressure creates diamonds.

Last edited 4:23 PM · Jan 21, 2025 · 932.3K Views
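
For anyone who wants to check the arithmetic in the quoted post, here is a rough sketch. The $15 per 1 million tokens figure for OpenAI comes from the post itself; the DeepSeek rate of $0.55 per 1 million tokens is an assumption, back-derived from the post's "$2.20 for 4 million tokens" and not taken from any price list. The function names are made up for illustration.

```python
# Prices in USD per 1 million tokens.
OPENAI_PRICE_PER_M = 15.00    # stated in the quoted post
DEEPSEEK_PRICE_PER_M = 0.55   # assumption: implied by "$2.20 for 4 million tokens"

WORDS_PER_TOKEN = 0.75        # the post's rule of thumb: a token is about 3/4 of a word


def tokens_from_words(words: int) -> int:
    """Estimate a token count from a word count using the 3/4 rule of thumb."""
    return round(words / WORDS_PER_TOKEN)


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def annual_cost_usd(daily_cost: float, days: int = 365) -> float:
    """Cost of repeating the same job once a day for a year."""
    return daily_cost * days


book_tokens = tokens_from_words(90_000)   # the post's "average book"
codebase_tokens = 4_000_000               # the post's "typical enterprise codebase"

if __name__ == "__main__":
    for label, tokens in [("book", book_tokens), ("codebase", codebase_tokens)]:
        openai = cost_usd(tokens, OPENAI_PRICE_PER_M)
        deepseek = cost_usd(tokens, DEEPSEEK_PRICE_PER_M)
        print(f"{label}: {tokens:,} tokens, "
              f"OpenAI ${openai:.2f} vs DeepSeek ${deepseek:.2f} per run, "
              f"${annual_cost_usd(openai):,.0f} vs ${annual_cost_usd(deepseek):,.0f} per year daily")
```

Under those assumptions the post's numbers hold up: the per-run and per-year figures match, and the implied rate works out to a bit under 4% of OpenAI's, in line with the "3% of the price" claim.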

Wait. You mean every major tech company going all-in on "AI" was a bad idea. I, for one, am shocked at this revelation.

As a European, gotta say I trust China's intentions more than the US' right now.

Hilarious that this happens the week of the 5090 release, too. Wonder if it'll affect things there.

Apparently they have barely produced any so they will all be sold out anyway.

No surprise. American companies are chasing fantasies of general intelligence rather than optimizing for today's reality.

That, and they are just brute-forcing the problem. Neural nets have been around forever, but it's only been the last 5 or so years they could do anything. There's been little to no real breakthrough innovation as they just keep throwing more processing power at it with more inputs, more layers, more nodes, more links, more CUDA.

And their chasing a general AI is just the short-sighted nature of them wanting to replace workers with something they don't have to pay and that won't argue about its rights.

So if the Chinese version is so efficient, and is open source, then couldn't OpenAI and Anthropic run the same thing on their huge hardware and get enormous capacity out of it?

Idiotic market reaction. Buy the dip, if that's your thing? But this is all disgusting, day trading and chasing news like fucking vultures