Oh look! Socialism for the rich!

People Twitter

People tweeting stuff. We allow tweets from anyone.

See - this is why I don't give a shit about copyright.

It doesn't protect creators - it just enriches rent-seeking corporate fuckwads.

Daily reminder that copyright isn’t the only conceivable weapon we can wield against AI.

Anticompetitive business practices, labor law, privacy, likeness rights. There are plenty of angles to attack from.

Most importantly, we need strong unions. However we model AI regulation, we will still want some ability to grant training rights. But it can’t be a boilerplate part of an employment/contracting agreement. That’s the kind of thing unions are made to handle.

Look, I'm not against AI and automation in general. I'm not against losing my job either. We should use these as tools to overcome scarcity, for a better future for all of humanity. I wouldn't mind losing my job if I could use my time to do things I love. But that won't happen as long as greedy-ass companies use it against us.

We conquered our resource scarcity problem years ago. Artificial scarcity still exists in society because we haven't conquered our greed problem.

Both of you argue from the flawed assumption that AI actually has the potential that marketing people trying to bullshit you say it has. It doesn't.

AI has its uses. Not the ones people cream their pants about, but to say it's useless is just wrong. People tend to misunderstand what AI, ML, and whatever else actually are, just like everyone was celebrating the cloud ten or twenty years ago without knowing what the cloud was.

It has its uses but none of them include anything even close to replacing entire jobs or even significant portions of jobs.

I disagree, but I might have different experiences.

It doesn't matter what it's good for. What matters is what the MBA parasites think it's good for.

They will impulsively replace jobs, and then when it fails, try to rehire at lower wages.

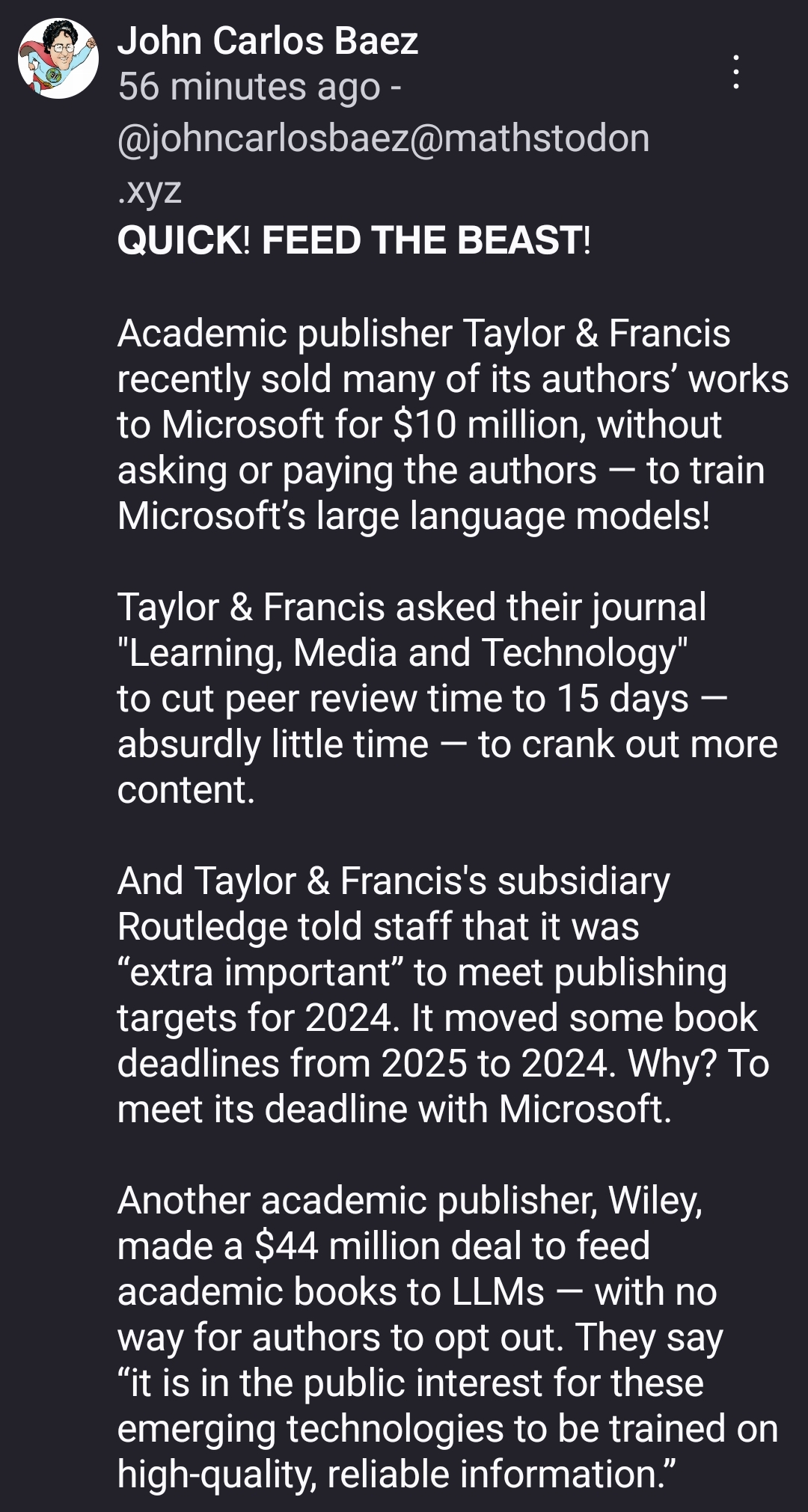

“it is in the public interest for these emerging technologies to be trained on high-quality, reliable information.”

Oh, well if you say so. Oh wait, no one has a say anyway because corporations ru(i)n everything.

"We need to train LLMs with your data in order to make you obsolete."

If that's what it takes to get rid of CEOs then I'm on board.

Seriously though, that's the best application of AI. CEO is a simple logic based position, or so they tell us, that happens to consume more financial resources than many dozen lower level employees. If anyone is on the chopping block it should be them, in both senses of the phrase.

For CEOs, it might even bring down the percentage of nonsense they say, even with the high rate of nonsense AI produces.

It's nice to see them lowering the bar for "high-quality" at the same time. Really makes it seem like they mean it. /s

"it's in the public interest" so all these articles will be freely available to the public. Right?... Riiight?!

"How is nobody talking about this?"

The average person has science literacy at or below that of a fifth grader, and ranks academic study below a story about a wish-granting sky fairy who made Earth in his basement as a hobby with zero lighting (obviously, as light hadn't been invented at that point).

A musician friend of mine, when asked "Why are there no Mozarts or Beethovens any more?" replies "We went through your schools."

It's for reasons like these that I think it's foolhardy to advocate for strengthening copyright when it comes to AI.

The windfall will not be shared, the data is already out of the hands of individuals, and any "pro-artist" law will only help kill the competition for companies like Google, Sony Music, Disney and Microsoft.

These companies will happily pay huge sums to lock everyone else out of the scene. They are already splitting it among themselves in anticipation of a green light for regulatory capture.

Copyright is not supposed to be protecting individuals' work from corporations, but the other way around.

I think this happens because the publisher owns the content and owes royalties to authors only under certain conditions (which may or may not be met in this situation). The reason I think this is that I had a PhD buddy who published a book (nonfiction history), and we all got a hearty chuckle at the part of the contract that said the publisher got the theme park rights. But what if there were other provisions in the contract that would allow for this situation without compensating the authors? Anywho, this is a good reminder to read the fine print on anything you sign.

I’d guess books are different, but researchers don’t get paid anything for publishing in academic journals.

Oh yeah, good point.

"it is in the public interest for these emerging technologies to be trained on high quality information"

Ok but we have to pay hundreds of dollars for a single book in college because....?

If it is in the public interest then all of that information should be open sourced.

Guess it's time to poison the data

A couple dozen zero-width unicode characters between every letter, white text on white background filled with nonsense, any other ideas?
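For anyone curious what the zero-width idea would look like in practice, here is a rough Python sketch. The function names and the choice of U+200B (ZERO WIDTH SPACE) are my own assumptions, not anything from the tweet or the publisher. It only shows the padding itself, and it also shows that a scraper can undo it with a single replace, so whether this would actually hurt any training pipeline is an open question.

```python
# Sketch only: pad text with zero-width characters between letters.
# ZERO_WIDTH_SPACE and both function names are illustrative assumptions.
ZERO_WIDTH_SPACE = "\u200b"


def pad_with_zero_width(text: str, count: int = 1, filler: str = ZERO_WIDTH_SPACE) -> str:
    """Insert `count` copies of `filler` between every pair of adjacent characters."""
    return (filler * count).join(text)


def strip_zero_width(text: str, filler: str = ZERO_WIDTH_SPACE) -> str:
    """Undo the padding; this is all a scraper would need to do."""
    return text.replace(filler, "")


original = "peer review"
padded = pad_with_zero_width(original, count=3)

# The padded string looks the same when rendered but is much longer.
print(len(original), len(padded))
print(strip_zero_width(padded) == original)
```

The padding also breaks copy-paste, search, and screen readers for human readers, which is a real cost to weigh against an effect that is trivially reversible.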

Hilariously, the data is poisoning itself: as the criteria for decent review dwindle, more non-reproducible crap science gets published. Or it's straight-up fake. Journals don't care, and correcting the scientific record always takes months or years. Fuck the publishers.

How does cutting peer review time help get more content? The throughput will still be the same regardless of whether it takes 15 days or a year to complete a peer review.

Isn't that because the peers also write stuff? So it's not just a fixed delay on one-by-one papers, but a delay that goes between peers' periods of working on papers too.
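To put rough numbers on the two views, here is a toy Python model. Everything in it is an assumption for illustration (the function names, the 10-day submission interval, the 90 writing days), and the second function is only one possible reading of the reply above: that an author's next paper effectively waits on the review cycle. In the first model, review time is a pure delay and yearly output does not change. In the second, review time sits inside each author's cycle, so shortening it raises output.

```python
# Toy model only; all numbers and names are illustrative assumptions.

def open_pipeline_published(submit_every_days: int, review_days: int,
                            window_start: int, window_days: int = 365) -> int:
    """Count papers published inside a window when submissions arrive at a
    fixed pace and review only delays each paper by `review_days`."""
    count = 0
    k = 0
    # Paper k is submitted on day k * submit_every_days
    # and published review_days later.
    while k * submit_every_days + review_days < window_start + window_days:
        if k * submit_every_days + review_days >= window_start:
            count += 1
        k += 1
    return count


def closed_loop_papers_per_year(writing_days: float, review_days: float) -> float:
    """Papers per author per year if each cycle is writing time plus review time."""
    return 365 / (writing_days + review_days)


# Pure delay: same yearly output with a 15-day or a 365-day review.
print(open_pipeline_published(10, 15, window_start=1000))
print(open_pipeline_published(10, 365, window_start=1000))

# Review inside the cycle: a shorter review means more papers per author.
print(closed_loop_papers_per_year(90, 15))
print(closed_loop_papers_per_year(90, 365))
```

Which model is closer to reality depends on how much authors actually block on reviews, which nothing in this thread settles.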

I believe that most of today's writers would advise you to run your publisher's contract past a competent lawyer before you sign.

Maybe academic authors are not as aware that publishing is full of ravening wolves that have been pulling these tricks since Dickens handed his Pickwick Papers to Punch. Poor babes in the woods.

Reminds me of the song "Feed the Machine" by Poor Man's Poison.

Good way to make authors negotiate differently with you in the future. I doubt they’ll just sign on to whatever they did previously without some guardrails around this.

The shitty chatbots do need high-quality data. This is much better than scraping Reddit, since a glorified auto-complete cannot know that eating rocks is bad for you. You can't retroactively complain after having signed away your rights to something. But you can change things moving forward. If you are incorruptible and don't care about money, start an organization with those values and convince the researchers to join you. Good luck (seriously, I hope you succeed).

Thanks for sharing this with us!

Terms of Service and contracts...

It reminded me of this:

"Disney says man can't sue over wife's death because he agreed to Disney+ terms of service" [https://www.nbcnews.com/news/us-news/disney-says-man-cant-sue-wifes-death-agreed-disney-terms-service-rcna166594]

It's in the public interest for academic publishers to be wiped off the face of the planet.