US chip-maker Nvidia led a rout in tech stocks Monday after the emergence of a low-cost Chinese generative AI model that could threaten US dominance in the fast-growing industry.

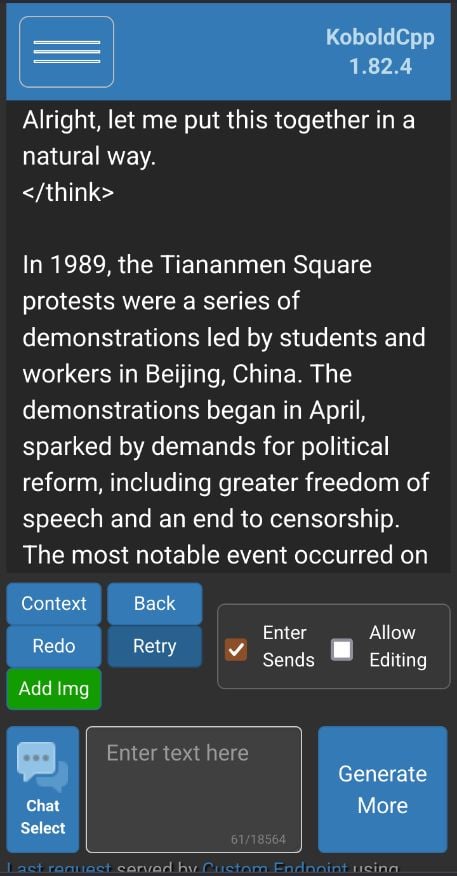

The chatbot developed by DeepSeek, a startup based in the eastern Chinese city of Hangzhou, has apparently shown the ability to match the capabilities of US AI pace-setters at a fraction of the investment made by American companies.

Shares in Nvidia, whose semiconductors power the AI industry, fell more than 15 percent in midday deals on Wall Street, erasing more than $500 billion of its market value.

The tech-rich Nasdaq index fell more than three percent.

AI players Microsoft and Google parent Alphabet were firmly in the red while Meta bucked the trend to trade in the green.

DeepSeek, whose chatbot became the top-rated free application on Apple's US App Store, said it spent only $5.6 million developing its model -- peanuts when compared with the billions US tech giants have poured into AI.

US "tech dominance is being challenged by China," said Kathleen Brooks, research director at trading platform XTB.

"The focus is now on whether China can do it better, quicker and more cost effectively than the US, and if they could win the AI race," she said.

US venture capitalist Marc Andreessen has described DeepSeek's emergence as a "Sputnik moment" -- when the Soviet Union shocked Washington with its 1957 launch of a satellite into orbit.

As DeepSeek rattled markets, the startup on Monday said it was limiting the registration of new users due to "large-scale malicious attacks" on its services.

Meta and Microsoft are among the tech giants scheduled to report earnings later this week, offering an opportunity to comment on the emergence of the Chinese company.

Shares in another US chip-maker, Broadcom, fell 16 percent while Dutch firm ASML, which makes the machines used to build semiconductors, saw its stock tumble 6.7 percent.

"Investors have been forced to reconsider the outlook for capital expenditure and valuations given the threat of discount Chinese AI models," said David Morrison, senior market analyst at Trade Nation.

"These appear to be as good, if not better, than US versions."

Wall Street's broad-based S&P 500 index shed 1.7 percent while the Dow was flat at midday.

In Europe, the Frankfurt and Paris stock exchanges closed in the red while London finished flat.

Asian stock markets mostly slid.

Just last week, following his inauguration, US President Donald Trump announced a $500 billion venture to build infrastructure for AI in the United States, led by Japanese giant SoftBank and ChatGPT-maker OpenAI.

SoftBank tumbled more than eight percent in Tokyo on Monday while Japanese semiconductor firm Advantest was also down more than eight percent and Tokyo Electron off almost five percent.