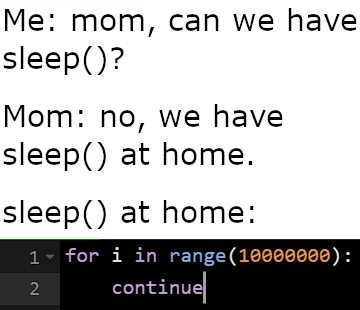

Ah yes. The speedup-loop.

https://thedailywtf.com/articles/The-Speedup-Loop

This is brilliant.

I think some compilers will just drop that in the optimization step.

Real pain in the ass when you're in embedded and your carefully placed NOPs get stripped

`asm("nop");`
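For anyone wondering how to keep a delay loop alive through the optimizer, here is a minimal sketch in C. The function name `busy_wait` is made up for illustration. It assumes only that the compiler honors `volatile`, which forces every read and write of the counter to actually happen, so the loop cannot be removed as dead code.

```c
#include <stdint.h>

/* Hypothetical helper: spin for `iterations` loop passes.
   The counter is volatile, so the compiler must perform every
   read and write and cannot delete the loop as dead code. */
static uint32_t busy_wait(uint32_t iterations)
{
    volatile uint32_t i;
    for (i = 0; i < iterations; i++) {
        /* Intentionally empty. On GCC or Clang you could put
           __asm__ volatile ("nop"); here (a compiler extension). */
    }
    return i; /* final counter value, equal to `iterations` */
}
```

Calling `busy_wait(1000)` runs the loop 1000 times and returns 1000. How long that takes depends entirely on the CPU and the compiler, which is the whole joke.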

Homer: "oh yeah speed ~~holes~~ sleep"

Sleep holes

Tell the CPU to wait for you?

Na, keep the CPU busy with useless crap till you need it.

Fuck those other processes. I want to hear that fan.

I paid good money for my fan, I want to know it's working!

Have you considered a career in middle management

On microcontrollers that might be a valid approach.

I've written these cycle-perfect sleep loops before.

It gets really complicated if you want to account for time spent in interrupt handlers.

Thankfully I didn't need high precision realtime. I just needed to wait a few seconds for serial comm.

But then I gotta buy a space heater too...

Microcontrollers run 100% of the time even while sleeping.

Nah, some MCUs have low power modes.

ESP32 has 5 of them, from disabling fancy features, throttling the clock, even delegating to an ultra low power coprocessor, or just going to sleep until a pin wakes it up again. It can go from 240mA to 150uA and still process things, or sleep for only 5uA.

Nah, Sleeping != Low power mode. The now obsolete ATmega328 has a low power mode.

This should be the new isEven()/isOdd(). Calculate the speed of the CPU and use that to determine how long it might take to achieve a 'sleep' of a required time.
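A rough sketch of what that could look like in C, purely for fun. All function names here (`spin`, `calibrate_iterations_per_second`, `calibrated_sleep`) are invented for this example. It assumes the standard `clock()` function has enough resolution to time the probe run, and it ignores interrupts, frequency scaling, and other processes, so the result is an estimate at best.

```c
#include <stdint.h>
#include <time.h>

/* Burn CPU for `iterations` loop passes; volatile keeps the loop alive. */
static void spin(uint64_t iterations)
{
    volatile uint64_t i;
    for (i = 0; i < iterations; i++) {
        /* intentionally empty */
    }
}

/* Estimate how many loop passes this machine does per second by
   timing a probe run of `probe` iterations with clock(). */
static double calibrate_iterations_per_second(uint64_t probe)
{
    clock_t start = clock();
    spin(probe);
    clock_t end = clock();

    double seconds = (double)(end - start) / CLOCKS_PER_SEC;
    if (seconds <= 0.0) {
        return 0.0; /* probe too short to measure */
    }
    return (double)probe / seconds;
}

/* "Sleep" by spinning for roughly `seconds`, using the calibrated rate. */
static void calibrated_sleep(double seconds, double iterations_per_second)
{
    if (seconds <= 0.0 || iterations_per_second <= 0.0) {
        return; /* nothing sensible to do */
    }
    spin((uint64_t)(seconds * iterations_per_second));
}
```

Usage would be something like `double rate = calibrate_iterations_per_second(50000000ULL);` followed by `calibrated_sleep(2.0, rate);`. The fan gets its workout either way.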

I took an embedded hardware class where specifically we were required to manually calculate our sleeps or use interrupts and timers rather than using a library function to do it for us.

Javascript enters chat:

`await new Promise(r => setTimeout(r, 2000));`

Which is somehow even worse.

As someone who likes to use the CPU, I don't think it's worse.

I mean, it’s certainly better than pre-2015.

I actually remember the teacher having us do this in high school. I tried it again a few years later and it didn't really work anymore.

In my first programming lesson, we were taught that a 1 second sleep was for i = 1 to 1000 😀. Computers were not that fast back then...

I mean maybe in an early interpreted language like BASIC… even the Intel 8086 could count to 1000 in a fraction of a second

This was in 1985, on an ABC80, a Swedish computer with a 3 MHz CPU. So, in theory it would be much faster, but I assume there were many performance losses (slow BASIC interpreter and things like that), so that for loop got close enough to a second for us to use.

You gotta measure the latency of the first loop.

I can relate. We have breaks at work too.

I just measured it, and this takes 0.17 seconds. And it's really reliable, I added another zero to that number and it was 1.7 seconds

Sudo sleep