Honestly, chapter 3 was way worse for me at first. I hate those red dustbunny things, and I swear they made the timing specifically so that I would constantly run into them.

Zangoose

Decentralized/OSS platforms >>> Multiple competing centralized platforms >>> One single centralized platform

Bluesky and Threads are both bad, but having more options than Twitter/X is still a step in the right direction, especially given the direction Musk is taking it in. As much as I like the fediverse (I won't be using either Threads or Bluesky anytime soon), it still has a lot of problems surrounding ease of use. Lemmy, Mastodon, Misskey, etc. would benefit a lot from improving the signup process so that the average user isn't overwhelmed by picking an instance and understanding how federation works.

I believe in 2a and 5b Celeste supremacy. Aside from having the best soundtracks, they're also the levels I like the most.

You don't use keyboard shortcuts because you have ADHD and can't remember them

I use keyboard shortcuts because I have ADHD and would never be able to stay focused on anything without them

We are not the same

It got revived also! They're back on F-Droid as well. Not sure about it being the same developer as this though, since I don't use Friendica.

edit: here's the new GitHub link: https://github.com/LiveFastEatTrashRaccoon/RaccoonForLemmy

I'm not trying to say LLMs haven't gotten better on a technical level, nor am I trying to say there should have been AGI by now. I'm trying to say that from a user perspective, ChatGPT, Google Gemini, etc. are about as useful as they were when they came out (i.e. not very). Context size might have changed, but what does that actually mean for a user? ChatGPT writing is still obviously identifiable and immediately discredits my view of someone when I see it. Same with AI-generated images. From experience, ChatGPT, Gemini, and all the others still hallucinate points, which makes them near-useless for learning new topics since you can't be sure what is real and what's made up.

Another thing I take issue with is open source models that are driven by VCs anyway. A model of an LLM might be open source, but is the LLM actually open source? IMO this is one of those things where the definitions haven't caught up to actual usage. A set of numerical weights achieved by training on millions of pieces of involuntarily taken data based on retroactively modified terms of service doesn't seem open source to me, even if the model itself is. And AI companies have openly admitted that they would never be able to make what they have if they had to ask for permission. When you say that "open source" LLMs have caught up, is that true, or are these the LLM-equivalent of uploading a compiled binary to GitHub and then calling that open source?

ChatGPT still loses OpenAI hundreds of thousands of dollars per day. The only way for a user to be profitable to them is if they pay for the paid tier and don't use it. The service was always venture capital hype to begin with. The same applies to Copilot and Gemini, and probably to companies like Perplexity as well.

My issue with LLMs isn't that they're useless or immoral. It's that they're mostly useless and immoral, on top of causing higher emissions and making it harder to find actual results as AI-generated slop combines with SEO. They're also normalizing collection of any and all user data for training purposes, including private data such as health tracking apps, personal emails, and direct messages. Half-baked AI features aren't making computers better, they're actively making computers worse.

And if I asked you 2 years ago I bet you'd think LLMs would have gotten a lot better by now :)

My current phone has less utility than the phone I had in 2018, which had a headphone jack, SD card slot, IR emitter (I could use it as a TV remote!), heart rate sensor, and a decent camera.

My current laptop is less upgradable than pretty much anything that came out in 2010. The storage uses a technically standard but uncommon drive size, and the wifi and RAM are both soldered on. It is faster and has a nicer screen, but DRM in web browsers makes it hard to take advantage of that screen, and bloated Electron apps make it not feel much faster.

Oh, but here's the catch! Now, thanks to a significant amount of stolen data being used to train some autocorrect, my computer can generate code that's significantly worse than what I can write as a junior software dev with under a year of job experience, and takes twice as long to debug. It can also generate uncanny-valley-level images that look exactly like I typed in a one-sentence prompt to get them.

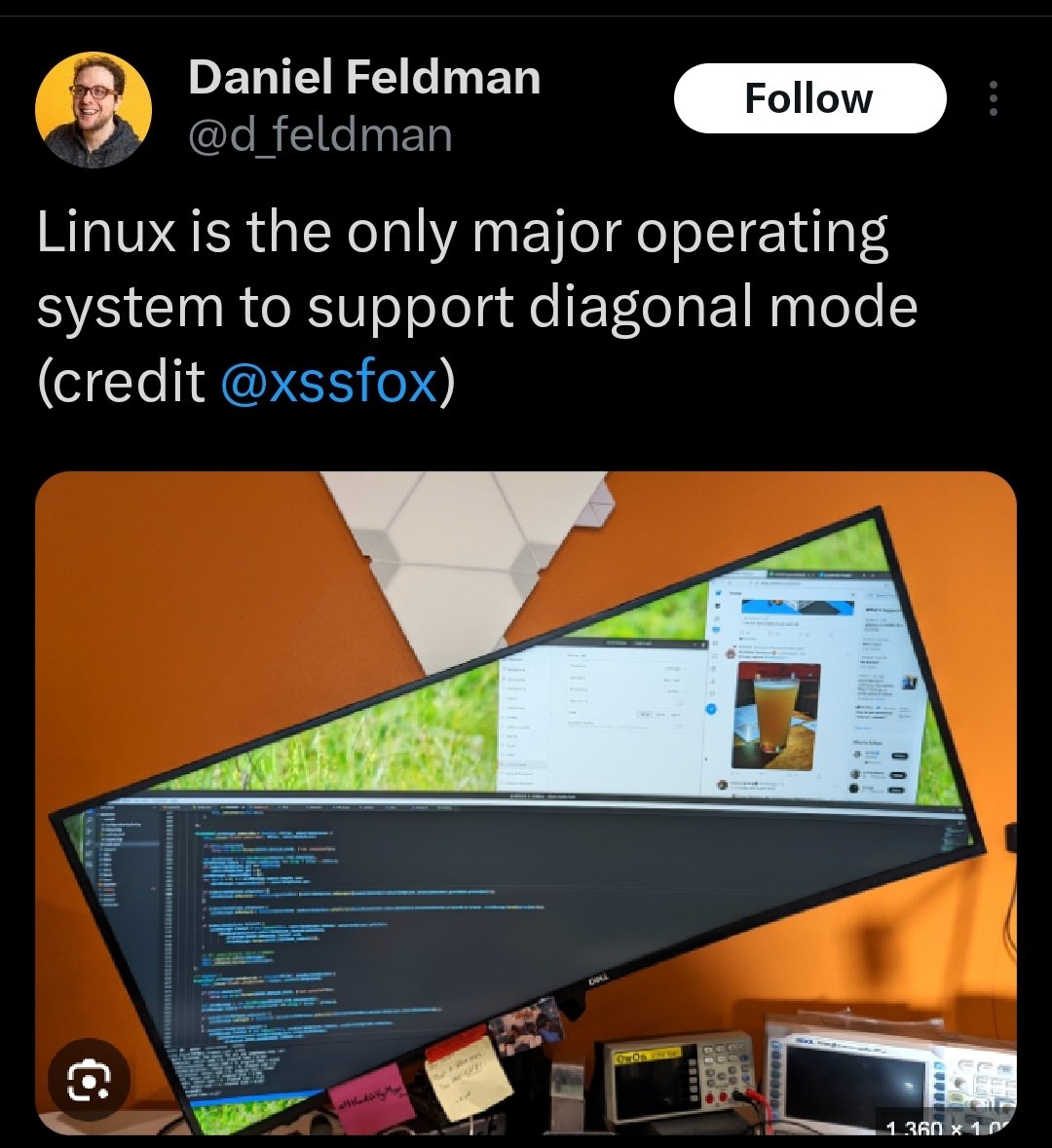

Archinstall exists, which does something similar but with a TUI. I disagree that it's as easy as Debian's graphical installer, but installing Arch is significantly easier than it was before archinstall existed.

Internet sarcasm is hard. Poe's law and all, there's probably someone who would say this unironically

I like the idea of the local feed, especially for smaller, less generalized instances, but the default should definitely be federated. The wording could also be changed, if only because the word "federated" would probably be confusing to non-technical people. Replacing it with something like "All" might be a better idea.

Hey, graduating Gen Z here, where are those mythical high-paying remote jobs? Hell, where's somewhere that will actually look at my resume? People who got hired during COVID got laid off, and now we're competing with people who have 2-4 years of experience for junior positions. Inflation is significantly higher, and paying for college and rent didn't exactly get easier. How can you look at the current situation and say we have it easy, just because you also had it rough?