this post was submitted on 26 Feb 2024

748 points (95.7% liked)

Programmer Humor


Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code, there's also Programming Horror.

Rules

- Keep content in English

- No advertisements

- Posts must be related to programming or programmer topics

founded 2 years ago


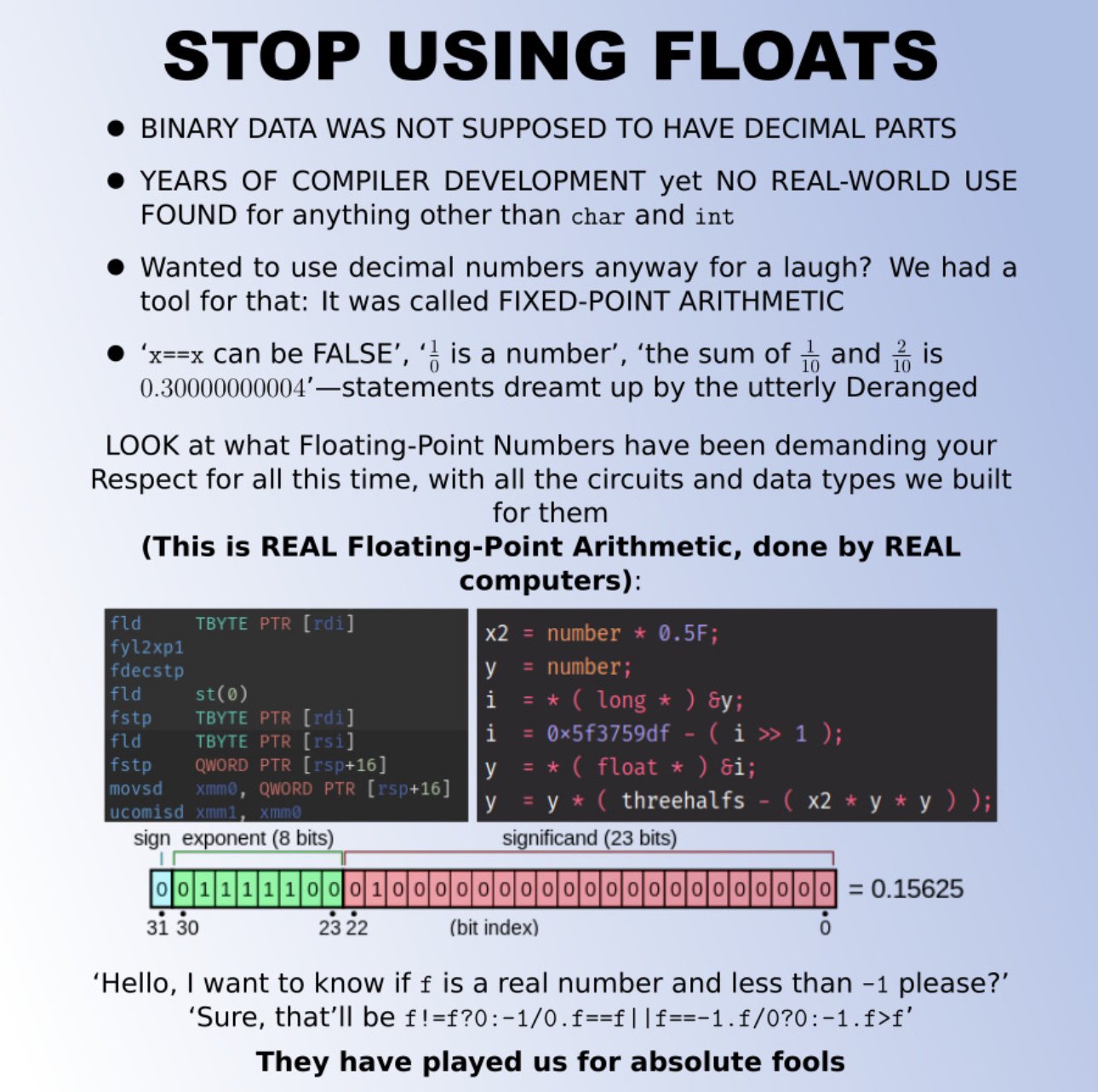

There are probably a lot of scientific applications (e.g. statistics, audio, 3D graphics) where exponential notation is the norm and there’s an understanding about precision and significant digits/bits. It’s a space where fixed-point would absolutely destroy performance, because every value would need as many bits as it takes to store your largest terms. Yes, NaN and negative zero are utter disasters in the corners of the IEEE spec, but so is trying to do math with 256-bit integers.
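To put a rough number on that, here is a minimal Python sketch. It assumes IEEE 754 doubles (what CPython's `float` is on mainstream platforms) and Python 3.9+ for `math.ulp`. It estimates how many bits a fixed-point format would need to cover a double's full range at its finest resolution. The variable names are just for illustration.

```python
import math
import sys

# The largest finite double is m * 2**int_bits with 0.5 <= m < 1,
# so its integer part needs int_bits bits.
_, int_bits = math.frexp(sys.float_info.max)

# math.ulp(0.0) is the smallest positive subnormal double.
# It equals 0.5 * 2**exp, i.e. 2**(exp - 1), so resolving it
# takes 1 - exp fractional bits.
_, exp = math.frexp(math.ulp(0.0))
frac_bits = 1 - exp

# One extra bit for the sign.
fixed_point_bits = int_bits + frac_bits + 1

print(int_bits, frac_bits, fixed_point_bits)
```

A double gets away with 64 bits because it only keeps 53 significant bits at whatever scale the value happens to be.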

For a practical explanation about how stark a difference this is, the PlayStation (one) uses an integer z-buffer (“fixed point”). This is responsible for the vertex popping/warping that the platform is known for. Floating-point z-buffers became the norm almost immediately after the console’s launch, and we’ve used them ever since.

While it's true the PS1 couldn't do floating point math, it did NOT have a z-buffer at all.

https://www.ncesc.com/gaming-faq/does-ps1-have-z-buffer/

What's the problem with -0?

It conceptually makes sense for negative values too close to 0 to be represented as -0.

In practice I have never seen a problem with -0.
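For anyone curious where -0 does show up, a small Python sketch (assuming IEEE 754 doubles; the variable names are made up for the example). It compares equal to 0, but it keeps the sign, and a few functions care about that.

```python
import math

# A negative product that underflows keeps its sign and becomes -0.0.
underflowed = -1e-200 * 1e-200

# -0.0 and 0.0 compare equal...
equal_to_zero = underflowed == 0.0

# ...but the sign bit is still there.
sign = math.copysign(1.0, underflowed)
text = repr(underflowed)

# atan2 is one place where the sign of zero changes the answer.
angle_pos = math.atan2(0.0, 0.0)
angle_neg = math.atan2(0.0, -0.0)

print(equal_to_zero, sign, text, angle_pos, angle_neg)
```

So it is mostly invisible unless you print it or hit a function with a branch cut at zero.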

On NaN: While its use cases can nowadays be replaced with language constructs like result types, it was created before exceptions or sum types were common in mainstream languages. The way it propagates kind of mirrors Haskell's monadic `Maybe`.

We should be demanding more and better wrapper types from our language/standard library designers.
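A rough sketch of the NaN/Maybe comparison in Python, using `None` as a stand-in for Haskell's `Nothing`. The helpers `bind`, `safe_div` and `safe_sqrt` are hypothetical names invented for this example, not part of any library.

```python
import math
from typing import Callable, Optional

# NaN: once it appears, every later arithmetic step keeps it.
nan_start = float("inf") - float("inf")
nan_result = (nan_start * 2.0) + 1.0

# Maybe-style chaining: a failed step short-circuits everything after it.
def bind(value: Optional[float],
         f: Callable[[float], Optional[float]]) -> Optional[float]:
    return None if value is None else f(value)

def safe_div(a: float, b: float) -> Optional[float]:
    return None if b == 0 else a / b

def safe_sqrt(x: float) -> Optional[float]:
    return None if x < 0 else math.sqrt(x)

failed = bind(bind(safe_div(1.0, 0.0), safe_sqrt), lambda x: x + 1.0)
succeeded = bind(bind(safe_div(8.0, 2.0), safe_sqrt), lambda x: x + 1.0)

print(math.isnan(nan_result), failed, succeeded)
```

The big difference is that the wrapper version forces you to look at the failure case, while NaN silently rides along as an ordinary float (and is not even equal to itself).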