For legibility I split the post into: my current setup; the problem I'm trying to solve; the constraints for solving the problem; what I've tried and failed to do; and key questions.

When roasting me in the comments, go nuts. I'm not a complete beginner, but I wouldn't rank myself as an intermediate yet. My lab is almost entirely tteck scripts, and what isn't built by tteck is Docker containers. My inexperience informs some of my decisions. For example, I'm using Nginx Proxy Manager (NPM) because the Nginx documentation is beyond me: I couldn't write an nginx.conf, and NPM makes reverse proxies accessible to me.

My Current setup

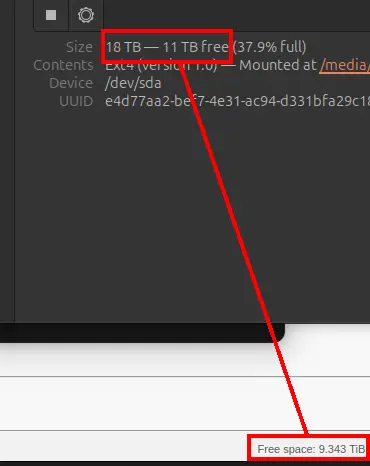

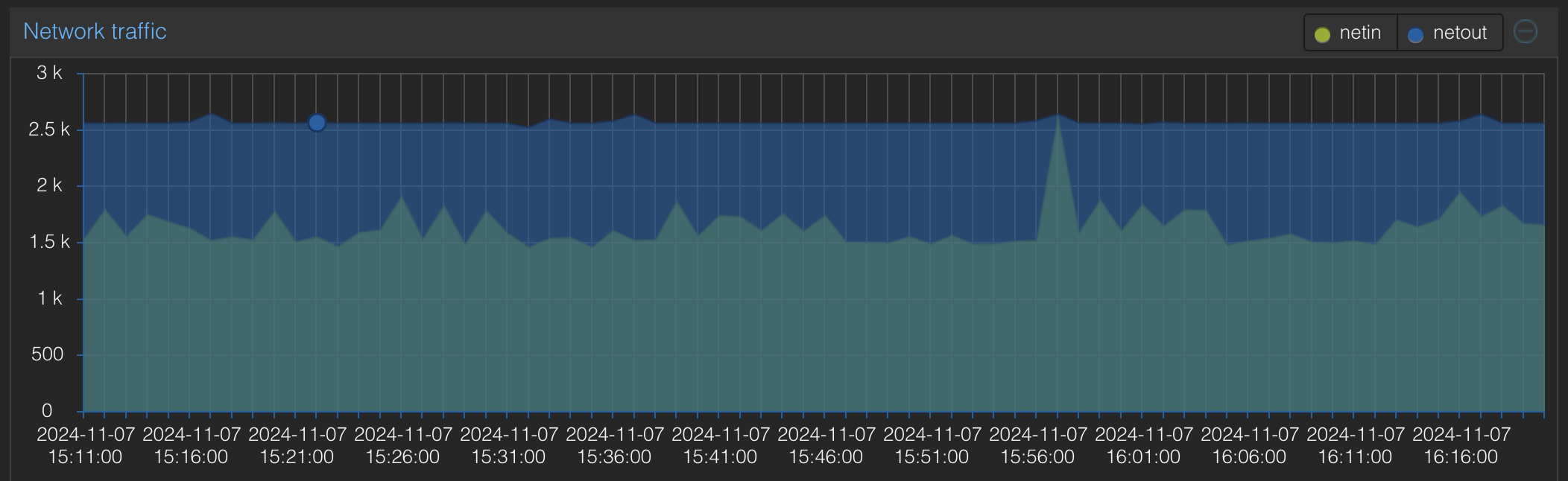

I have a Proxmox-based home server running multiple services as LXCs (a servarr, jellyfin, immich, syncthing, paperless, etc.). Locally, my fiancée and I connect to our services using pihole-NginxProxyManager (NPM) @ "service.server", and that's good. Remotely, we connect to key services over tailscale using tailscale's MagicDNS @ "lxcname:port", and that works... fine. We each have a list of "service: address" and it's tolerable. Finally, my parents have a home server that I manage. It is Debian based, with much the same services, all running in Docker (I need to move it to Podman, but I got shit to do). We run each other's off-site backup over tailscale-syncthing and that seems good. But our media and photos are our own ecosystems.

The Problem

I would like to give someone (Bob) a box (a Pi, a minipc, a whatever). The sole function of this box is to act as a gateway for Bob's devices to connect to key LXCs on my tailnet. Thus Bob can enjoy my legally obtained media and back up their photos.

The constraints

These are in order of importance; I would be giving ground from the bottom up. The top two are non-negotiable though.

A VPS has low to zero WAF. Otherwise I would have followed the well trodden ground.

Failsafe. If the box dies, Bob can't access jellyfin until I can be arsed to fix it. Otherwise, they experience no other inconvenience.

No requirement to install tailscale on Bob's devices. Some devices aren't compatible with tailscale, e.g. an Amazon Fire Stick. A different Bob doesn't want to install a VPN on their phone. Some devices I don't trust to be up to date and secure, and I don't want them on my tailnet... I have no idea if the one degree of separation is any more secure, but having them on there directly gives me the willies.

I'm pretty sure I can solve this using pihole-nginx-tailscale with my skillset. But then I have to get into Bob's router, and Bob might not like that. If I could just give them a preconfigured box, that would be ideal. With the router approach they would have pretty addresses though.

I don't currently have a domain, though I do plan to get one.

My attempts and failures to solve the problem.

I've built a little VM to act as a box (box); it requests a static IP. On it I installed Mint (production would probably be DietPi or Debian), Tailscale, Docker (bare metal) and NPM as a container. In NPM I set a proxy host 192.168.box.IP to forward to 100.jellyfin.tailscale.IP:8096. I tested it by going to box.IP and jellyfin works. Next up, Jellyseerr... I can't make another proxy host with the same domain name for obvious reasons.

I tried "box.IP:8096" as a domain name and NPM rejected it. I tried "box.IP/jellyfin" and NPM rejected that too (I'll try Locations in a bit). I tried both "service.box.IP" and "box.IP.service" and I'd obviously need to set up DNS for that. Look, I'm an idiot, I make no apologies. I know I can solve it by getting into their router, setting Pihole as their DNS, and going that route.
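To make the "box.IP:port" idea concrete, here is a minimal sketch of a port-per-service forwarder: the box listens on one local port per service and pipes raw TCP to the matching LXC over the tailnet. This is not how NPM works internally and not something I have running; it is plain Python for illustration. The `100.64.0.x` addresses are placeholders, 5055 is assumed to be Jellyseerr's default port, and the names `FORWARDS`, `pipe`, `make_handler` and `main` are made up for the example.

```python
import asyncio

# Hypothetical mapping: port on the box -> (tailnet host, port).
# The 100.64.0.x addresses are placeholders, not real tailnet IPs.
FORWARDS = {
    8096: ("100.64.0.10", 8096),  # jellyfin
    5055: ("100.64.0.11", 5055),  # jellyseerr (assumed default port)
}


async def pipe(reader, writer):
    """Copy bytes one way until the sender closes, then close the receiver."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass
    finally:
        writer.close()


def make_handler(host, port):
    """Build a connection handler that forwards every client to host:port."""
    async def handle(client_reader, client_writer):
        try:
            up_reader, up_writer = await asyncio.open_connection(host, port)
        except OSError:
            # Upstream unreachable (tailnet down, LXC off): drop the client.
            client_writer.close()
            return
        # Shuttle traffic in both directions at once.
        await asyncio.gather(
            pipe(client_reader, up_writer),
            pipe(up_reader, client_writer),
        )
    return handle


async def main():
    """Listen on every port in FORWARDS and forward until stopped."""
    servers = []
    for local_port, (host, port) in FORWARDS.items():
        server = await asyncio.start_server(
            make_handler(host, port), "0.0.0.0", local_port
        )
        servers.append(server)
    await asyncio.gather(*(s.serve_forever() for s in servers))

# To run on the box: asyncio.run(main())
```

With something like this, Bob would type "box.IP:8096" for jellyfin and "box.IP:5055" for jellyseerr. No DNS is involved, but the addresses are not pretty.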

Next I tried Locations. I set the required hostname and port to jellyfin.lxc.tailnet.IP:8096, set /jellyseerr to go to jellyseerr.lxc.tailnet.IP, and set up immich the same way. Then I tested the services. Jellyfin works. Jellyseerr connects, then immediately rewrites the URL from "box.IP/jellyseerr" to "box.IP/login" and hangs. Immich does much the same thing. In desperation I asked ChatGPT... the less said about that the better. Just know I've been at this a while.
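One plausible explanation for the jump from "box.IP/jellyseerr" to "box.IP/login" is that the app redirects to a root-relative path ("/login"), which a browser resolves against the host root and so drops the subpath. That mechanism is an assumption on my part, not something I have confirmed in Jellyseerr or Immich. The sketch below only shows how URL resolution behaves, using Python's standard `urljoin`; `192.168.1.50` is a stand-in for box.IP.

```python
from urllib.parse import urljoin

# Page Bob is on when the proxied app sends its redirect.
# 192.168.1.50 is a placeholder for box.IP.
current = "http://192.168.1.50/jellyseerr/"

# Root-relative redirect: resolved against the host root,
# so the /jellyseerr prefix is lost.
lost = urljoin(current, "/login")

# Relative redirect (no leading slash): resolved against the
# current path, so the prefix survives.
kept = urljoin(current, "login")

print(lost)  # http://192.168.1.50/login
print(kept)  # http://192.168.1.50/jellyseerr/login
```

If that is what is happening, the proxy would have to rewrite the redirect, or the app would need a base-path setting, for the Locations approach to work.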

Here's where I'm at: I have two Google terms left to learn about in an attempt to solve this. The first is "iptables", the second is "tailscale subnet routers", and I have effort left to learn about one of them.

During this process I learned I could solve this problem thusly: give Bob a box. On this box are a number of virtual machines (VMs). Each VM is dedicated to a single service, and what the fuck is that for a solution?! It would satisfy all of my constraints though, it's just ugly.

Key questions

Is my problem solvable by just giving someone a Pi with the setup pre-installed? If not, I'll go the pihole-npm-tailnet route and be happy. Bob'll connect to "service.box" and it'll proxy to "service.lxc.tailnet.IP".
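For clarity, this is roughly what I understand the "service.box" approach to be doing: a name-based reverse proxy picks the upstream from the hostname the client asked for, which is why Bob's devices need DNS that resolves those names to the box. A minimal sketch of that lookup, with hypothetical names (`UPSTREAMS`, `pick_upstream`) and placeholder tailnet addresses:

```python
from typing import Optional

# Hypothetical hostnames and placeholder tailnet addresses.
UPSTREAMS = {
    "jellyfin.box": "http://100.64.0.10:8096",
    "jellyseerr.box": "http://100.64.0.11:5055",
}


def pick_upstream(host_header: str) -> Optional[str]:
    """Return the upstream for a request's Host header, or None if unknown."""
    # Drop any ":port" suffix and normalise case before the lookup.
    host = host_header.split(":", 1)[0].strip().lower()
    return UPSTREAMS.get(host)


print(pick_upstream("jellyfin.box"))    # http://100.64.0.10:8096
print(pick_upstream("192.168.1.50"))    # None
```

A bare IP carries no service name, so a proxy working this way has nothing to distinguish jellyfin from jellyseerr on the same address.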

Assuming I can give them a box, is nginx the way forward? Should I be learning Locations configs to stop jellyseerr's URL rewrite, forcing it to go to "box.IP/jellyseerr/login"? Or is there some other Google term I should be learning about?

Assuming I can give them a box, and nginx alone is not useful to me, is it subnet routers I should be learning about? They seem like a promising solution, but I'll need to learn how the addressing works... or how any of it works... iptables seems like another solution on the face of it. But with both, I don't know where to send Bob without doing local DNS/CNAME shenanigans.

Finally, assuming I'm completely in the weeds and hopelessly lost... what is it I should be learning about? A VPS, I guess... There's a reason everyone is going that route. Documentation on this "box" concept isn't readily findable for a reason, I imagine.