me after 15 years of intermittent learning self hosting:

i have the one random office PC that runs minecraft

....yeah that's it

With the enshittification of streaming platforms, a Kodi or Jellyfin server would be a great starting point. In my case, I have both, and the Kodi machine gets the files from the Jellyfin machine through NFS.

Or Home Assistant to help keep IoT devices in check, since they tend to be more IoS. Or a Nextcloud server to try to degoogle at least a little bit.

Maybe a personal Friendica instance for your LAN so your family can get their Facebook addiction without giving their data to Meta?

Additionally, using Jottacloud with two VPSes (one of them built on EPYC, like the ones from OVHcloud) can get you a really good download server and streaming server for about £30 a month, which is the same as having Netflix and Disney+, except now you can have anything you want.

I have a Contabo 4-core, 8 GB RAM VPS that handles downloading content.

An OVH 4-core, 8 GB VPS that handles Emby (I keep trying to go back to Jellyfin, but it's just slightly slower than Emby at transcoding and I need to squeeze as much performance out of my VPS as possible so... Maybe one day, Jelly)

And I have a really good streaming experience with subtitles that don't put big black boxes on the screen making 1/8th of the screen non viewable.

Nice

Only host what you need.

This seems like work but from/for home.

You should see some of the literal data centers folks have in their houses. It's nuts.

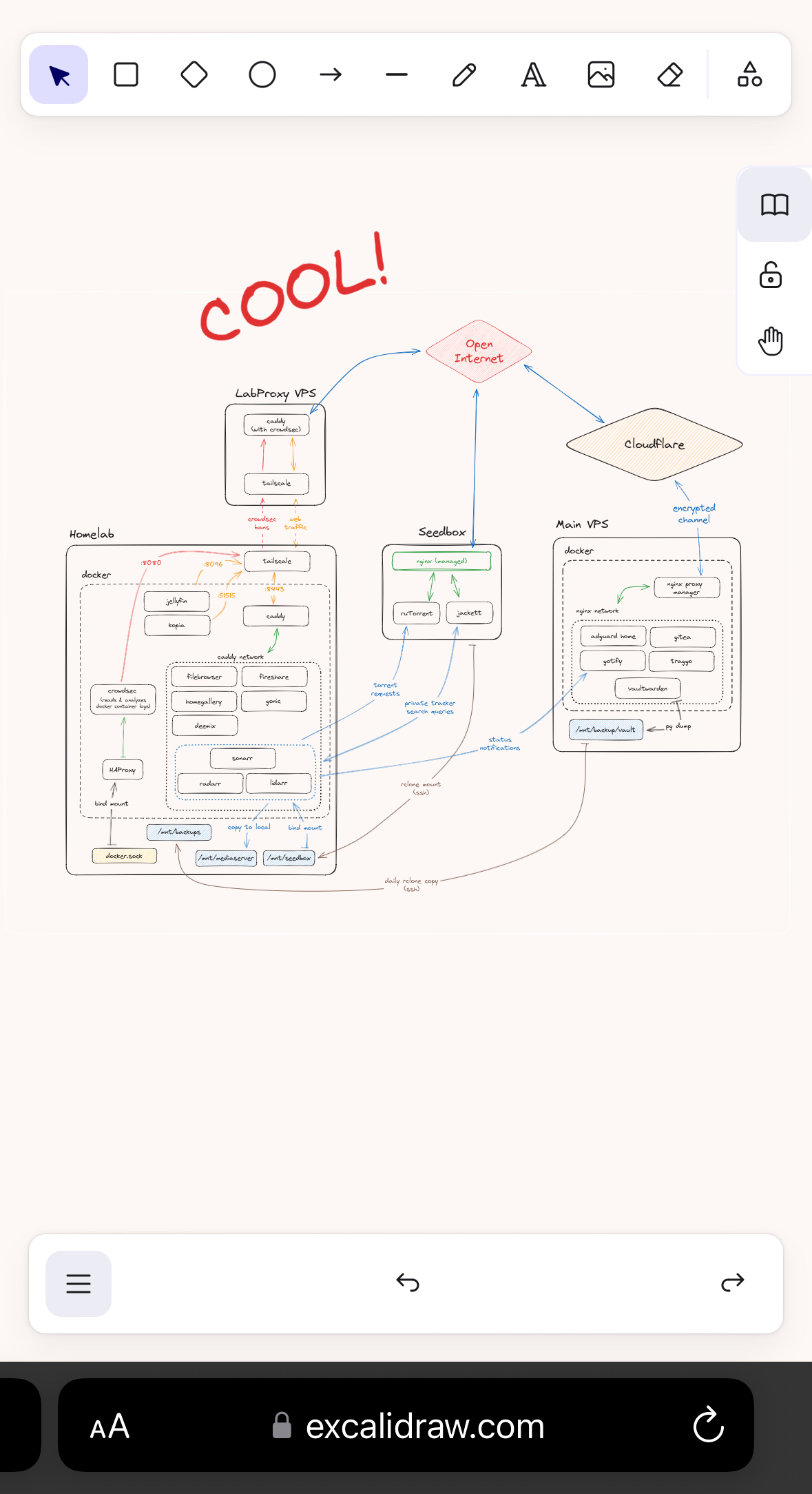

I've saved this. I set up unraid and docker, have the home media server going, but I'm absolutely overwhelmed trying to understand reverse proxy, Caddy, NGINX and the security framework. I guess that's my next goal.

Hey! I'm also running my homelab on unraid! :D

The reverse proxy basically allows you to open only one port on your machine for generic web traffic, instead of opening (and exposing) a port for each app individually. You then address each app by a certain hostname / Domain path, so either something like movies.myhomelab.com or myhomelab.com/movies.
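To make the hostname / path idea concrete, here is a minimal Python sketch of the lookup a reverse proxy does for each request. It is not how Caddy or NGINX are implemented; the hostnames, the second app and the backend ports are made up for illustration.

```python
# Hypothetical routing tables: hostnames, paths and backend ports are assumptions.
HOST_ROUTES = {
    "movies.myhomelab.com": "http://127.0.0.1:8096",
    "cloud.myhomelab.com": "http://127.0.0.1:8081",
}
PATH_ROUTES = {
    "/movies": "http://127.0.0.1:8096",
}
BASE_DOMAIN = "myhomelab.com"


def pick_upstream(host_header, path="/"):
    """Return the internal address for a request, or None if nothing matches."""
    # Host headers may carry a ":port" suffix and mixed case, so normalise first.
    hostname = host_header.split(":")[0].lower()

    # Style 1: one subdomain per app (movies.myhomelab.com).
    if hostname in HOST_ROUTES:
        return HOST_ROUTES[hostname]

    # Style 2: one domain, one path prefix per app (myhomelab.com/movies).
    if hostname == BASE_DOMAIN:
        for prefix, upstream in PATH_ROUTES.items():
            if path == prefix or path.startswith(prefix + "/"):
                return upstream
    return None
```

Either way, only the proxy's port is reachable from outside; the per-app ports stay internal.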

The issue is that you'll have to point your domain directly at your home IP. Which then means that whenever you share a link to an app on your homelab, you also indirectly leak your home location (to the degree that IP location allows). Which I simply do not feel comfortable with. The easy solution is running the traffic through Cloudflare (this can be set up in 15 minutes), but they impose traffic restrictions on free plans, so it's out of the question for media or cloud apps.

That's what my proxy VPS is for. Basically cloudflare tunnels rebuilt. An encrypted, direct tunnel between my homelab and a remote server in a datacenter, meaning I expose no port at home, and visitors connect to that datacenter IP instead of my home one. There is also no one in between my two servers, so I don't give up any privacy. Comes with near zero bandwidth loss in both directions too! And it requires near zero computational power, so it's all running on a machine costing me 3,50 a month.

I appreciate this thoughtful reply. I read it a few times, I think I understand the goal. Basically you're systematically closing off points that leak private information or constitute a security weakness. The IP address and the ports.

For the VPS, in order for that to have no bandwidth loss, does that mean it's only used for domain resolution but clients actually connect directly to your own server? If not and if all data has to pass through a data center, I'd assume that makes service more unreliable?

I'd recommend using Borgbackup over SSH, instead of just using rclone for backups. As far as I know, rclone is like rsync in that you only have one copy of the data. If it gets corrupted at the source, and that gets synced across, your backup will be corrupted too. Borgbackup and Borgmatic are a great way to do backups, and since it's deduplicated you can usually store months of daily backups without issue. I do daily backups and retain 7 daily backups, 4 weekly backups, and 'infinite' monthly backups (until my backup server runs out of space, then I'll start pruning old monthly backups).
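As a rough illustration of what a "7 daily, 4 weekly, infinite monthly" retention rule ends up keeping, here is a simplified Python sketch. It is an assumption-laden model, not Borg's actual prune algorithm (Borg's real rules interact with each other in ways this ignores); the function name and defaults are made up.

```python
from datetime import date, timedelta


def select_keepers(backup_dates, daily=7, weekly=4):
    """Return the set of backup dates to keep; everything else could be pruned."""
    newest_first = sorted(set(backup_dates), reverse=True)
    keep = set()

    def keep_latest_per_period(period_key, limit):
        # Keep the newest backup of each period, for the `limit` most recent
        # periods that contain a backup (limit=None means no limit).
        seen = set()
        for d in newest_first:
            key = period_key(d)
            if key in seen:
                continue
            if limit is not None and len(seen) >= limit:
                break
            seen.add(key)
            keep.add(d)

    keep_latest_per_period(lambda d: d, daily)                      # per day
    keep_latest_per_period(lambda d: d.isocalendar()[:2], weekly)   # per ISO week
    keep_latest_per_period(lambda d: (d.year, d.month), None)       # per month, forever
    return keep


# Usage: 60 daily backups ending on 2024-03-31.
end = date(2024, 3, 31)
kept = select_keepers([end - timedelta(days=i) for i in range(60)])
```

The point is that the number of kept archives grows slowly (roughly one per month after the first few weeks), which together with deduplication is why months of daily backups stay cheap.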

Borgbackup also has an append-only mode, which prevents deleting backups. This protects the backup in case the client system is hacked. Right now, someone that has unauthorized access to your main VPS could in theory delete both the system and the backup (by connecting via rclone and deleting it). Borg's append-only mode can be enabled per SSH key, so for example you could have one SSH key on the main VPS that is in append-only mode, and a separate key on your home PC that has full access to delete and prune backups. It's a really nice system overall.

You're right, that's one of the remaining pain points of the setup. The rclone connections are all established from the homelab, so potential attackers wouldn't have any traces of the other servers. But I'm not 100% sure if I've protected the local backup copy from a full deletion.

The homelab is currently using Kopia to push some of the most important data to OneDrive. From what I've read it works very similarly to Borg (deduplicate, chunk based, compression and encryption) so it would probably also be able to do this task? Or maybe I'll just move all backups to Borg.

Do you happen to have a helpful opinion on Kopia vs Borg?

I haven't tried Kopia, so unfortunately I can't compare the two. A lot of the other backup solutions don't have an equivalent to Borg's append-only mode though.

Very nice setup imho. Quite a bit more complicated than mine - mine is basically just the left box without being behind a VPS or anything. I don't expose anything through Caddy except Jellyfin. I'm also running fail2ban in front of my services, so that if it gets hit with too many 404s because someone is poking around, they get IP banned for 30d
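The "too many 404s, then a 30d ban" idea can be sketched in a few lines of Python. This is not fail2ban's code (fail2ban works from log filters plus its `maxretry`, `findtime` and `bantime` settings); the threshold and time window below are assumptions, only the 30-day ban comes from the comment above.

```python
from collections import defaultdict, deque

MAX_404S = 10              # assumed threshold, not stated in the thread
FIND_WINDOW = 600          # assumed: only count 404s from the last 10 minutes
BAN_SECONDS = 30 * 86400   # 30 days, as described above


class NotFoundBanner:
    def __init__(self):
        self.hits = defaultdict(deque)  # ip -> timestamps of recent 404s
        self.banned_until = {}          # ip -> unix time when the ban expires

    def is_banned(self, ip, now):
        return self.banned_until.get(ip, 0) > now

    def record(self, ip, status, now):
        """Feed one access-log entry; return True if the IP is banned afterwards."""
        if self.is_banned(ip, now):
            return True
        if status != 404:
            return False
        recent = self.hits[ip]
        recent.append(now)
        # Forget 404s that fell out of the counting window.
        while recent and recent[0] <= now - FIND_WINDOW:
            recent.popleft()
        if len(recent) >= MAX_404S:
            self.banned_until[ip] = now + BAN_SECONDS
            recent.clear()
            return True
        return False
```

A real setup would read timestamps and status codes from the proxy's access log and enforce the ban in the firewall rather than in application code.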

I'm still on the fence if I want to expose Jellyfin publicly or not. On the one hand, I never really want to stream movies or shows from abroad, so there's no real need. And in desperate times I can always connect to Tailscale and watch that way. But on the other, it's really cool to simply have a web accessible Netflix. Idk.

Architecture looks dope

Hope you've safeguarded your setup by writing a provisioning script in case anything goes south.

I had to reinstall my server from scratch twice and can't fathom having to reconfigure everything manually anymore

Nope, don't have that yet. But since all my compose and config files are neatly organized on the file system, by domain and then by service, I tar up that entire docker dir once a week and pull it to the homelab, just in case.

How have you set up your provisioning script? Any special services or just some clever batch scripting?
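For anyone wanting to copy the weekly "tar up the docker dir" habit, a minimal Python sketch might look like the following. The function name, the archive naming scheme and the directory layout are assumptions, and scheduling (cron or similar) plus pulling the file to the homelab are left out.

```python
import tarfile
from datetime import date
from pathlib import Path


def archive_docker_dir(docker_dir, dest_dir, today=None):
    """Pack docker_dir into dest_dir/docker-YYYY-MM-DD.tar.gz and return the archive path."""
    docker_dir = Path(docker_dir)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)

    stamp = (today or date.today()).isoformat()
    archive = dest_dir / f"docker-{stamp}.tar.gz"

    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the directory name,
        # so compose and config files restore into the same by-domain layout.
        tar.add(docker_dir, arcname=docker_dir.name)
    return archive
```

Keep `dest_dir` outside `docker_dir`, otherwise the archive would try to include itself.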

Old school Ansible at first, then I ditched it for Cloudbox (an OSS provisioning script for media servers)

Works wonders for me but I believe it's currently stuck on a deprecated Ubuntu release

Possible for a dark mode version XD? excalidraw can do that.

Of course! here you go: https://files.catbox.moe/hy713z.png. The image has the raw excalidraw data embedded, so you can import it to the website like a save file and play around with the sorting if need be.

Thanks for the dark mode link!!

I was also going to mention draw.io

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| HTTP | Hypertext Transfer Protocol, the Web |
| HTTPS | HTTP over SSL |
| IP | Internet Protocol |
| Plex | Brand of media server package |
| SSH | Secure Shell for remote terminal access |
| SSL | Secure Sockets Layer, for transparent encryption |
| TCP | Transmission Control Protocol, most often over IP |
| VPN | Virtual Private Network |
| VPS | Virtual Private Server (opposed to shared hosting) |
| k8s | Kubernetes container management package |
| nginx | Popular HTTP server |

11 acronyms in this thread; the most compressed thread commented on today has 15 acronyms.

[Thread #473 for this sub, first seen 2nd Feb 2024, 05:25]

How do you like crowdsec? I've used it on a tiny VPS (2 vCPU / 1 GB RAM) and it hogs my poor machine. I also found it to have a bit of a learning curve compared to fail2ban (which is much simpler, but doesn't play well with Caddy by default).

Would be happy to see your Caddy / Crowdsec configuration.

The crowdsec agent running on my homelab (8 Cores, 16GB RAM) is currently sitting idle at 96.86MiB RAM and between 0.4 and 1.5% CPU usage. I have a separate crowdsec agent running on the Main VPS, which is a 2 vCPU 4GB RAM machine. There, it's using 1.3% CPU and around 2.5% RAM. All in all, very manageable.

There is definitely a learning curve to it. When I first dove into the docs, I was overwhelmed by all the new terminology, and wrapping my head around it was not super straightforward. Now that I've had some time with it though, it's become more and more clear. I've even written my own simple parsers for apps that aren't on the hub!

What I find especially helpful are features like explain, which allow me to pass in logs and simulate which step of the process picks that up and how the logs are processed, which is great when trying to diagnose why something is or isn't happening.

The crowdsec agent running on my homelab is running from the docker container, and uses pretty much exactly the stock configuration. This is how the docker container is launched:

    crowdsec:
      image: crowdsecurity/crowdsec
      container_name: crowdsec
      restart: always
      networks:
        socket-proxy:
      ports:
        - "8080:8080"
      environment:
        DOCKER_HOST: tcp://socketproxy:2375
        COLLECTIONS: "schiz0phr3ne/radarr schiz0phr3ne/sonarr"
        BOUNCER_KEY_caddy: as8d0h109das9d0
        USE_WAL: true
      volumes:
        - /mnt/user/appdata/crowdsec/db:/var/lib/crowdsec/data
        - /mnt/user/appdata/crowdsec/acquis:/etc/crowdsec/acquis.d
        - /mnt/user/appdata/crowdsec/config:/etc/crowdsec

Then there's the Caddyfile on the LabProxy, which is where I handle banned IPs so that their traffic doesn't even hit my homelab. This is the file:

    {
        crowdsec {
            api_url http://homelab:8080
            api_key as8d0h109das9d0
            ticker_interval 10s
        }
    }

    *.mydomain.com {
        tls {
            dns cloudflare skPTIe-qA_9H2_QnpFYaashud0as8d012qdißRwCq
        }
        encode gzip
        route {
            crowdsec
            reverse_proxy homelab:8443
        }
    }

Keep in mind that the two machines are connected via tailscale, which is why I can pass in the crowdsec agent with its local hostname. If the two machines were physically separated, you'd need to expose the REST API of the agent over the web.

I hope this helps clear up some of your confusion! Let me know if you need any further help with understanding it. It only gets easier the more you interact with it!

don't worry, all credentials in the two files are randomized, never the actual tokens

What software did you use to make this image? It's very well done

Thank you! It's done in excalidraw.com. Not the most straightforward for flowcharts, took me some time to figure out the best way to sort it all. But very powerful once you get into the flow.

If you're feeling funny, you can download the original image from the catbox link and plug it right back into the site like a save file!

Now just gotta understand everything beyond… Jellyfin haha

Draw.io is also pretty good, or Lucidchart

I saved this! Yeah, it seems like a lot of work, but I got inspired again (I had a slight self-hosting burnout and nuked my Raspberry Pi setup about a year ago), so I appreciate it. :) Can I ask what hardware you run this on? Edit: I just wanted to ramble some more: I fired up my RPi 4 again just last week and set it up as just a barebones VPS with WireGuard, Samba, Jellyfin and Pi-hole + Unbound (so as not to burn myself out again :D)

Glad to have gotten you back into the grind!

My homelab runs on an N100 board I ordered on Aliexpress for ~150€, plus some 16GB Corsair DDR5 SODIMM RAM. The Main VPS is a 2 vCPU 4GB RAM machine, and the LabProxy is a 4 vCPU 4GB RAM ARM machine.

Remember, the more boxes you have, the more advanced you are as an admin! Once you do this job for money, the challenge is the exact opposite. The fewer parts you have, the better. The more vanilla they are, the better.

Absolutely! To be honest, I don't even want to have countless machines under my umbrella, and I constantly have consolidation in mind. But right now, each machine fulfills a separate purpose and feels justified in itself (homelab for large data, main VPS for anything that's operation-critical and can't afford power/network outages, and so on). So unless I find another purpose that none of the current machines can serve, I'll probably scale vertically instead of horizontally (is that even how you use that expression?)

I have taken a picture and shall study it

I've seen Caddy mentioned a few times recently, what do you like about it over other tools?

In addition to the other commenter and their great points, here's some more things I like:

I can answer this one, but mainly only in reference to the other popular solutions:

I am sorry, I am but a worm just starting Docker and I have two questions.

Say I set up pihole in a container. Then say I use Pihole's web UI to change a setting, like setting the web UI to the midnight theme.

Do changes persist when the container updates?

I am under the impression that a container updating is the old one being deleted and a fresh install taking its place. So all the changes in settings vanish.

I understand that I am supposed to write files to define parameters of the install. How am I supposed to know what to write to define the changes I want?

Sorry to hijack, the question doesn't seem big enough for its own post.

With containers, most will have a persistent volume that is mapped to the host filesystem. This is where your config data lives. When you update a container, only the image is replaced (the Pi-hole binaries), while the config files in the volume are left in place. Things like your block lists, custom DNS settings and theme settings will all remain.

What is the proxy in front of crowdsec for?

If you're referring to the "LabProxy VPS": So that I don't have to point a public domain that I (plan to) use more and more in online spaces to my personal IP address, allowing anyone and everyone to pinpoint my location. Also, I really don't want to mess with the intricacies of DynDNS. This solution is safer and more reliable than DynDNS and open ports on my router, which is not at all equipped to fend off attacks from the internet.

If you're referring to the caddy reverse proxy on the LabProxy VPS: I'm pointing domains that I want to funnel into my homelab at the external IP of the proxy VPS. The caddy server on that VPS reads these requests and reverse-proxies them onto the caddy-port from the homelab, using the hostname of my homelab inside my tailscale network. That's how I make use of the tunnel. This also allows me to send the crowdsec ban decisions from the homelab to the Proxy VPS, which then denies all incoming requests from that source IP before they ever hit my homelab. Clean and Safe!