this post was submitted on 11 Apr 2024

1226 points (95.5% liked)

Science Memes

Welcome to c/science_memes @ Mander.xyz!

I've also heard that, as far as we can figure, we've basically reached the limit on certain aspects of LLMs already. LLMs need a FUCK ton of data to be good, and we've already pumped them full of the entire internet, so all we can do now is marginally improve algorithms we barely understand. Think about that: the entire internet isn't enough to successfully train LLMs.

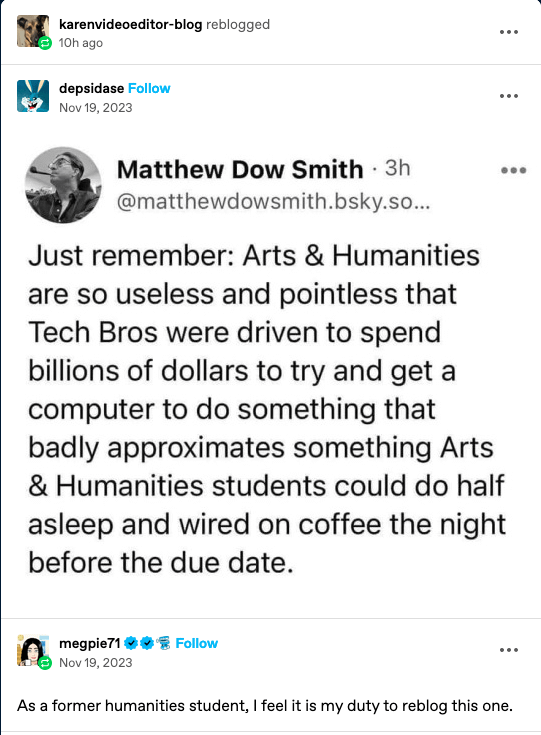

LLMs have taken some jobs already (like audio transcription, basic copyediting, and aspects of programming), we're just waiting for the industries to catch up. But we'll need to wait for a paradigm shift before they start producing pictures and books or doing complex technical jobs with few enough hallucinations that we can successfully replace people.

The (really, really, really) big problem with the internet is that so much of it is garbage data. The number of false and misleading claims spread endlessly on the internet is huge. To rule those beliefs out of the data set, you need something that can grasp the nuances of everything from published, peer-reviewed data that is actually deliberately misleading propaganda, to fringe conspiracy nuts who believe the Earth is controlled by lizards with planes and that only a spritz bottle full of vinegar can defeat them, and everything in between.

There is no person, book, journal, website, newspaper, university, or government that has reliably produced good, consistent help on questions of science, religion, popular lies, unpopular truths, programming, human behavior, economic models, and many, many other things that continuously have an influence on our understanding of the world.

We can't build an LLM that won't consistently be wrong until we can stop being consistently wrong.

Yeah, I've heard medical LLMs are promising when they've been trained exclusively on medical texts. Same with the AI that's been trained exclusively on DNA, etc.

My own personal belief is very close to what you've said. It's a technology that isn't new, but had been assumed to not be as good as compositional models because it would cost a fuck-ton to build and would result in dangerous hallucinations. It turns out that both are still true, but people don't particularly care. I also believe that one of the reasons why ChatGPT has performed so well compared to other LLM initiatives is because there is a huge amount of stolen data that would get OpenAI in a LOT of trouble.

IMO, the real breakthroughs will be in academia. Now that LLMs are popular again, we'll see more research into how they can be better utilised.

AFAIK, OpenAI got their training data from what was basically a free resource that they just had to request access to. Like everyone else, they didn't think much about it. No one could have predicted that it would be that valuable until after the fact; only in retrospect does it seem obvious.