this post was submitted on 08 Feb 2024

921 points (95.0% liked)

Programmer Humor

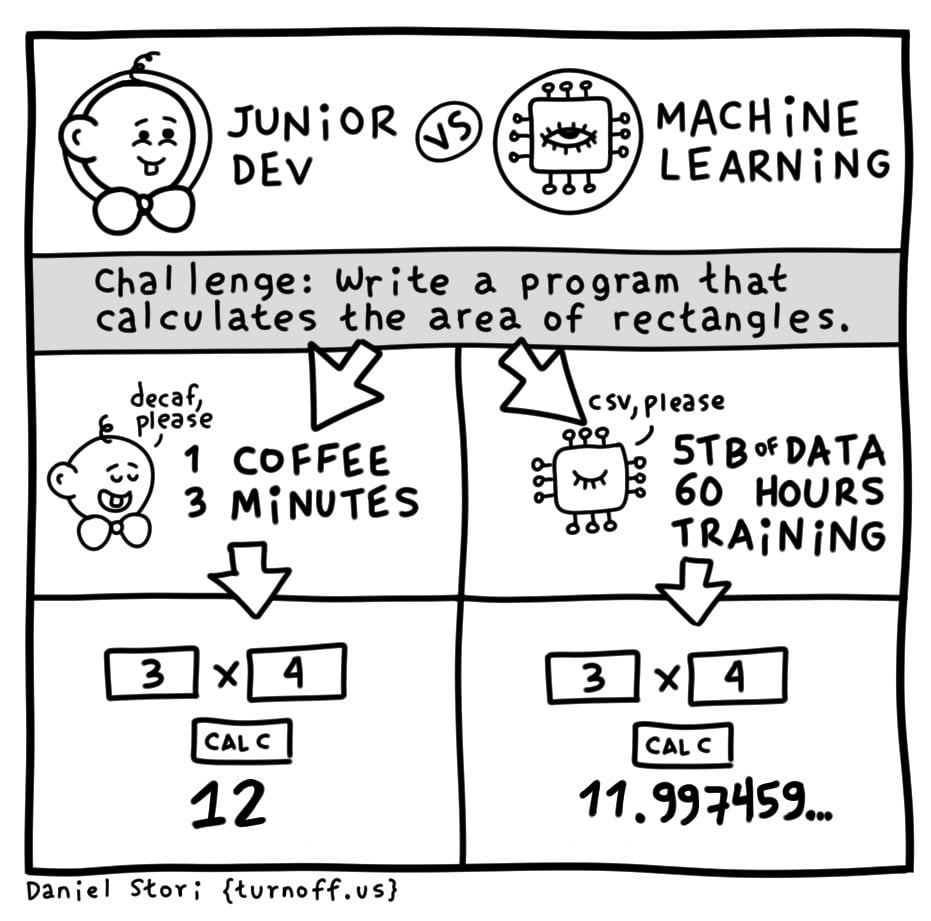

Agreed. If you need to calculate rectangles ML is not the right tool. Now do the comparison for an image identifying program.

If anyone's looking for the magic dividing line: ML is a very inefficient way to do anything, but it doesn't require us to actually solve the problem, just to have a bunch of examples. For very hard but commonplace problems, this is still revolutionary.
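To make the rectangle point concrete, here's a rough sketch of both approaches side by side. The "learned" version is a deliberately crude stand-in (a 1-nearest-neighbour lookup over examples, not a neural network), and the names `area`, `make_examples`, and `learned_area` are made up for illustration.

```python
def area(width, height):
    """The solved problem: one multiplication, exact for any input."""
    return width * height


def make_examples():
    """Hypothetical training set: 100 labelled rectangles with integer sides 1..10."""
    return [(w, h, w * h) for w in range(1, 11) for h in range(1, 11)]


def learned_area(examples, width, height):
    """Crude 'learned' model: return the area of the most similar known rectangle.

    It never uses the formula at prediction time, only the stored examples.
    """
    nearest = min(
        examples,
        key=lambda ex: (ex[0] - width) ** 2 + (ex[1] - height) ** 2,
    )
    return nearest[2]


examples = make_examples()
print(area(3.4, 4.4))                    # exact product of the two sides
print(learned_area(examples, 3.4, 4.4))  # nearest example is (3, 4), so this is 12
```

The lookup scans 100 stored examples to get an approximate answer to something one multiplication solves exactly, which is the joke. The trade only starts to make sense when nobody can write down the formula.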

I think the joke is that the Jr. Developer sits there looking at the screen, a picture of a cat appears, and the Jr. Developer types "cat" on the keyboard then presses enter. Boom, AI in action!

The truth behind the joke is that many companies selling "AI" have lots of humans doing tasks like this behind the scenes. "AI" is more likely to get VC money though, so it's "AI", I promise.

This is also how a lot (maybe most?) of the training data, that is, the examples, is made.

On the plus side, that's an entry-level white collar job in places like Nigeria where they're very hard to come by otherwise.

I recently heard somewhere that the joke in India is that at Western tech companies, "AI" stands for "Absent Indians".

That's simultaneously funny and depressing.

Spot on: https://www.theverge.com/features/23764584/ai-artificial-intelligence-data-notation-labor-scale-surge-remotasks-openai-chatbots?src=longreads

It's also Blockchain and uses quantum computers somehow. /s

The correct tool for calculating the area of a rectangle is an elementary school kid who really wants that A.

Exactly. Explaining to a computer what a photo of a dog looks like is super hard. Every rule you can come up with has exceptions or edge cases. But if you show it millions of dog pictures and millions of not-dog pictures it can do a pretty decent job of figuring it out when given a new image it hasn't seen before.
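As a toy illustration of "show it examples instead of writing rules", here's a minimal nearest-centroid classifier. Everything in it is an assumption for the sake of the sketch: real image classifiers learn from raw pixels with neural networks, while this one uses two invented hand-made features (snout length and ear floppiness, both scaled 0 to 1) and six made-up data points.

```python
from math import dist


def train_centroids(examples):
    """Average the feature vectors of each label. examples: [(features, label), ...]."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Pick the label whose average example is closest to the new input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up training data: (snout_length, ear_floppiness) -> label
training = [
    ((0.9, 0.8), "dog"), ((0.8, 0.6), "dog"), ((0.7, 0.9), "dog"),
    ((0.2, 0.1), "not_dog"), ((0.3, 0.2), "not_dog"), ((0.1, 0.3), "not_dog"),
]

centroids = train_centroids(training)
print(classify(centroids, (0.75, 0.70)))  # an input it has never seen before
```

Nobody wrote a rule saying what a dog is here. The only "knowledge" is the labelled examples, which is also why the labelling work discussed elsewhere in this thread matters so much.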

Another problem is people using LLMs as if they were some form of general-purpose ML.

I think it's still faster than actual solutions in some cases. I've seen someone train an ML model to animate a cloak realistically, based on an existing physics simulation of it, and it cut the processing time down to a fraction.

I suppose that's more because it's not doing a full physics simulation; it's just parroting the cloak-specific physics it observed. But still.
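For anyone curious what "parroting the physics it observed" can look like, here's a very rough sketch of the surrogate idea. It is not the cloak model: the slow simulation is a made-up falling-body integrator with drag, and the cheap stand-in is plain linear interpolation over precomputed results rather than a trained network. All names and constants are assumptions for illustration.

```python
import bisect


def simulate_fall_time(height, dt=1e-3, g=9.81, drag=0.1):
    """'Expensive' reference: Euler-integrate a falling body with quadratic drag."""
    y, v, t = height, 0.0, 0.0
    while y > 0.0:
        a = g - drag * v * v  # gravity minus drag, v measured downward
        v += a * dt
        y -= v * dt
        t += dt
    return t


def build_surrogate(simulate, lo, hi, n):
    """Run the simulation n times up front, then answer queries by interpolation."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    ys = [simulate(x) for x in xs]

    def predict(x):
        # Find the two sampled inputs that bracket x (clamped to the sampled range).
        i = min(max(bisect.bisect_right(xs, x), 1), n - 1)
        x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    return predict


predict = build_surrogate(simulate_fall_time, 1.0, 50.0, 50)
print(simulate_fall_time(17.3))  # thousands of integration steps
print(predict(17.3))             # one lookup and a few arithmetic operations
```

The surrogate is only trustworthy inside the range it was shown (heights 1 to 50 here) and knows nothing about the underlying physics, which matches the "parroting" description.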

This. I'm sure to a sufficiently intelligent observer it would still look wrong. You could probably achieve the same thing with a conventional algorithm, it's just that we haven't come up with a way to profitably exploit our limited perception quite as well as the ML does.

In the same vein, one of the big things I'm waiting on is somebody making a NN pixel shader. Even a modest network can achieve a photorealistic look very easily.