this post was submitted on 29 Aug 2024

224 points (97.1% liked)

Science Memes

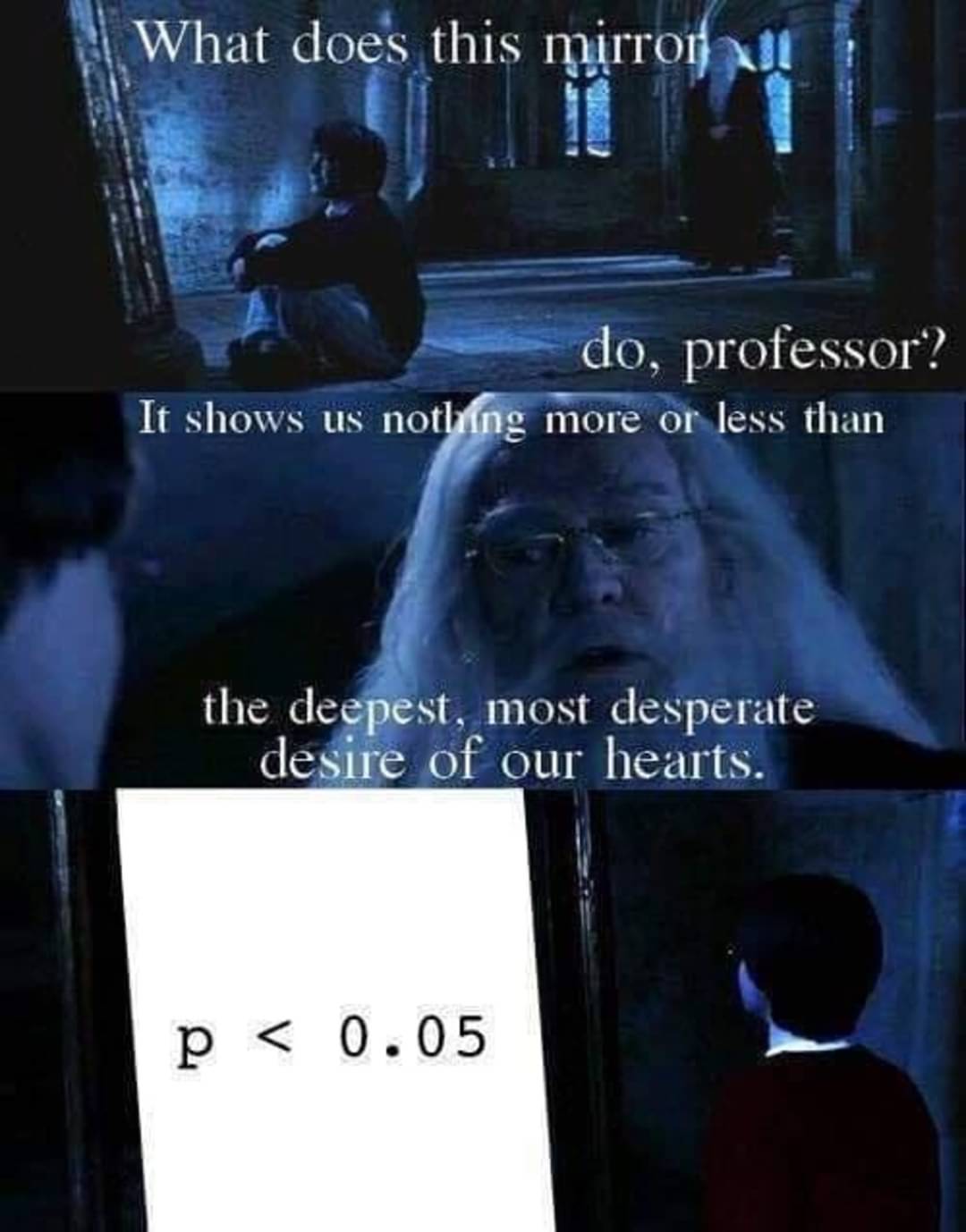

Adding onto this. p < 0.05 is the somewhat arbitrary standard that many journals have for being able to publish a result at all.

If you do an experiment to see whether X affects Y and get p = 0.05, you can say, "Either X affects Y, or it doesn't and an unlikely fluke event occurred during this experiment that had a 1 in 20 chance."
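To make the "1 in 20" part concrete, here's a rough simulation sketch. It assumes a setup that isn't from any real study: every experiment draws 30 normally distributed measurements with a true effect of exactly zero and a known standard deviation of 1, then runs a two-sided z-test. The function names, sample size, and trial count are all made up for illustration.

```python
import math
import random


def two_sided_p_value(sample, sigma=1.0):
    """Two-sided z-test p-value for 'true mean is 0', assuming sigma is known."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / math.sqrt(n))
    # For a standard normal, P(|Z| >= |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(trials=20000, n=30, alpha=0.05, seed=0):
    """Fraction of no-effect experiments that still come out with p < alpha."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = 0
    for _ in range(trials):
        # X has no effect on Y here: every measurement is pure noise around 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if two_sided_p_value(sample) < alpha:
            hits += 1
    return hits / trials


rate = false_positive_rate()
print(f"fraction of no-effect experiments with p < 0.05: {rate:.3f}")
```

Under those assumptions the printed fraction should land close to 0.05, which is the fluke rate you accept when you treat p < 0.05 as the cutoff.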

Usually, this kind of thing is publishable, but we've decided we don't want to read the paper if that number gets any higher than 1 in 20. No one wants to read the article on, "We failed to determine whether X has an effect on Y or not."

Which is sad because a lot of science is just ruling things out. We should still publish papers that say that if we do an experiment with too small of a sample, we get an inconclusive result, because that starts to put bounds on how strongly a thing gets affected, if an effect occurs at all.
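Here's a small sketch of what "putting bounds on it" can look like. The ten numbers are invented for illustration (think of them as measured differences in Y with and without X), and it assumes the measurement noise has a known standard deviation of 1, so a simple z-interval applies. `effect_bounds` is a hypothetical helper, not something from a library.

```python
import math
from statistics import NormalDist


def effect_bounds(sample, sigma=1.0, confidence=0.95):
    """Confidence interval for the mean effect, assuming sigma is known."""
    n = len(sample)
    mean = sum(sample) / n
    # z is about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sigma / math.sqrt(n)
    return mean - half_width, mean + half_width


# Hypothetical data from a small experiment (n = 10), invented for this example.
differences = [0.3, -0.5, 1.1, 0.2, -0.8, 0.9, 0.4, -0.1, 0.6, -0.3]

low, high = effect_bounds(differences)
print(f"95% interval for the effect: ({low:.2f}, {high:.2f})")
if low < 0 < high:
    print("Inconclusive: zero effect is still on the table.")
print(f"But effects much larger than {high:.2f} look unlikely.")
```

The interval straddles zero, so the result is "inconclusive," yet its upper end still tells you roughly how big the effect can be at most. A bigger sample shrinks the interval by a factor of the square root of n.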

That's a shame. Negative results are very important to the process.

Especially considering that PDFs can be just a few MB, and I doubt people will care if they're not cached locally.