The European Union (EU) has managed to unite politicians, app makers, privacy advocates, and whistleblowers in opposition to the bloc’s proposed encryption-breaking rules, known as “chat control” (officially, the CSAM Regulation, where CSAM stands for child sexual abuse material).

Thursday was slated as the day for member countries’ governments, via their EU Council ambassadors, to vote on the bill, which mandates that platforms run automated searches of private communications and obtain “forced opt-ins” from users.

However, reports on Thursday afternoon quoted unnamed EU officials as saying that “the required qualified majority would just not be met” – and that the vote was therefore canceled.

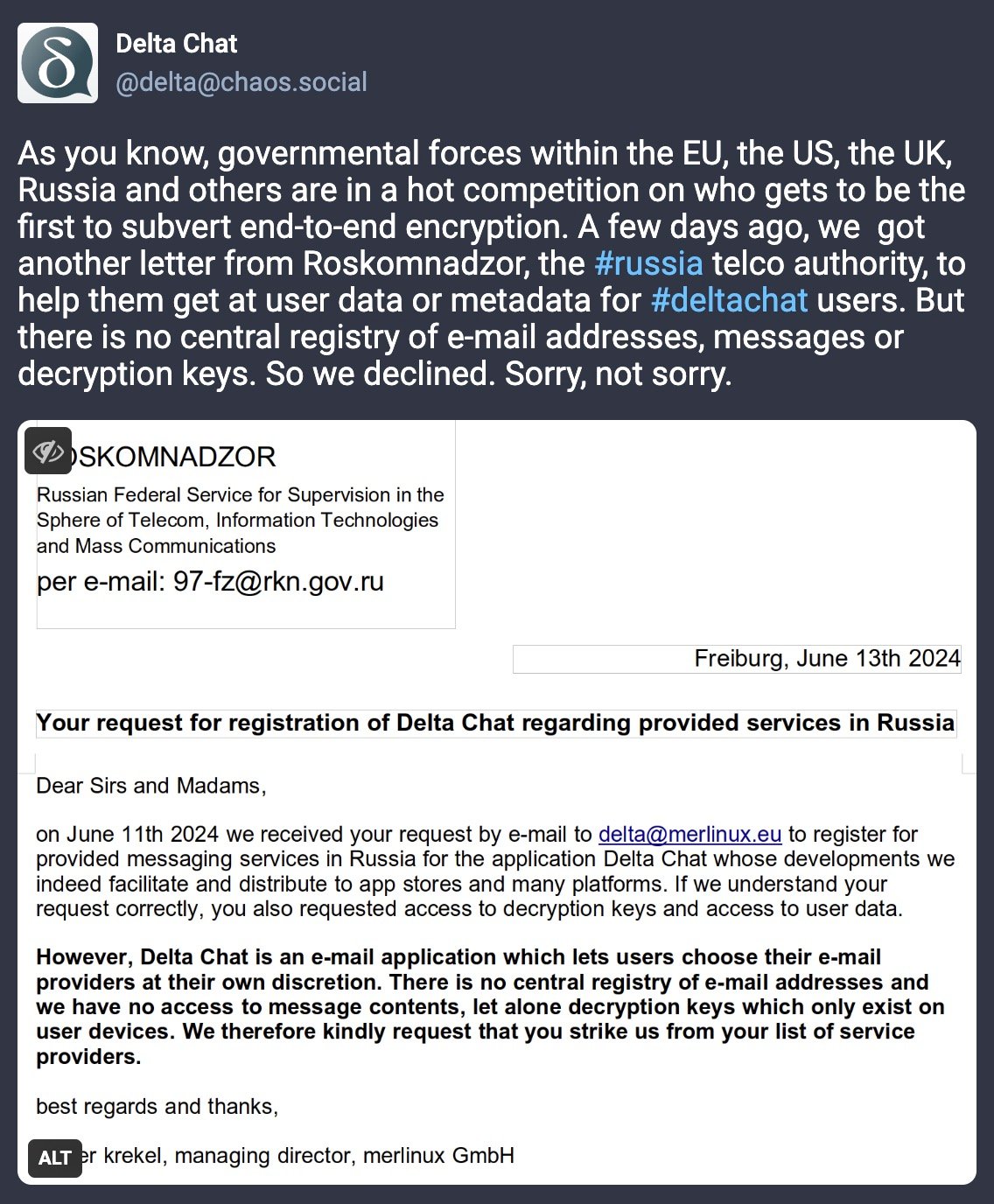

This comes after several countries, including Germany, signaled they would either oppose the bill or abstain from the vote. The gist of the opposition to the bill, which has been long in the making, is that it seeks to undermine end-to-end encryption to allow the EU to carry out indiscriminate mass surveillance of all users.

The justification here is that such drastic new measures are necessary to detect and remove CSAM from the internet – but this argument is rejected by opponents as a smokescreen for finally breaking encryption, and exposing citizens in the EU to unprecedented surveillance while stripping them of the vital technology guaranteeing online safety.

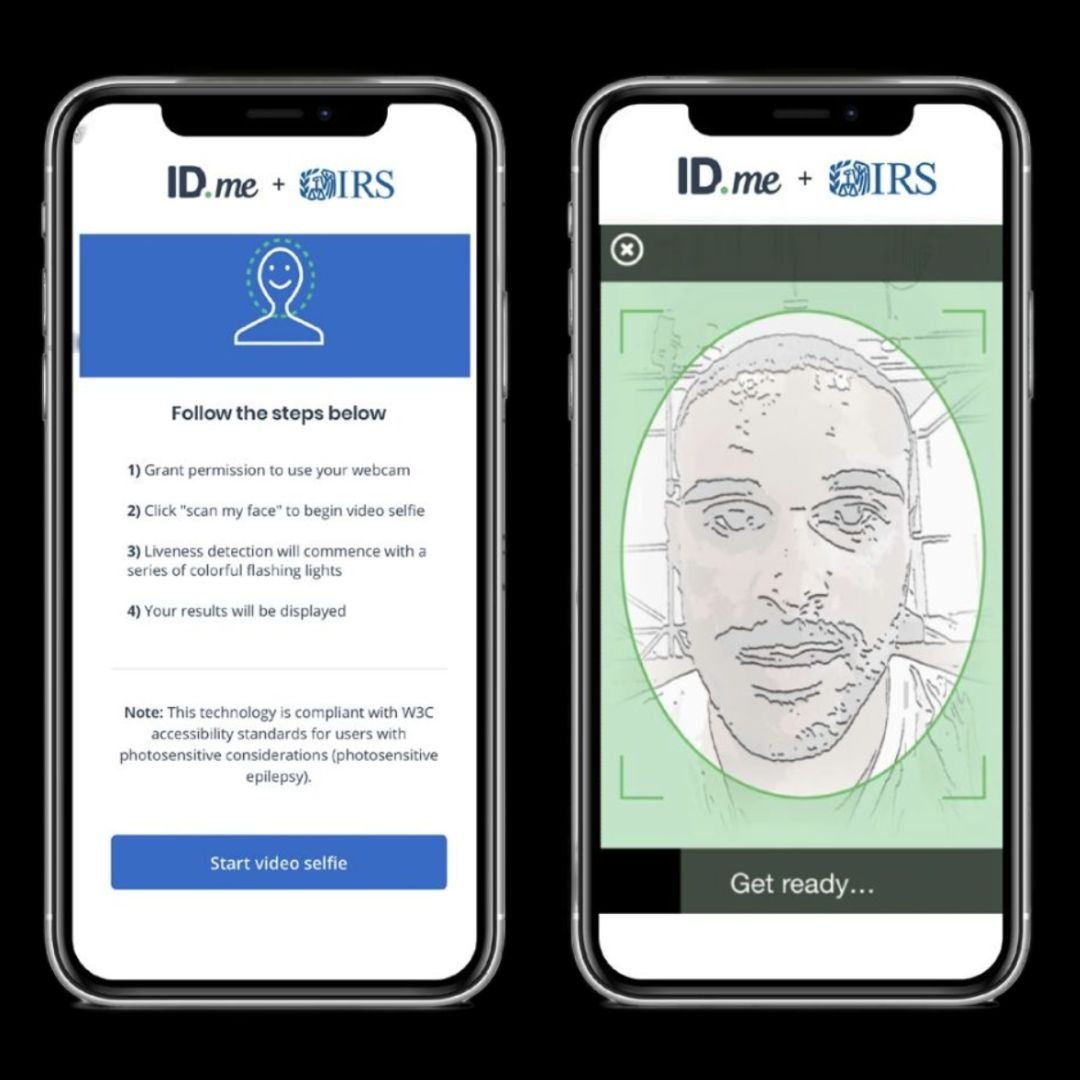

Some security- and privacy-focused apps, such as Signal and Threema, said ahead of the vote expected today that they would withdraw from the EU market if they had to include client-side scanning, i.e., automated monitoring.

WhatsApp hasn’t gone quite so far (yet), but Will Cathcart, who heads the app at Meta, didn’t mince his words in a post on X, writing that what the EU is proposing breaks encryption.

“It’s surveillance and it’s a dangerous path to go down,” Cathcart posted.

European Parliament (EP) member Patrick Breyer, who has been a vocal critic of the proposed rules and has also been involved in negotiating them on behalf of the EP, issued a statement on Wednesday warning Europeans that if “chat control” is adopted, they would lose access to common secure messengers.

“Do you really want Europe to become the world leader in bugging our smartphones and requiring blanket surveillance of the chats of millions of law-abiding Europeans? The European Parliament is convinced that this Orwellian approach will betray children and victims by inevitably failing in court,” he stated.

“We call for truly effective child protection by mandating security by design, proactive crawling to clean the web, and removal of illegal content, none of which is contained in the Belgium proposal governments will vote on tomorrow (Thursday),” Breyer added.

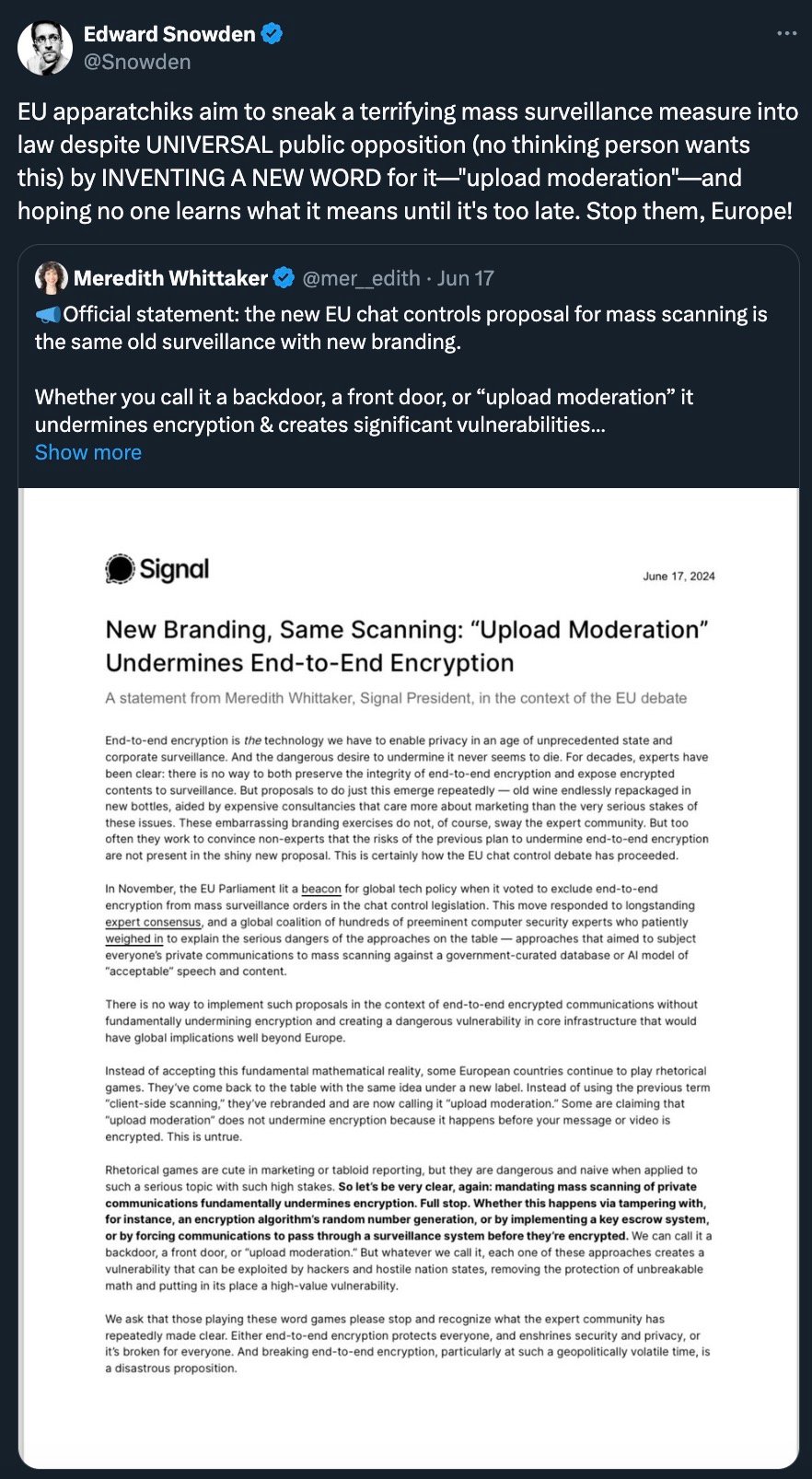

And who better to assess the danger of online surveillance than the man who revealed its extraordinary scale, Edward Snowden?

“EU apparatchiks aim to sneak a terrifying mass surveillance measure into law despite UNIVERSAL public opposition (no thinking person wants this) by INVENTING A NEW WORD for it – ‘upload moderation’ – and hoping no one learns what it means until it’s too late. Stop them, Europe!,” Snowden wrote on X.

It appears that, at least for the moment, Europe has.

nobody using endgame anymore?

https://git.hackliberty.org/Git-Mirrors/EndGame