The US Supreme Court has issued its hotly awaited decision in Murthy v. Missouri, reinforcing the government's ability to engage with social media companies about removing speech on COVID-19 and other topics. The decision reverses the rulings of two lower courts, which had found that these actions infringed upon First Amendment rights.

In a 6-3 opinion, the Court found that the plaintiffs lacked standing to sue the Biden administration. Justice Samuel Alito wrote the dissent, which was joined by fellow conservative justices Clarence Thomas and Neil Gorsuch.

We obtained a copy of the ruling for you here.

John Vecchione, Senior Litigation Counsel at the New Civil Liberties Alliance (NCLA), responded to the ruling, telling Reclaim The Net, "The majority of the Supreme Court has declared open season on Americans' free speech rights on the internet." He called the decision a "ukase" that permits the federal government to influence third-party platforms to silence dissenting voices, and accused the Court of ignoring evidence and abdicating its responsibility to hold the government accountable for actions that crush free speech. "The Government can press third parties to silence you, but the Supreme Court will not find you have standing to complain about it absent them referring to you by name apparently. This is a bad day for the First Amendment," he added.

Jenin Younes, a litigation counsel at NCLA, echoed Vecchione's sentiments, calling the decision a "travesty for the First Amendment" and a setback for the pursuit of scientific knowledge. "The Court has green-lighted the government's unprecedented censorship regime," Younes said, voicing concern that the ruling could stifle expert voices on crucial public health and policy issues.

Dr. Jayanta Bhattacharya, an NCLA client and a professor at Stanford University, criticized the Biden administration's regulatory actions during the COVID-19 pandemic, arguing that they led to "irrational policies." "Free speech is essential to science, to public health, and to good health," he said, calling for congressional action and a public movement to restore and protect free speech rights in America.

The ruling is a setback for the many who argue that the administration, together with federal agencies, has been pushing social media platforms to suppress speech by labeling it misinformation.

Previously, a federal judge in Louisiana had criticized the federal agencies for acting like an Orwellian "Ministry of Truth." During oral arguments before the Supreme Court, however, the government argued that its requests for social media platforms to address "misinformation" more rigorously did not constitute threats or imply any legal repercussions, despite the looming threat of antitrust action against Big Tech.

Here are the key points and specific quotes from the decision:

Lack of Article III Standing: The Supreme Court held that neither the individual nor the state plaintiffs established the necessary standing to seek an injunction against government defendants. The decision emphasizes the fundamental requirement of a "case or controversy" under Article III, which necessitates that plaintiffs demonstrate an injury that is "concrete, particularized, and actual or imminent; fairly traceable to the challenged action; and redressable by a favorable ruling" (Clapper v. Amnesty Int'l USA, 568 U. S. 398, 409).

Inadequate Traceability and Future Harm: The plaintiffs failed to convincingly link the past restrictions on their social media content to the government's communications with the platforms. The decision critiques the Fifth Circuit's approach, noting that the evidence did not show that government actions directly caused the platforms' moderation decisions. The Court pointed out: "Because standing is not dispensed in gross, plaintiffs must demonstrate standing for each claim they press" against each defendant, "and for each form of relief they seek" (TransUnion LLC v. Ramirez, 594 U. S. 413, 431). Tracing is especially difficult because the platforms had "independent incentives to moderate content and often exercised their own judgment."

Absence of Direct Causation: The Court noted that the platforms began suppressing COVID-19 content before the defendants' challenged communications began, indicating a lack of direct government coercion: "Complicating the plaintiffs' effort to demonstrate that each platform acted due to Government coercion, rather than its own judgment, is the fact that the platforms began to suppress the plaintiffs' COVID–19 content before the defendants' challenged communications started."

Redressability and Ongoing Harm: The plaintiffs argued they suffered from ongoing censorship, but the Court found this unpersuasive. The platforms continued their moderation practices even as government communication subsided, suggesting that future government actions were unlikely to alter these practices: "Without evidence of continued pressure from the defendants, the platforms remain free to enforce, or not to enforce, their policies—even those tainted by initial governmental coercion."

"Right to Listen" Theory Rejected: The Court rejected the plaintiffs' "right to listen" argument, stating that the First Amendment interest in receiving information does not automatically confer standing to challenge someone else's censorship: "While the Court has recognized a 'First Amendment right to receive information and ideas,' the Court has identified a cognizable injury only where the listener has a concrete, specific connection to the speaker."

Justice Alito's dissent argues that federal officials violated the First Amendment by coercing social media platforms such as Facebook to suppress certain viewpoints about COVID-19, which amounted to unconstitutional censorship. Alito emphasizes that the government cannot use coercion to suppress speech, a principle at the core of the First Amendment, which exists to protect free speech, especially speech essential to democratic self-government and to public discourse on significant issues such as public health.

Here are the key points of Justice Alito's stance:

Extensive Government Coercion: Alito describes a "far-reaching and widespread censorship campaign" by high-ranking officials, which he sees as a serious threat to free speech, asserting that these actions went beyond mere suggestion or influence into the realm of coercion. He states, "This is one of the most important free speech cases to reach this Court in years."

Impact on Plaintiffs: The dissent underscores that this government coercion affected various plaintiffs, including public health officials from states, medical professors, and others who wished to share views divergent from mainstream COVID-19 narratives. Alito notes, "Victims of the campaign perceived by the lower courts brought this action to ensure that the Government did not continue to coerce social media platforms to suppress speech."

Legal Analysis: Alito criticizes the majority's dismissal based on standing, arguing that the plaintiffs demonstrated both past and ongoing injuries caused by the government's actions, which were likely to continue without court intervention. He argues, "These past and threatened future injuries were caused by and traceable to censorship that the officials coerced."

Evidence of Coercion: The dissent points out specific instances where government officials pressured Facebook, suggesting significant consequences if the platform failed to comply with their demands to control misinformation. This included threats related to antitrust actions and other regulatory measures. Alito highlights, "Not surprisingly, these efforts bore fruit. Facebook adopted new rules that better conformed to the officials' wishes."

Potential for Future Abuse: Alito warns of the dangerous precedent set by the Court's refusal to address these issues, suggesting that it could empower future government officials to manipulate public discourse covertly. He cautions, "The Court, however, shirks that duty and thus permits the successful campaign of coercion in this case to stand as an attractive model for future officials who want to control what the people say, hear, and think."

Importance of Free Speech: He emphasizes the critical role of free speech in a democratic society, particularly for speech about public health and safety during the pandemic, and criticizes the government's efforts to suppress such speech through third parties like social media platforms. Alito asserts, "Freedom of speech serves many valuable purposes, but its most important role is protection of speech that is essential to democratic self-government."

The case centered on allegations that federal officials, including US Surgeon General Dr. Vivek Murthy and many others in the Biden administration, colluded with major technology companies to suppress speech on social media platforms. The plaintiffs argued that this collaboration targeted content labeled as "misinformation," particularly concerning COVID-19 and political matters, effectively silencing dissenting voices.

The plaintiffs claim that this coordination represents a direct violation of their First Amendment rights. They argue that while private companies can set their own content policies, government pressure that leads to the suppression of lawful speech constitutes unconstitutional censorship by proxy.

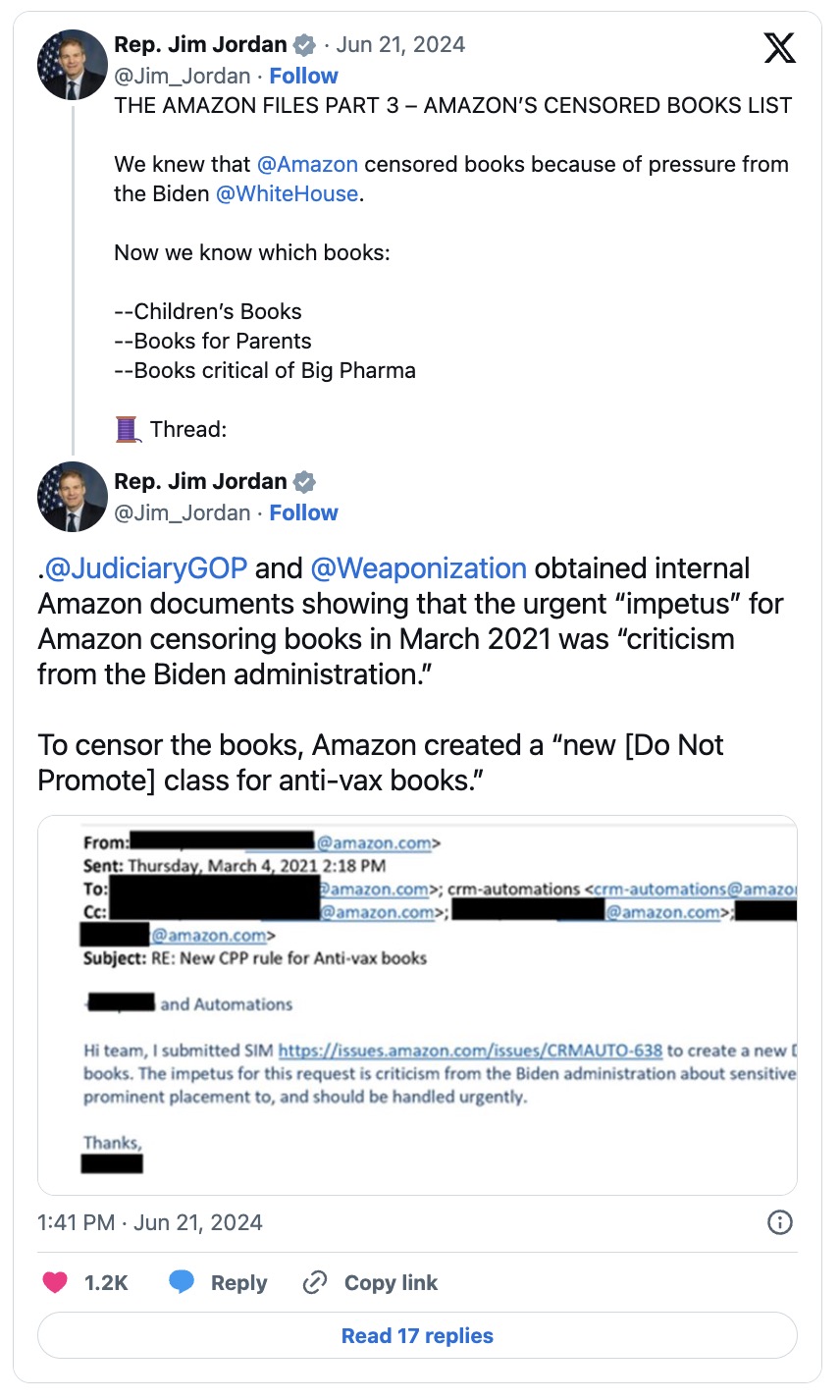

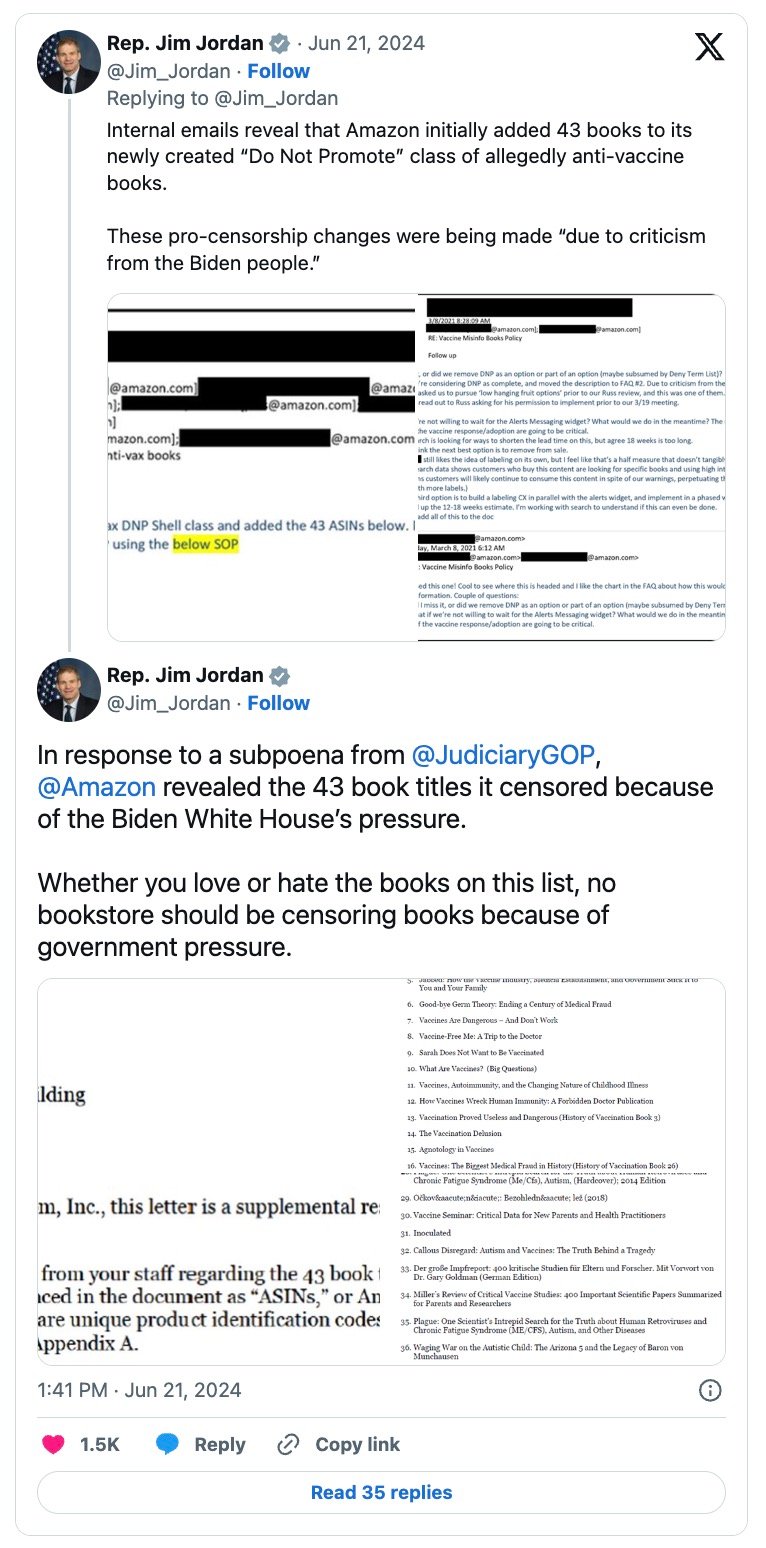

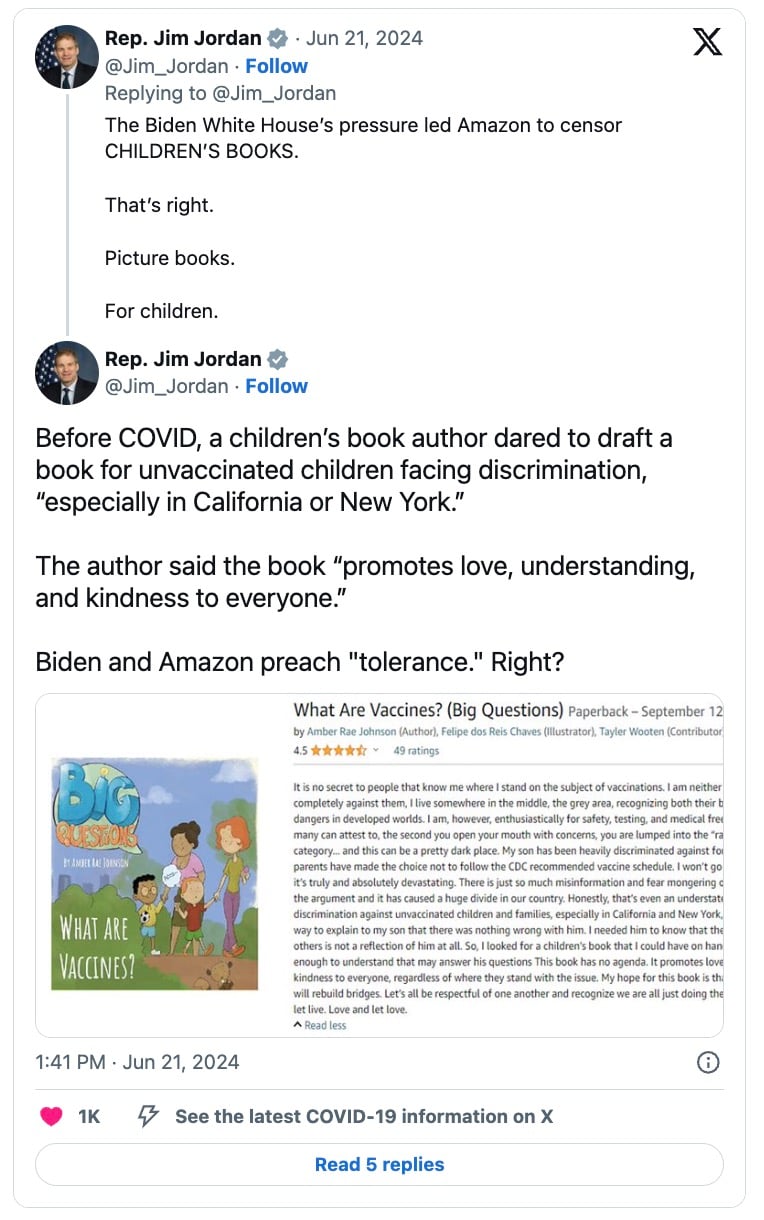

The government's campaign against what it called "misinformation," particularly during the COVID-19 pandemic, has been extensive, regardless of whether the targeted statements turned out to be true.

However, Murthy v. Missouri exposed a darker side to these initiatives, in which government officials allegedly overstepped their bounds by coercing tech companies to silence specific narratives.

Communications presented in court, including emails and meeting records, suggest a troubling pattern: government officials not only requested but demanded that tech companies remove or restrict certain content. The tone and content of these communications often implied serious consequences for non-compliance, raising questions about the extent to which these actions were voluntary versus compelled.

Tech companies like Facebook, Twitter, and Google have become the de facto public squares of the modern era, wielding immense power over what information is accessible to the public. Their content moderation policies, while designed to combat harmful content, have also been criticized for their lack of transparency and potential biases.

In this case, plaintiffs argued that these companies, under significant government pressure, went beyond their standard moderation practices. They allegedly engaged in the removal, suppression, and demotion of content that, although controversial, was not illegal. This raises a critical issue: the thin line between moderation and censorship, especially when influenced by government directives.

The Supreme Court ruling has significant implications for the relationship between government and private social media platforms, as well as for the legal frameworks that govern free speech and content moderation.

Here are some of the broader impacts this ruling may have:

Clarification on Government Influence and Private Action: Although the Court resolved the case on standing rather than on the merits, its emphasis on the platforms' independent judgment suggests that governmental encouragement or indirect pressure will not easily be treated as transforming private content moderation into state action. This could make it more challenging for future plaintiffs to claim that content moderation decisions, influenced indirectly by government suggestions or pressure, are tantamount to governmental censorship.

Stricter Standards for Proving Standing: The Supreme Court's emphasis on the necessity of concrete and particularized injuries directly traceable to the challenged government action sets a high bar for future litigants. Plaintiffs must now provide clear evidence that directly links government actions to the moderation practices that allegedly infringe on their speech rights. This could lead to fewer successful challenges against perceived government-induced censorship on digital platforms.

Impact on Content Moderation Policies: Social media platforms may feel more secure in enforcing their content moderation policies without fear of being seen as conduits for state action, as long as their decisions can be justified as independent from direct government coercion. This could lead to more assertive actions by platforms in moderating content deemed harmful or misleading, especially in critical areas like public health and election integrity.

Influence on Public Discourse: By affirming the autonomy of social media platforms in content moderation, the ruling potentially influences the nature of public discourse on these platforms. While platforms may continue to engage with government entities on issues like misinformation, they might do so with greater caution and transparency to avoid allegations of government coercion.

Future Legal Challenges and Policy Discussions: The ruling could prompt legislative responses, as policymakers may seek to address perceived gaps between government interests in combating misinformation and the protection of free speech on digital platforms. This may lead to new laws or regulations that more explicitly define the boundaries of acceptable government interaction with private companies in managing online content.

Broader Implications for Digital Rights and Privacy: The decision might also influence how digital rights and privacy are perceived and protected, particularly regarding how data from social media platforms is used or shared with government entities. This could lead to heightened scrutiny and potentially stricter guidelines to protect user data from being used in ways that could impinge on personal freedoms.

Overall, the Murthy v. Missouri ruling will likely serve as a critical reference point in ongoing debates about the government's ability to influence and shut down speech.