🤖 Happy FOSAI Friday! 🚀

Friday, September 22, 2023

HyperTech News Report #0001

Hello Everyone!

This series is a new vehicle for FOSAI community news reports. In these posts I'll go over projects or news I stumble across week over week. I will try to keep Fridays consistent with this series, covering most of what I have been covering already, but at a regular cadence. This week, I am going to do my best to catch us up on a few old (and new) hot topics you may or may not have heard about already.

Community Changelog

Image of the Week

A Stable Diffusion + ControlNet image garnered a ton of attention on social media this past week. It has brought more recognition to what these tools can do and helped shed a more positive light on the capabilities of generative models.

Introducing HyperTech

HyperionTechnologies a.k.a. HyperTech or HYPERION - a sci-fi company.

HyperTech Workshop (V0.1.0)

I am excited to announce my technology company: HyperTech. The first project of HyperionTechnologies is a digital workshop that comes in the form of a GitHub repo template for AI/ML/DL developers. HyperTech is a for-fun sci-fi company I started to explore AI development (among other emerging technologies I find curious and interesting). It is a satirical corpo sandbox I have designed around my personal journey inside and outside of the FOSAI community, full of highly experimental projects and workflows. I will be using this company and its setting, narrative, and themes to drive some of the future (and totally optional) content of our community. Any tooling, templates, or examples made along the way are entirely yours to learn from or reverse engineer for your own purposes or amusement. I'll be writing a dedicated post about HyperTech later this weekend, so keep an eye out for that if you're curious. The future is now. The future is bright. The future is HYPERION. (Don't take this project too seriously.)

New GGUF Models

Within the last month or so, llama.cpp has begun standardizing on a new model format, GGUF (.gguf files), which is much more optimized than its now-legacy (and deprecated) predecessor, GGML. This is a big deal for anyone running GGML models, since GGUF is basically superior in every way. Check out llama.cpp's notes about this change on their official GitHub. I have used a few GGUF models myself and have found them much more performant than their GGML counterparts. TheBloke has already converted many of his older models into this new format (which is compatible with anything built on llama.cpp).
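To make the format change a little more concrete, here is a minimal sketch of how a loader might recognize a GGUF file from its fixed header. It assumes the layout described in the GGUF specification in the ggml repository: a 4-byte `GGUF` magic, a little-endian `uint32` version, then the tensor count and metadata key/value count, which were 32-bit in version 1 and 64-bit from version 2 onward. `read_gguf_header` and `GGUFHeader` are hypothetical names for illustration, not part of llama.cpp.

```python
import io
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Parse the fixed-size header at the start of a GGUF file (hypothetical helper)."""
    magic = f.read(4)
    if magic != b"GGUF":
        # Legacy GGML-family files use different magic numbers, so they fail here.
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))
    if version == 1:
        # Version 1 stored both counts as 32-bit integers.
        tensor_count, kv_count = struct.unpack("<II", f.read(8))
    else:
        # Version 2 and later widened both counts to 64-bit integers.
        tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, kv_count)


if __name__ == "__main__":
    # Build a synthetic version-3 header in memory instead of opening a real model file.
    fake = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 291, 24))
    print(read_gguf_header(fake))
    # GGUFHeader(version=3, tensor_count=291, metadata_kv_count=24)
```

In practice you would pass `open("model.gguf", "rb")` instead of the in-memory buffer; the point is that the magic and version make the file self-identifying, which the older GGML variants handled far less cleanly.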

More About GGUF:

It is a successor file format to GGML, GGMF and GGJT, and is designed to be unambiguous by containing all the information needed to load a model. It is also designed to be extensible, so that new features can be added to GGML without breaking compatibility with older models. Basically:

1. No more breaking changes.
2. Support for non-llama models (falcon, rwkv, bloom, etc.).
3. No more fiddling around with rope-freq-base, rope-freq-scale, gqa, and rms-norm-eps. Prompt formats could also be set automatically.
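Point 3 follows from how GGUF stores hyperparameters: they live in a typed key/value metadata section right after the header, so a loader can read values such as the RoPE frequency base instead of asking you for flags. The sketch below reads that section under stated assumptions: the GGUF v2+ layout (little-endian, 64-bit string lengths), the value-type ids listed in the GGUF specification, and keys like `general.architecture` and `llama.rope.freq_base` used only as illustrations. `read_metadata` is a hypothetical helper, not llama.cpp's API.

```python
import io
import struct
from typing import Any, BinaryIO, Dict

# GGUF value-type ids for scalar types -> little-endian struct format codes.
_SCALAR_FMT = {
    0: "<B",   # UINT8
    1: "<b",   # INT8
    2: "<H",   # UINT16
    3: "<h",   # INT16
    4: "<I",   # UINT32
    5: "<i",   # INT32
    6: "<f",   # FLOAT32
    7: "<?",   # BOOL
    10: "<Q",  # UINT64
    11: "<q",  # INT64
    12: "<d",  # FLOAT64
}
_STRING, _ARRAY = 8, 9


def _read(f: BinaryIO, fmt: str) -> Any:
    return struct.unpack(fmt, f.read(struct.calcsize(fmt)))[0]


def _read_string(f: BinaryIO) -> str:
    # Strings are a uint64 byte length followed by UTF-8 bytes (no terminator).
    length = _read(f, "<Q")
    return f.read(length).decode("utf-8")


def _read_value(f: BinaryIO, vtype: int) -> Any:
    if vtype == _STRING:
        return _read_string(f)
    if vtype == _ARRAY:
        # Arrays carry their element type and length, then the elements themselves.
        elem_type = _read(f, "<I")
        count = _read(f, "<Q")
        return [_read_value(f, elem_type) for _ in range(count)]
    return _read(f, _SCALAR_FMT[vtype])


def read_metadata(f: BinaryIO, kv_count: int) -> Dict[str, Any]:
    """Read kv_count key/value pairs that follow the GGUF header (hypothetical helper)."""
    meta: Dict[str, Any] = {}
    for _ in range(kv_count):
        key = _read_string(f)
        vtype = _read(f, "<I")
        meta[key] = _read_value(f, vtype)
    return meta


if __name__ == "__main__":
    # Synthetic metadata section with a single string key/value (type id 8).
    key, value = b"general.architecture", b"llama"
    blob = (struct.pack("<Q", len(key)) + key + struct.pack("<I", _STRING)
            + struct.pack("<Q", len(value)) + value)
    print(read_metadata(io.BytesIO(blob), 1))  # {'general.architecture': 'llama'}
```

Because every value carries its own type id, a reader that does not recognize a key can still skip past it, which is what lets new fields be added without breaking older loaders.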

Falcon 180B

Many of you have probably already heard about this, but Falcon 180B was recently announced, and since I haven't covered it here yet, it's worth mentioning in this post. Check out the full article about its release on Hugging Face. I can't wait to see what comes next! This will open up a lot of doors for us to explore.

Today, we're excited to welcome TII's Falcon 180B to HuggingFace! Falcon 180B sets a new state-of-the-art for open models. It is the largest openly available language model, with 180 billion parameters, and was trained on a massive 3.5 trillion tokens using TII's RefinedWeb dataset. This represents the longest single-epoch pretraining for an open model. The dataset for Falcon 180B consists predominantly of web data from RefinedWeb (~85%). In addition, it has been trained on a mix of curated data such as conversations, technical papers, and a small fraction of code (~3%). This pretraining dataset is big enough that even 3.5 trillion tokens constitute less than an epoch.

The released chat model is fine-tuned on chat and instruction datasets with a mix of several large-scale conversational datasets.

‼️ Commercial Usage: Falcon 180b can be commercially used but under very restrictive conditions, excluding any "hosting use". We recommend to check the license and consult your legal team if you are interested in using it for commercial purposes.

You can find the model on the Hugging Face Hub (base and chat model) and interact with the model on the Falcon Chat Demo Space.
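For anyone hoping to run Falcon 180B locally, the parameter count translates fairly directly into memory: weight memory is roughly parameters × bits per weight ÷ 8 bytes. The helper below is a back-of-the-envelope sketch (`weight_memory_gb` is a hypothetical name). It counts the weights only and ignores the KV cache, activations, and the per-block scale overhead that real quantization formats add, so treat its numbers as lower bounds.

```python
def weight_memory_gb(n_params: int, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = n_params * bits_per_weight // 8
    return total_bytes / 10**9


if __name__ == "__main__":
    falcon_180b = 180_000_000_000  # nominal parameter count from the announcement
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(falcon_180b, bits):.0f} GB")
```

This prints about 360 GB at 16-bit, 180 GB at 8-bit, and 90 GB at 4-bit, which is why quantized formats matter so much for models at this scale.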

Llama 3 Rumors

Speaking of big open-source models, Llama 3 is rumored to be in training or development. Llama 2 was clearly an improvement over its predecessor, and I wonder how Llama 3 and 4 will stack up in this race to AGI. I sometimes forget that we're still early to this party. At this rate of development, I believe we're bound to see AGI within the decade.

Meta plans to rival GPT-4 with a rumored free Llama 3

- According to an early rumor, Meta is working on Llama 3, which is intended to compete with GPT-4, but will remain largely free under the Llama license.
- Jason Wei, an engineer associated with OpenAI, has indicated that Meta possesses the computational capacity to train Llama 3 to a level comparable to GPT-4. Furthermore, Wei suggests that the feasibility of training Llama 4 is already within reach.
- Despite Wei's credibility, it's important to acknowledge the possibility of inaccuracies in his statements or the potential for shifts in these plans.

DALM

I recently stumbled across DALM, a new domain-adapted language modeling toolkit that is meant to enable a workflow for training a retrieval-augmented generation (RAG) pipeline end to end. According to their results, the DALM-specific training process leads to much higher response quality in retrieval-augmented generation. I haven't had a chance to tinker with it much yet, but I'd keep an eye on it if you're working with RAG workflows.

DALM Manifesto:

A great rift has emerged between general LLMs and the vector stores that are providing them with contextual information. The unification of these systems is an important step in grounding AI systems in efficient, factual domains, where they are utilized not only for their generality, but for their specificity and uniqueness. To this end, we are excited to open source the Arcee Domain Adapted Language Model (DALM) toolkit for developers to build on top of our Arcee open source Domain Pretrained (DPT) LLMs. We believe that our efforts will help as we begin next phase of language modeling, where organizations deeply tailor AI to operate according to their unique intellectual property and worldview.

For the first time in the literature, we modified the initial RAG-end2end model (TACL paper, HuggingFace implementation) to work with decoder-only language models like Llama, Falcon, or GPT. We also incorporated the in-batch negative concept alongside the RAG's marginalization to make the entire process efficient.

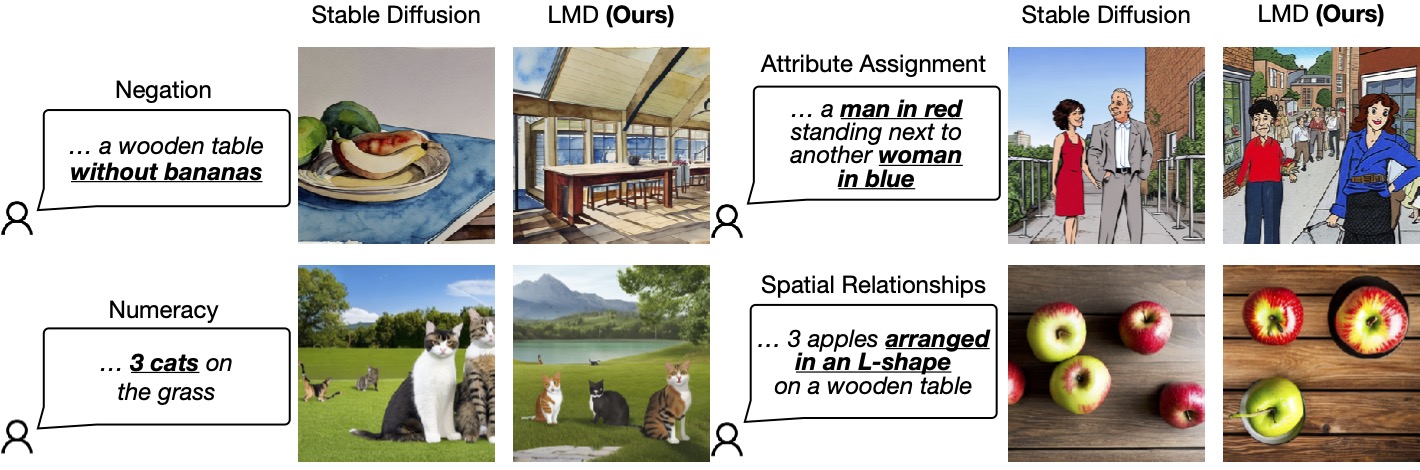

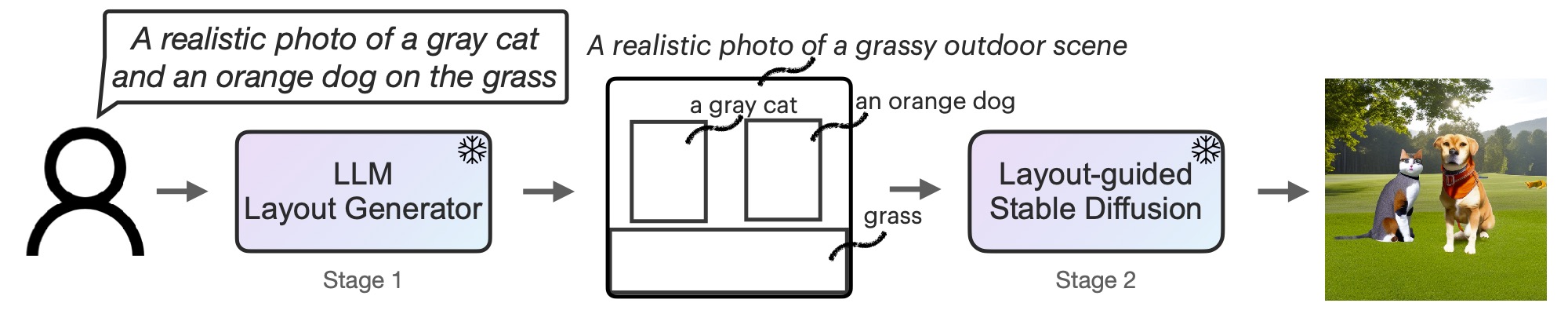

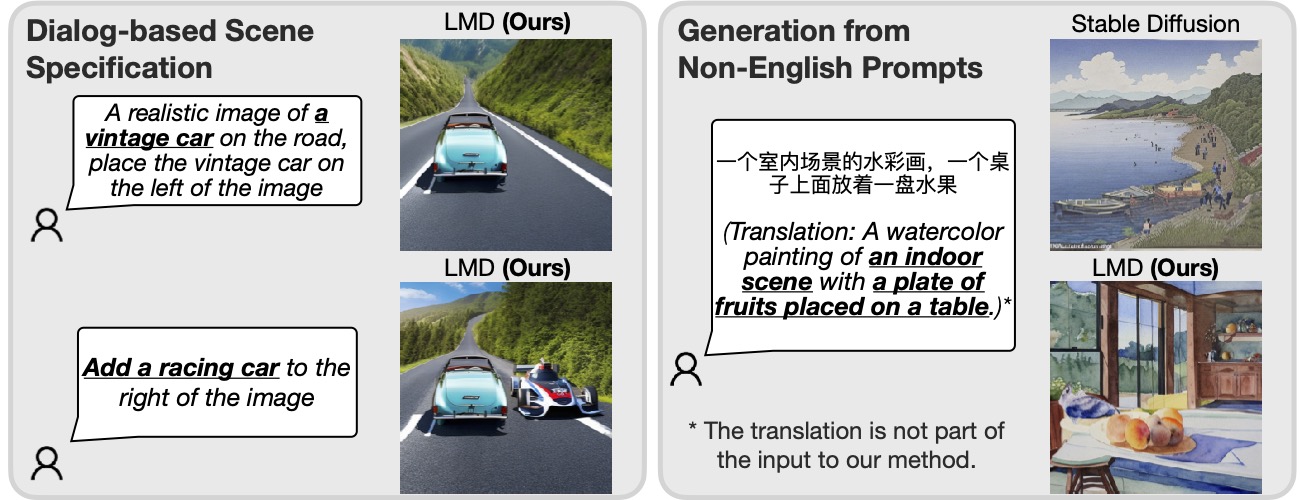
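For readers new to the two ideas the DALM notes mention, here is a minimal pure-Python sketch of each, written from the general RAG and dense-retrieval literature rather than from DALM's code. `rag_marginal_nll` marginalizes the generator's likelihood over the top-k retrieved documents, weighting each by the retriever's softmax probability, that is, the negative log of the sum over j of p(z_j | x) · p(y | x, z_j). `in_batch_negative_loss` treats every other passage in a batch as a negative for a given query. Both names and the plain-list embeddings are hypothetical simplifications; a real pipeline would compute these on framework tensors so gradients flow into both the retriever and the generator.

```python
import math
from typing import List, Sequence


def _logsumexp(xs: Sequence[float]) -> float:
    # Numerically stable log(sum(exp(x))).
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def rag_marginal_nll(doc_scores: Sequence[float], gen_logprobs: Sequence[float]) -> float:
    """-log sum_j p(z_j | x) * p(y | x, z_j) over the k retrieved documents.

    doc_scores:   retriever scores for each retrieved document z_j
    gen_logprobs: generator log-likelihood log p(y | x, z_j) for each z_j
    """
    log_norm = _logsumexp(doc_scores)
    doc_logprobs = [s - log_norm for s in doc_scores]  # log-softmax over the k docs
    return -_logsumexp([d + g for d, g in zip(doc_logprobs, gen_logprobs)])


def in_batch_negative_loss(queries: List[List[float]], passages: List[List[float]]) -> float:
    """Contrastive loss where passages[i] is the positive for queries[i]
    and every other passage in the batch serves as a negative."""
    total = 0.0
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, p)) for p in passages]  # dot-product similarity
        total += _logsumexp(scores) - scores[i]  # cross-entropy with target index i
    return total / len(queries)
```

As a quick sanity check, two equally scored documents with generator likelihoods 0.8 and 0.2 give a marginal likelihood of 0.5 · 0.8 + 0.5 · 0.2 = 0.5, so `rag_marginal_nll([0.0, 0.0], [math.log(0.8), math.log(0.2)])` returns log 2. Reusing the batch's other passages as negatives is what makes the contrastive step cheap: no extra retrieval is needed to find them.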

DALL-E 3

OpenAI announced DALL-E 3, which will be natively integrated into ChatGPT. This means users should be able to iterate on images and their features in natural language, adjusting the output from the same chat interface throughout a conversation. This will let many users seamlessly incorporate image generation into their chat workflows.

I think this is huge, mostly because it illustrates a new technique that removes some of the barriers prompt engineers have had to work around (it interprets prompts differently than other diffusion models do). Not to mention you are permitted to keep, sell, and commercialize any image DALL-E generates.

I am curious to see whether open-source workflows can follow a similar approach, with iterative design workflows that integrate seamlessly with a chat interface. Paired with manual tooling like ControlNet, that could spark a lot of creativity. Don't get me wrong, sometimes I really like manual and node-based workflows, but I believe semantic computation is the future. Regardless of how 'open' OpenAI truly is, these breakthroughs help chart the path forward for everyone else still catching up.

More About DALL-E 3:

DALL·E 3 is now in research preview, and will be available to ChatGPT Plus and Enterprise customers in October, via the API and in Labs later this fall. Modern text-to-image systems have a tendency to ignore words or descriptions, forcing users to learn prompt engineering. DALL·E 3 represents a leap forward in our ability to generate images that exactly adhere to the text you provide. DALL·E 3 is built natively on ChatGPT, which lets you use ChatGPT as a brainstorming partner and refiner of your prompts. Just ask ChatGPT what you want to see in anything from a simple sentence to a detailed paragraph. When prompted with an idea, ChatGPT will automatically generate tailored, detailed prompts for DALL·E 3 that bring your idea to life. If you like a particular image, but it’s not quite right, you can ask ChatGPT to make tweaks with just a few words.

DALL·E 3 will be available to ChatGPT Plus and Enterprise customers in early October. As with DALL·E 2, the images you create with DALL·E 3 are yours to use and you don't need our permission to reprint, sell or merchandise them.

Author's Note

This post was authored by the moderator of our FOSAI community, Blaed. I make games, produce music, write about tech, and develop free open-source artificial intelligence (FOSAI) for fun. I do most of this through a company called HyperionTechnologies a.k.a. HyperTech or HYPERION - a sci-fi company.

Thanks for Reading!

If you found anything about this post interesting, consider subscribing to our FOSAI community, where I do my best to keep you informed about free open-source artificial intelligence as it emerges in real time.

Our community is quickly becoming a living time capsule thanks to the rapid innovation of this field. If you've gotten this far, I cordially invite you to join us and dance along the path to AGI and the great unknown.

Come on in, the water is fine, the gates are wide open! You're still early to the party, so there is still plenty of wonder and discussion yet to be had in our little corner of the digiverse.

This post was written by a human. For other humans. About machines. Who work for humans for other machines. At least for now...

Until next time!

Blaed

This is all very good feedback! I appreciate everyone who has commented so far. I will leave this post pinned for the remainder of the year for anyone (new member or old) to share their thoughts and what else you think we should explore next as a FOSAI community.