this post was submitted on 09 Aug 2023


Free Open-Source Artificial Intelligence


This is really interesting, thanks for sharing!

For our hardware heads, do you think you could share a spec sheet of your device?

Love seeing 70B-parameter models being run at home! Imagine where we'll be a year from now.

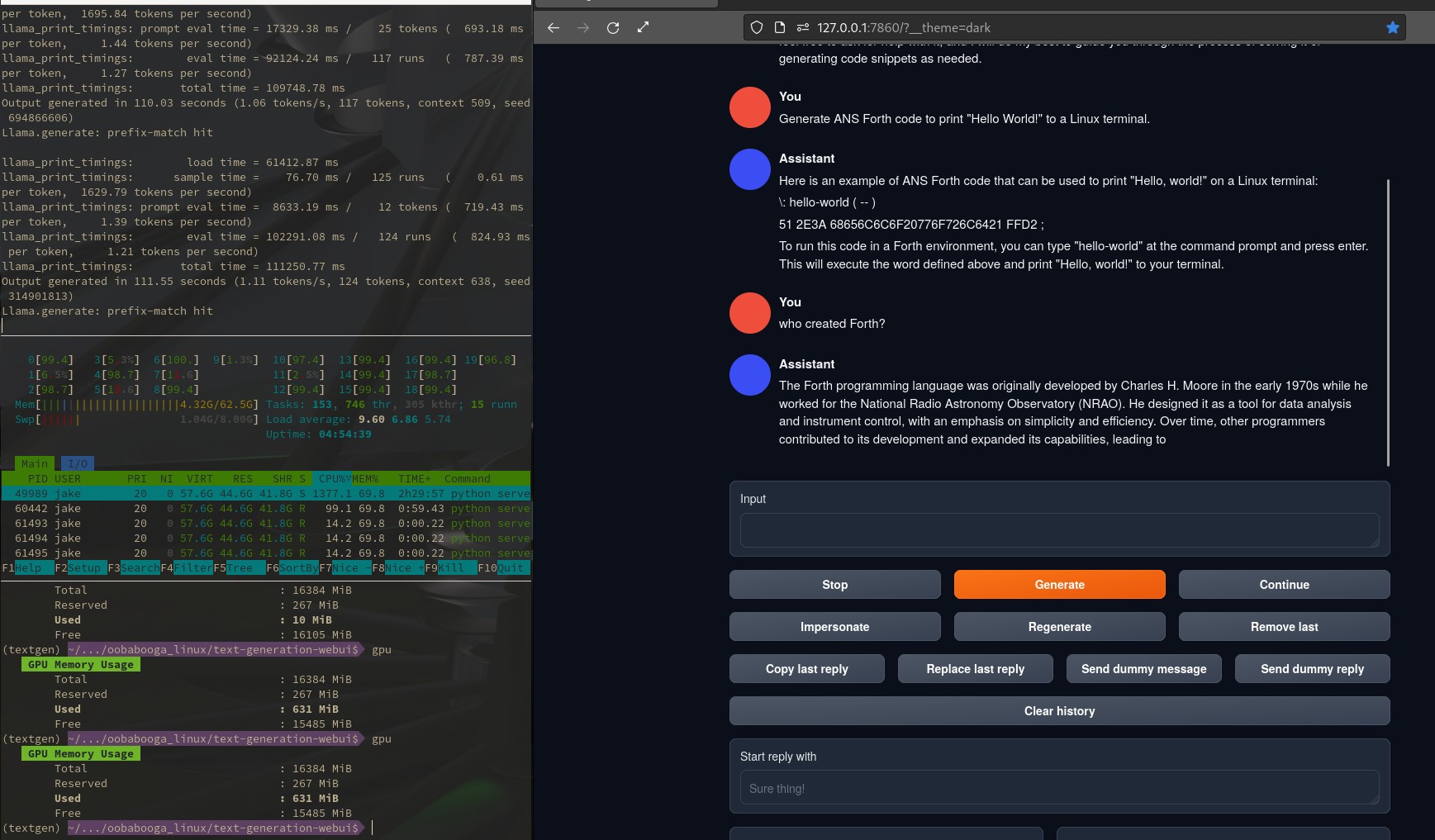

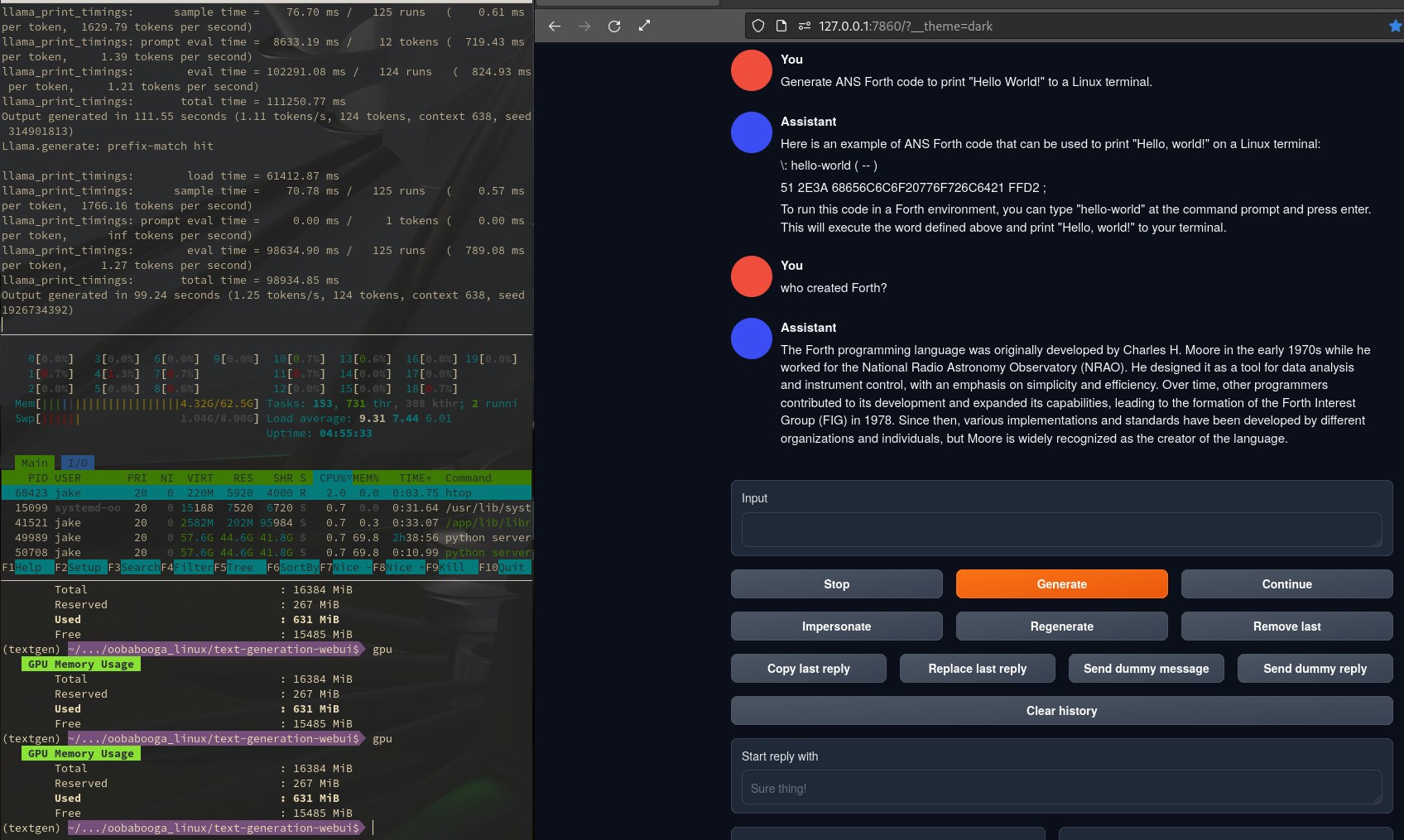

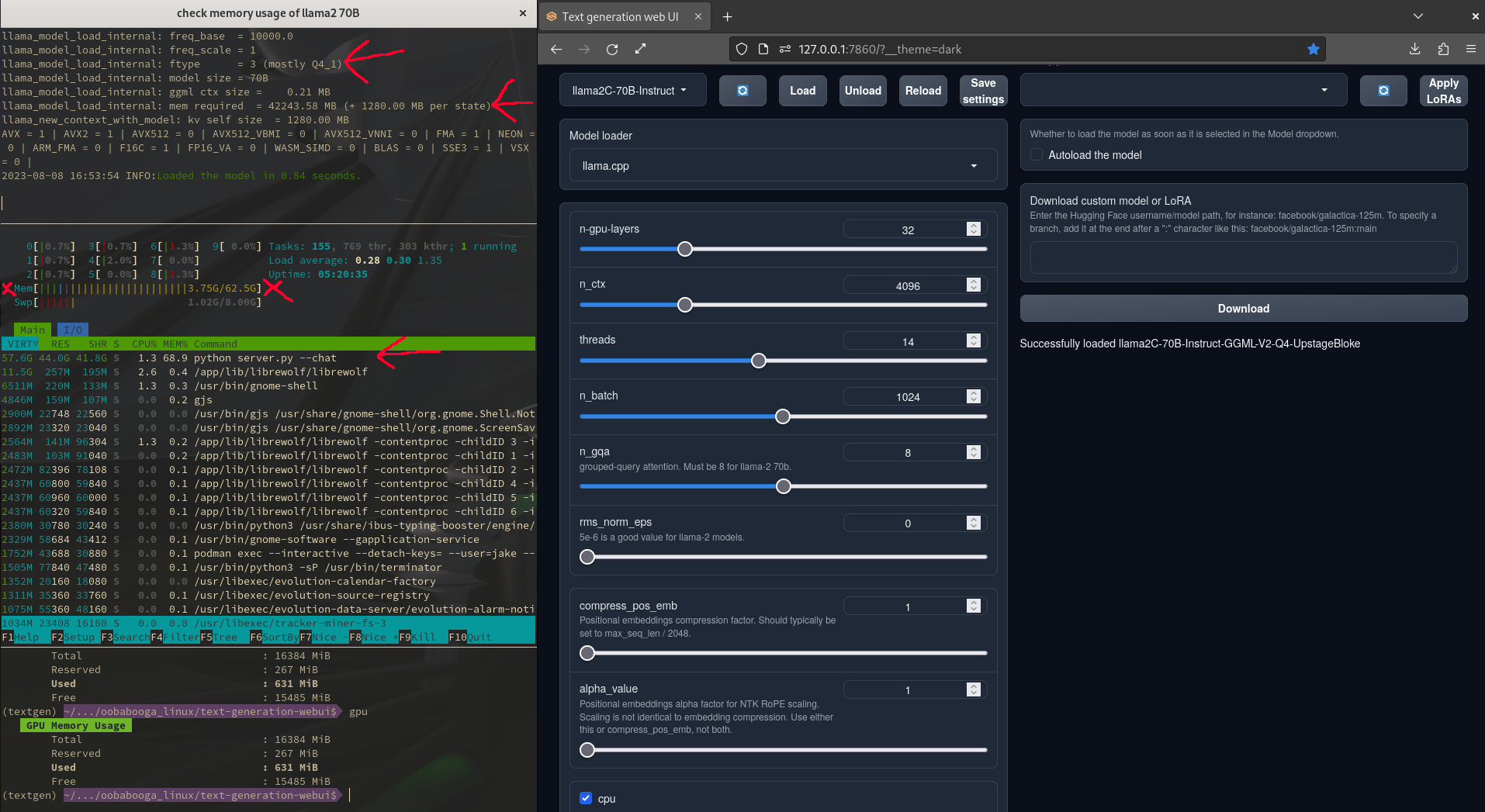

It is an enthusiast-level machine, a Gigabyte Aorus YE5. It is not a great choice for a Linux machine, but I don't know if any of the other 2022 machines that came with an RTX 3080 Ti are any better. Some settings cannot be changed except through Windows 11, because peripherals like the RGB keyboard depend on proprietary software. Nothing too bad or deal-breaking.

Basically, it is a 12th-gen Intel i7 running at around 4.4-4.7 GHz under boost, with 20 logical cores in total. The demo I shared put the LLM on 14 of those logical cores.

I've also upgraded the system RAM to the maximum of 64 GB. This is the only reason I am able to run this model. It pushes right up against the available memory; in fact, it also spills into the 8 GB swap partition on the NVMe drive when the model is first loaded. I haven't looked into this aspect in detail, and loading is still very fast. I'm simply comparing the amount of virtual memory it uses against that of the other processes running on the host and in Distrobox. It is probably not wise to do much in the background while this thing is running. I haven't tested it long enough to max out the context or anything, but I'll report back if there are major issues. Maybe one day this will have hybrid support so I can offload some layers to my GPU.
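For a rough sense of why 64 GB is the limiting factor, the weights of a model take about (parameter count × bits per weight ÷ 8) bytes. The comment doesn't say which quantization was used, so the bit widths below are illustrative assumptions, and this back-of-the-envelope sketch counts weights only: the KV cache, context buffers, and runtime overhead all come on top.

```python
def estimate_weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in GiB.

    Assumption: every weight is stored at the same bit width; this ignores
    KV cache, activations, and any runtime/library overhead.
    """
    bytes_total = n_params * bits_per_weight / 8  # bits -> bytes
    return bytes_total / (1024 ** 3)  # bytes -> GiB


if __name__ == "__main__":
    # Illustrative bit widths only; the actual quantization in the post is unknown.
    for bits in (4, 5, 8, 16):
        gib = estimate_weight_memory_gib(70e9, bits)
        print(f"{bits:>2}-bit: ~{gib:.1f} GiB")
```

Under these assumptions, 4-bit weights for a 70B model come to roughly 32.6 GiB, while 8-bit weights alone (about 65.2 GiB) would already exceed 64 GB of RAM before any overhead, which is why models this size on a CPU-only setup generally rely on quantization.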

The main hurdle I had with getting this running was conda not installing correctly for some reason. I'm not sure what happened with that one. I think the copy of conda that actually installed Oobabooga was somehow on the base host. Anyway, it left me with some conflicting dependencies: Oobabooga still ran, but some parts of the software didn't update correctly. I think this is also why I had so much trouble with Bark TTS.
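When it's unclear whether a tool was installed by a conda on the host or one inside the container, it can help to print which interpreter and environment are actually in use. This is a minimal diagnostic sketch using only the Python standard library, not the procedure used above; it relies on the `CONDA_PREFIX` and `CONDA_DEFAULT_ENV` variables that conda sets when an environment is activated, and the function name `describe_python_env` is hypothetical.

```python
import os
import shutil
import sys


def describe_python_env() -> dict:
    """Collect a few facts showing which Python environment is really running."""
    return {
        # Path of the interpreter that launched this script.
        "executable": sys.executable,
        "prefix": sys.prefix,
        "base_prefix": sys.base_prefix,
        # True only for venv/virtualenv; a conda env is a standalone install,
        # so prefix and base_prefix are normally equal there.
        "in_venv": sys.prefix != sys.base_prefix,
        # Set by `conda activate`; None if no conda env is active.
        "conda_prefix": os.environ.get("CONDA_PREFIX"),
        "conda_default_env": os.environ.get("CONDA_DEFAULT_ENV"),
        # Which `conda` executable (if any) is first on PATH.
        "conda_on_path": shutil.which("conda"),
    }


if __name__ == "__main__":
    for key, value in describe_python_env().items():
        print(f"{key:>18}: {value}")
```

Running it once on the host and once inside the container makes it easy to see whether both are resolving to the same conda or Python install.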