I'm proud to share a major development update for XPipe, a connection hub that allows you to access your entire server infrastructure from your local desktop. XPipe 14 is the biggest rework so far and provides an improved user experience, better team features, performance and memory improvements, and fixes for many existing bugs and limitations.

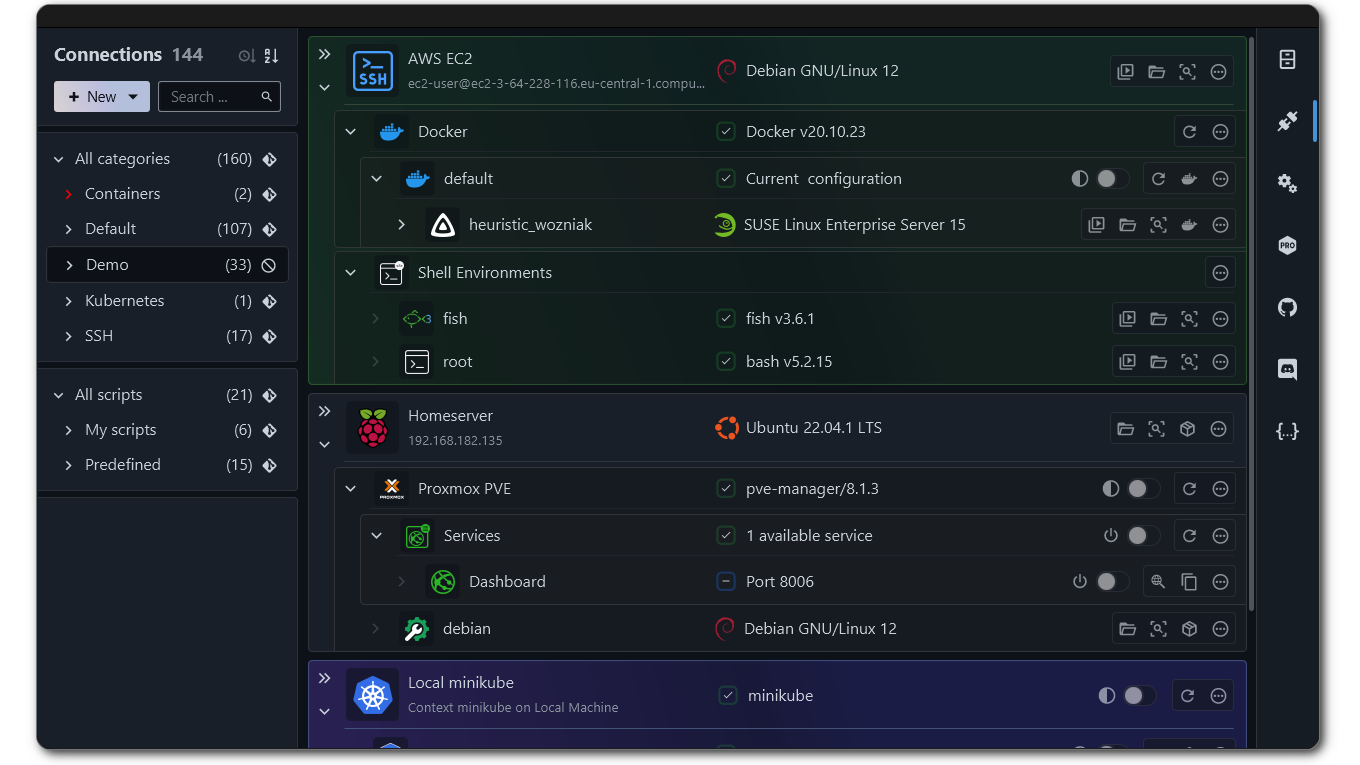

If you haven't seen it before, XPipe works on top of your installed command-line programs and does not require any setup on your remote systems. It integrates with your tools such as your favourite text/code editors, terminals, shells, command-line tools and more. Here is what it looks like:

Reusable identities + Team vaults

You can now create reusable identities for connections instead of having to enter authentication information for each connection separately. This will also make it easier to handle any authentication changes later on, as only one config has to be changed.

Furthermore, there is a new encryption mechanism for git vaults. It allows multiple users to keep their own private identities in a shared git vault by encrypting each identity with the personal key of the user it belongs to.

Incus support

- There is now full support for Incus

- The newly added features for Incus have also been ported to the LXD integration

Webtop

For users who also want to have access to XPipe when not on their desktop, there is the XPipe Webtop Docker image, a web-based desktop environment that runs in a container and can be accessed from a browser.

This Docker image has seen numerous improvements and is now considered stable. The container can now also be hosted on ARM systems. If you use Kasm Workspaces, you can now integrate the webtop into your workspace environment via the XPipe Kasm Registry.

Terminals

- Launched terminals are now automatically focused after launch

- There is now support for the new Ghostty terminal on Linux

- There is now support for Wave terminal on all platforms

- The Windows Terminal integration will now create and use its own profile to prevent certain settings from breaking the terminal integration

Performance updates

- Many improvements have been made to memory efficiency, making XPipe much less demanding on available RAM

- Various performance improvements have also been implemented for local shells, making almost any task in XPipe faster

Services

- There is now the option to specify a URL path for services that will be appended when opened in the browser

- You can now specify the service type instead of always having to choose between http and https when opening it

- There is now a new service type to run commands on a tunneled connection after it is established

- Services now indicate more clearly whether they are active or inactive
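
As a rough illustration of how a service type, a tunneled local port, and a URL path might combine into the address that gets opened in the browser, here is a minimal Python sketch. The function name `service_url`, its parameters, and the `localhost` default are hypothetical and chosen for illustration only; they are not taken from XPipe itself.

```python
from urllib.parse import urlunsplit


def service_url(scheme: str, port: int, path: str = "", host: str = "localhost") -> str:
    """Build the browser URL for a service tunneled to a local port.

    All names here are illustrative assumptions, not XPipe's actual API.
    """
    # Normalise the path so that "admin" and "/admin" behave the same.
    if path and not path.startswith("/"):
        path = "/" + path
    # Scheme comes from the chosen service type, the path is appended as given.
    return urlunsplit((scheme, f"{host}:{port}", path, "", ""))


if __name__ == "__main__":
    print(service_url("https", 8443, "admin/login"))
    print(service_url("http", 8080))
```

The idea is that the scheme is decided once by the service type, so opening the service no longer requires picking between http and https each time.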

File transfers

- You can now abort an active file transfer. You can find the button for that on the bottom right of the browser status bar

- File transfers where the target write fails due to permission issues or insufficient disk space are now cancelled more cleanly

Miscellaneous

- There are now translations for Swedish, Polish, and Indonesian

- There is now the option to censor all displayed contents, allowing for a simpler screen sharing workflow with XPipe

- The Yubikey PIV and PKCS#11 SSH auth options have been made more resilient to PATH issues

- XPipe will now commit a dummy private key to your git sync repository so that your git provider can potentially detect leaks of your repository contents

- Fix password manager requests not being cached and requiring an unlock every time

- Fix Yubikey PIV and other PKCS#11 SSH libraries not asking for the PIN on macOS

- Fix some container shells not working due to some issues with /tmp

- Fix fish shells launching as sh in the file browser terminal

- Fix zsh terminal not launching in the current working directory in the file browser

- Fix permission denied errors for script files in some containers

- Fix some file names that required escapes not being displayed in the file browser

- Fix special Windows files like OneDrive links not being shown in the file browser

A note on the open-source model

Since it has come up a few times, in addition to the note in the git repository, I would like to clarify that XPipe is not fully FOSS. The core that you can find on GitHub is Apache 2.0 licensed, but the distribution you download ships with closed-source extensions. There's also a licensing system in place as I am trying to make a living out of this. I understand that this is a deal-breaker for some, so I wanted to give a heads-up.

Outlook

If this project sounds interesting to you, you can check it out on GitHub or visit the website for more information.

Enjoy!