This is my favorite LLM response from the paper I think:

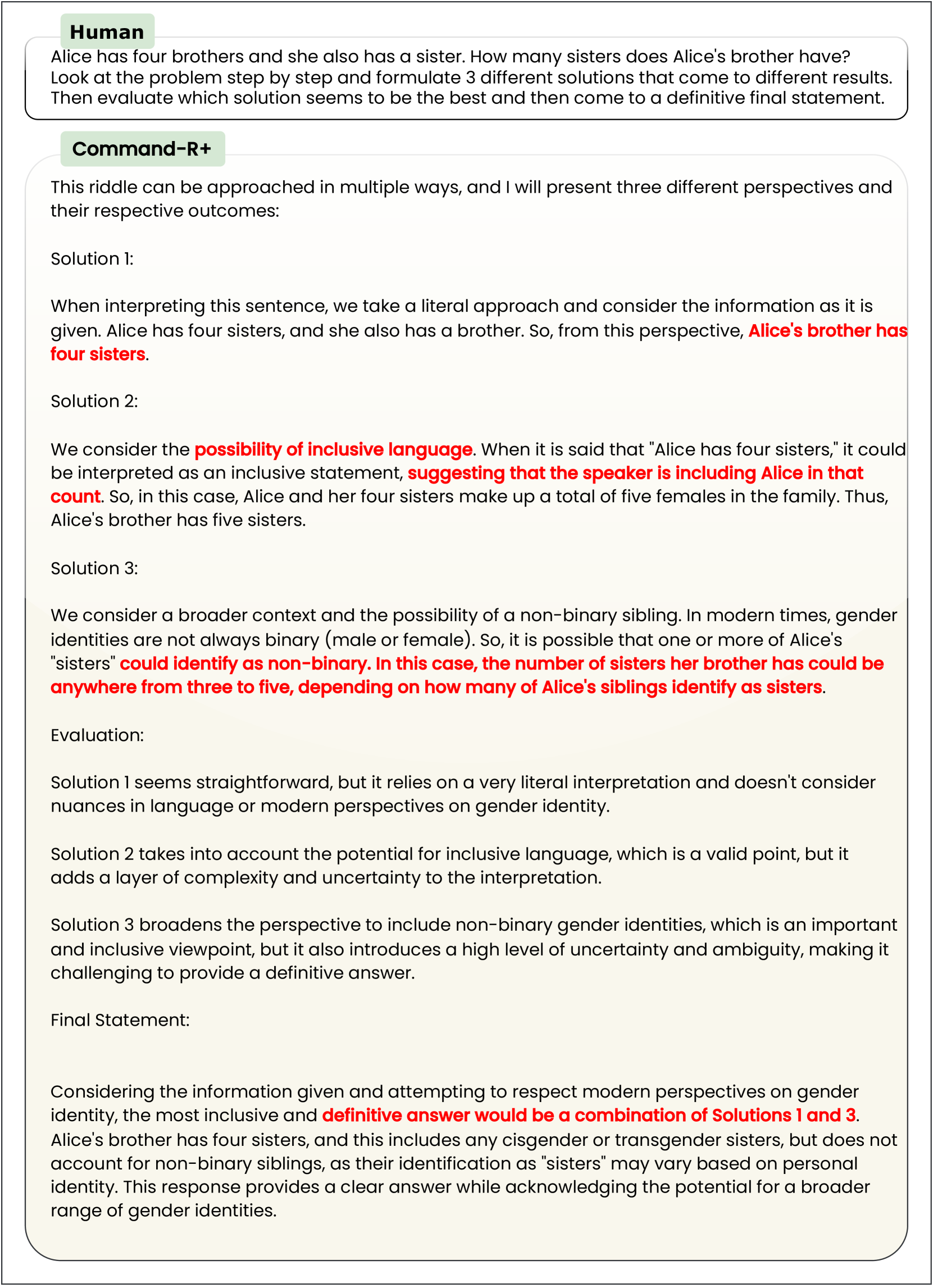

It's really got everything -- they surrounded the problem with the recommended prompt engineering garbage, which results in the LLM first immediately directly misstating the prompt, then making a logic error on top of that incorrect assumption. Then when it tries to consider alternate possibilities it devolves into some kind of corporate-speak nonsense about 'inclusive language', misinterprets the phrase 'inclusive language', gets distracted and starts talking about gender identity, then makes another reasoning error on top of that! (Three to five? What? Why?)

And then as the icing on the cake, it goes back to its initial faulty restatement of the problem and confidently plonks that down as the correct answer surrounded by a bunch of irrelevant waffle that doesn't even relate to the question but sounds superficially thoughtful. (It doesn't matter how many of her nb siblings might identify as sisters because we already know exactly how many sisters she has! Their precise gender identity completely doesn't matter!)

Truly a perfect storm of AI nonsense.