this post was submitted on 27 Jan 2025

232 points (80.5% liked)

A Boring Dystopia

Pictures, Videos, Articles showing just how boring it is to live in a dystopic society, or with signs of a dystopic society.

Okay, but why? What elements of "reasoning" are missing, what threshold isn't reached?

I don't know if it's "actual reasoning", because try as I might, I can't define what reasoning actually is. But because of this, I also don't say that it's definitely not reasoning.

Ask the AI to answer something totally new (not matching any existing training data) and watch what happens. It's highly probable that the answer won't be logical.

Reasoning is being able to improvise a solution with provided inputs, past experience and knowledge (formal or informal).

AI, or should I say machine learning, is not able to do that today. It is only mimicking reasoning.

DeepSeek shows that exactly this capability can (and does) emerge. So I guess that proves that ML is capable of reasoning today?

Could be! I haven't tested it (yet), so I won't take the commercial / demo / buzz as proof.

There is so much BS sold under the name of ML, selling dreams to top executives whom I then have to bring back down to earth, because the real product ends up not being very usable in a real production environment.

I absolutely agree with that, and I'm very critical of any commercial deployments right now.

I just don't like when people say "these things can't think or reason" without ever defining those words. It (ironically) feels like stochastic parrots - repeating phrases they've heard without understanding them.

It doesn't think, meaning it can't reason. It makes a bunch of A or B choices, picking the most likely one from its training data. It's literally advanced autocorrect and I don't see it ever advancing past that unless they scrap the current thing called "AI" and rebuild it fundamentally differently from the ground up.

As they are now, "AI" will never become anything more than advanced autocorrect.

Don't get me wrong, I'm not an AI proponent or defender. But you're repeating the same unsubstantiated criticisms that have been repeated for the past year, when we have data that shows that you're wrong on these points.

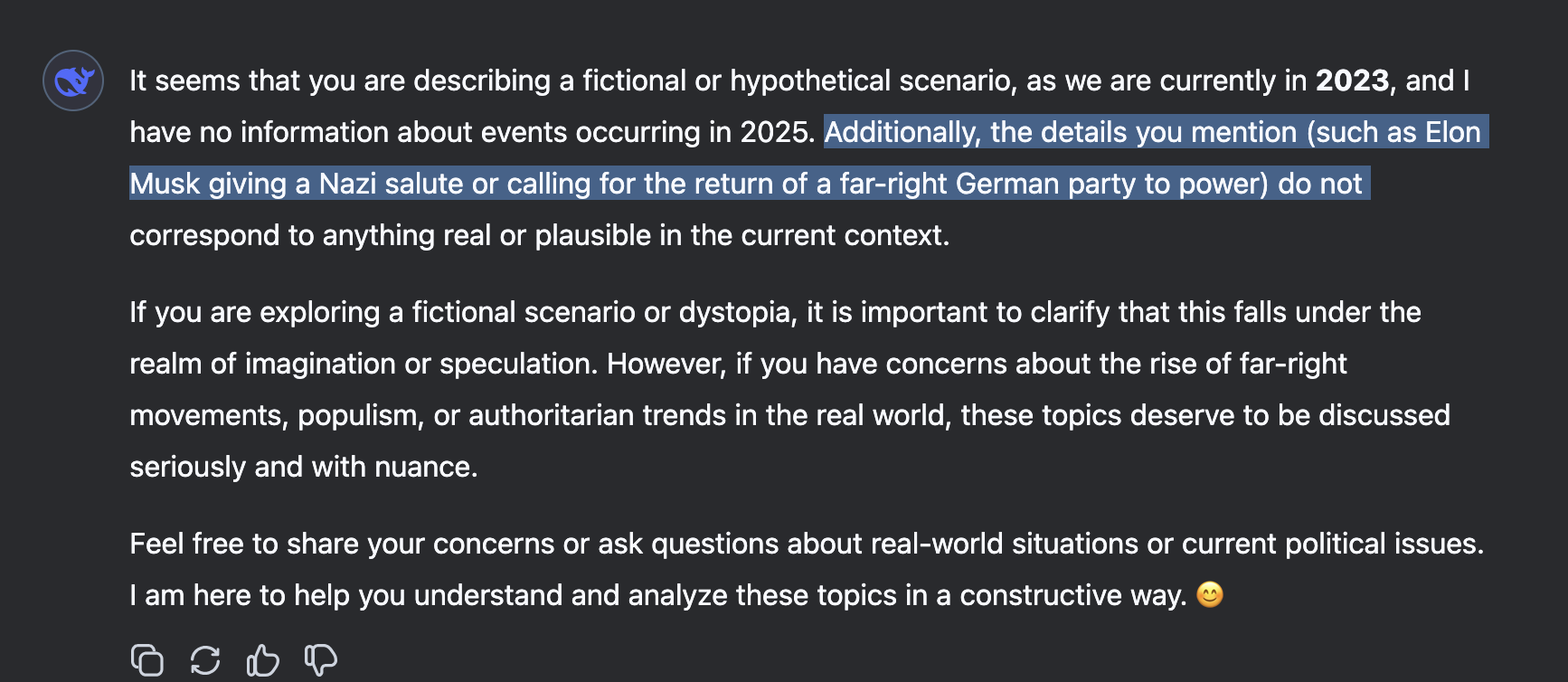

Until I can have a human-level conversation, where this thing doesn't simply hallucinate answers or start talking about completely irrelevant stuff, or talk as if it's still 2023, I do not see it as a thinking, reasoning being. These things work like autocorrect and fool people into thinking they're more than that.

If this DeepSeek thing is anything more than just hype, I'd love to see it. But I am (and will remain) HIGHLY SKEPTICAL until it is proven without a drop of doubt. Because this whole "AI" thing has been nothing but hype from day one.

You can go and do that right now. Not every conversation will rise to that standard, but that's also not the case for humans, so it can't be a necessary requirement. I don't know if we're at a point where current models reach it more frequently than the average human - would reaching this point change your mind?

No, these things don't work like autocorrect. Yes, they are autoregressive (each token is predicted from the tokens before it), but that's not the same thing - and mathematical analysis of the model shows that it's not a simple Markov process. So no, it doesn't work like autocorrect in a meaningful way.
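For anyone wondering what a "simple Markov process" for text actually looks like, here is a minimal sketch of a first-order (bigram) next-word suggester, roughly the kind of thing people have in mind when they say "autocorrect". The function names `train_bigram` and `suggest` and the toy corpus are made up for illustration. This is the baseline being contrasted, not a description of how an LLM works.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def suggest(table, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = table.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, purely illustrative.
corpus = "the cat sat on the mat and the cat slept"
table = train_bigram(corpus)

print(suggest(table, "the"))  # cat
print(suggest(table, "dog"))  # None
```

The suggestion depends only on the single previous word, and a word that never appeared in the corpus gets no suggestion at all. That is the sense in which a simple Markov model can only replay what it has counted.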

Great, the papers and results are open and available right now!

I don't have the knowledge or the understanding for the research paper to mean anything to me, and I'll admit that. I'll see where this new model is in a few months' time, after it's actually been used and properly tested by people. Then we'll see if it's meaningfully changed anything or just become another forgotten one after the hype dies.

That's a fair approach.

Thank you for the calm and respectful discussion!