My NAS is on an embedded Xeon that at this point is close to a decade old, and one of my Proxmox boxes is on an Intel i5-6500T. I'm not really running anything on any really low-spec machines anymore, though earlyish in the pandemic I was running BOINC with the Open Pandemics project on 4 Raspberry Pis.

I had an old Acer SFF desktop machine (circa 2009) with an AMD Athlon II 435 X3 (equivalent to the Intel Core i3-560) with a 95W TDP, 4 GB of DDR2 RAM, and 2 1TB hard drives running in RAID 0 (both HDDs had over 30k hours by the time I put them in). The clunker consumed 50W at idle. I planned on running it into the ground so I could finally send it off to a computer recycler without guilt.

I thought it was nearing death anyways, since the power button only worked if the computer was flipped upside down. I have no idea why this was the case; the computer would keep running normally once turned right side up again.

The thing would not die. I used it as a dummy machine to run one-off scripts I wrote, a seedbox that would seed new Linux ISOs as they were released (genuinely, it was RAID 0 and I wouldn't have downloaded anything useful), a Tor relay and, at one point, a script to just endlessly download Linux ISOs overnight to measure bandwidth over the Chinanet backbone.

It was a terrible machine by 2023, but I found I used it the most because it was my playground for all the dumb things that I wouldn't subject my regular home production environments to. Finally recycled it last year, after 5 years of use, when it became apparent it wasn't going to die and far better USFF 1L Tiny PC machines (i5-6500T CPUs) were going on eBay for $60. The power usage and wasted heat of an ancient 95W TDP CPU just couldn't justify its continued operation.
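For anyone weighing the same decision, the arithmetic is easy to sketch. The 50 W idle figure is from the post above; the replacement's idle draw and the electricity price below are placeholder assumptions, not measurements, so plug in your own numbers.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_kwh(idle_watts: float) -> float:
    """Energy used in a year by a machine idling 24/7 at idle_watts."""
    return idle_watts * HOURS_PER_YEAR / 1000


def annual_cost(idle_watts: float, price_per_kwh: float) -> float:
    """Yearly running cost at a flat price per kWh."""
    return annual_kwh(idle_watts) * price_per_kwh


if __name__ == "__main__":
    OLD_IDLE_W = 50        # from the post
    NEW_IDLE_W = 10        # assumption: hypothetical idle draw of a 1L tiny PC
    PRICE_PER_KWH = 0.30   # assumption: hypothetical tariff, use your local rate

    saved = annual_cost(OLD_IDLE_W, PRICE_PER_KWH) - annual_cost(NEW_IDLE_W, PRICE_PER_KWH)
    print(f"Old box: {annual_kwh(OLD_IDLE_W):.0f} kWh/year")
    print(f"Estimated yearly saving: {saved:.2f}")
```

This only models idle draw at a flat tariff; real usage under load would widen the gap further.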

Always wanted an X3, just such an oddball thing. I love this. I had a 965 X4.

People in this thread have very interesting ideas of what "shit hardware" is

My cluster ranges from 4th gen to 8th gen Intel stuff. 8th gen is the newest I've ever had (until I built a 5800X3D PC).

I've seen people claiming 9th gen is "ancient". Like...ok moneybags.

My 9th gen intel is still not the bottleneck of my 120hz 4K/AI rig, not by a longshot.

Just download more RAM capacity. It's the button right under the download more RAM button.

Plex server is running on my old Threadripper 1950X. Thing has been a champ. Due to rebuild it since I've got newer hardware to cycle into it but been dragging my heels on it. Not looking forward to it.

All my stuff is running on a 6-year-old Synology DS918+ that has a Celeron J3455 (4-core 1.5 GHz) but upgraded to 16 GB RAM.

Funny enough my router is far more powerful, it's a Core i3-8100T, but I was picking out of the ThinkCentre Tiny options and was paranoid about the performance needed on a 10 Gbit internet connection

Kind of... an "AMD GX-420GI SOC: quad-core APU", the one with no L3 cache, in a thin client with 8 GB RAM and an old laptop SSD for storage (128 GB). Nextcloud is usable but not fast.

Edit: the best thing: it's 100% fanless.

My first @home server was an old defective iMac G3, but it did the job (and then died for good). A while back, I got an RP3 and then a small thin client with some small AMD CPU. They (barely) got the job done.

I replaced them with an HP EliteDesk G2 micro with an i5-6500T. I don't know what to do with the extra power.

I was for a while. Hosted a LOT of stuff on an i5-4690K overclocked to hell and back. It did its job great until I replaced it.

Now my servers don't lag anymore.

EDIT: CPU usage was almost always at max. I was just redlining that thing for ~3 years. Cooling was a beefy Noctua air cooler so it stayed at ~60 C. An absolute powerhouse.

your hardware ain't shit until it's a first gen core2duo in a random Dell office PC and 2gb of memory that you specifically only use just because it's a cheaper way to get x86 when you can't use your raspberry pi.

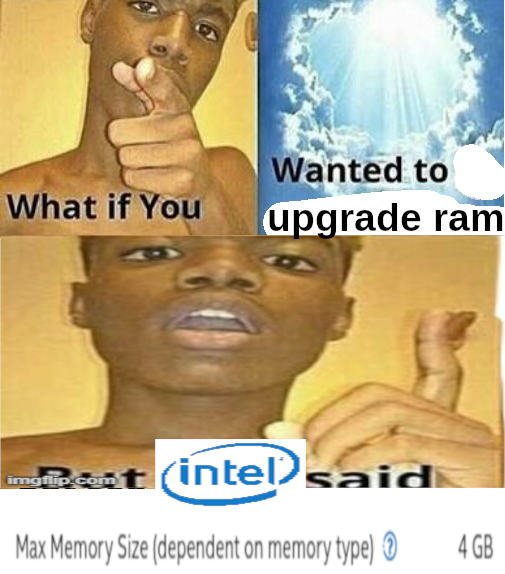

Also they lie most of the time and it may technically run fine on more memory, especially if it's older when dimm capacities were a lot lower than they can be now. It just won't be "supported".

4 gigs of RAM is enough to host many singular projects - your own backup server or VPN for instance. It's only if you want to do many things simultaneously that things get slow.

It is amazing what you can do with so little. My server has nas, jellyfin, plex, ebook reader, recipe, vpn, notes, music server, backups, and serves 4 people. If it hits 4gb ram usage it is a rare day.

You can do quite a bit with 4GB RAM. A lot of people use VPSes with 4GB (or less) RAM for web hosting, small database servers, backups, etc. Big providers like DigitalOcean tend to have 1GB RAM in their lowest plans.

I'm sure a lot of people's self hosting journey started on junk hardware... "try it out", followed by "oh this is cool" followed by "omg I could do this, that and that" followed by dumping that hand-me-down garbage hardware you were using for something new and shiny specifically for the server.

My unRAID journey was this exactly. I now have a rack-mounted case with 12 hot-swap bays, a multi-core Ryzen 9, and ECC RAM, but it started out with my 'old' PC and a few old/small HDDs.

3x Intel NUC 6th gen i5 (2 cores) 32gb RAM. Proxmox cluster with ceph.

I just ignored the limitation and tried a single 32gb SO-DIMM once (out of a laptop) and it worked fine, but I went back to 2x16gb DIMMs since the limit was still the 2 CPU cores. Lol.

Running that cluster 7 or so years now since I bought them new.

I suggest only running off shit tier since three nodes give redundancy and enough performance. I've run entire proofs of concept for clients off them: dual domain controllers and FC RD gateway, broker, session hosts, FSLogix etc., back when MS had only just bought that tech. Meanwhile my home "ARR" stack just plugs on in Docker containers. Even my OPNsense router is virtual, running on them. Just get a proper managed switch and take the internet in on a VLAN into the guest VM on a separate virtual NIC.

Point is, it's still capable today.

How is ceph working out for you btw? I'm looking into distributed storage solutions rn. My usecase is to have a single unified filesystem/index, but to store the contents of the files on different machines, possibly with redundancy. In particular, I want to be able to upload some files to the cluster and be able to see them (the directory structure and filenames) even when the underlying machine storing their content goes offline. Is that a valid usecase for ceph?

I'm far from an expert, sorry, but my experience is so far so good (literally wizard-configured in Proxmox, set and forget), even through a single disk loss. Performance for VM disks was great.

I can't see why regular files would be any different.

I have 3 disks, one on each host, with ceph handling 2 copies (tolerant to 1 disk loss) distributed across them. That's practically what I think you're after.
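As a rough back-of-the-envelope for that layout, here is a minimal sketch of what replication costs in capacity. It is a simplified model, not how Ceph itself reports space: it assumes equally sized disks, one disk per host, and ignores metadata overhead and near-full limits. The 1 TB disk size is a made-up example value.

```python
def usable_capacity_tb(disk_sizes_tb: list[float], replicas: int) -> float:
    """Approximate usable space when every object is stored `replicas` times.

    Simplified: assumes the disks are similar in size so data spreads evenly.
    """
    if replicas < 1 or replicas > len(disk_sizes_tb):
        raise ValueError("replicas must be between 1 and the number of disks")
    return sum(disk_sizes_tb) / replicas


def tolerated_disk_losses(replicas: int) -> int:
    """Number of disks that can fail while one copy of the data still survives."""
    return replicas - 1


if __name__ == "__main__":
    disks = [1.0, 1.0, 1.0]  # assumption: three hypothetical 1 TB disks, one per host
    print(usable_capacity_tb(disks, replicas=2), "TB usable")
    print(tolerated_disk_losses(2), "disk loss tolerated")
```

With 2 copies across 3 disks you give up half the raw space in exchange for surviving one disk failure, which matches the setup described above.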

I'm not sure about seeing the file system while all the hosts are offline, but if you've got any one system with a valid copy online you should be able to see it. I do. But my emphasis is generally on getting the host back online.

I'm not 100% sure what you're trying to do, but a mix of Ceph as remote storage plus something like Syncthing on an endpoint to send stuff to it might work? Syncthing might just work without Ceph.

I also run ZFS on an 8-disk NAS that's my primary storage, with shares for my Docker containers to send stuff to and for my media server to get it off. That's just TrueNAS SCALE. That way it handles data similarly. ZFS is also very good, but until SCALE came out, it wasn't really possible to have the "add a compute node to expand your storage pool" setup, which is how I want my VM hosts. ZFS scale looks way harder than Ceph.

Not sure if any of that is helpful for your case but I recommend trying something if you've got spare hardware, and see how it goes on dummy data, then blow it away try something else. See how it acts when you take a machine offline. When you know what you want, do a final blow away and implement it with the way you learned to do it best.

I'm self-hosting on a ThinkCentre with a 500GB HDD, a 2-core AMD A6, and 8GB RAM (LAN access only) that I got very cheap.

It could be better, I'm going to buy a new computer for personal use and I'm the only one in my family who uses the hosted services, so upgrades will come later 😴

I've got an i3-10100, 16gb ram, and an unused GTX 960. It's terrible but it's amazing at the same time. I built it as a gaming PC then quit gaming.

10th gen is hardly "shit hardware".

That's a pretty solid machine

Odd, I have a Celeron J3455 which according to Intel only supports 8GB, yet I run it with 16 GB

Same here in a Synology DS918+. It seems like the official Intel support numbers can be a bit pessimistic (maybe the higher density sticks/chips just didn't exist back when the chip was certified?)

I've only run into that with different editions of Windows imposing their own limits.

The oldest hardware I'm still using is an Intel Core i5-6500 with 48GB of RAM running our Palworld server. I have an upgrade in the pipeline to help with the lag, because the CPU is constantly stressed, but it still will run game servers.