Hello there Selfhosted community!

I'm announcing the completion of a project I've been working on: a script for installing Ubuntu 24.04 on a ZFS RAID 10. I'd like to describe why I chose to develop it and how I'd like other people to have access to it as well. Let's start with the hardware.

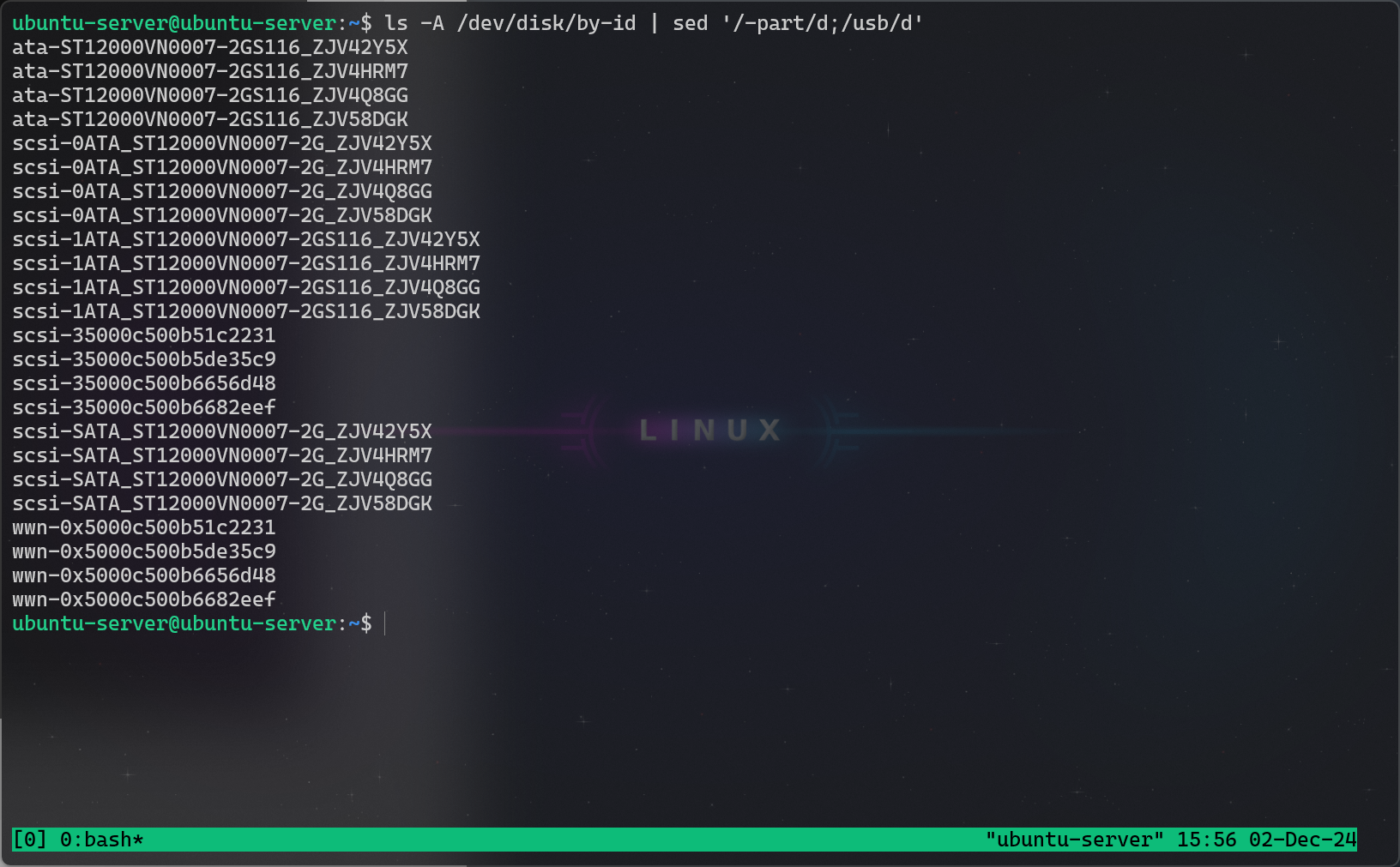

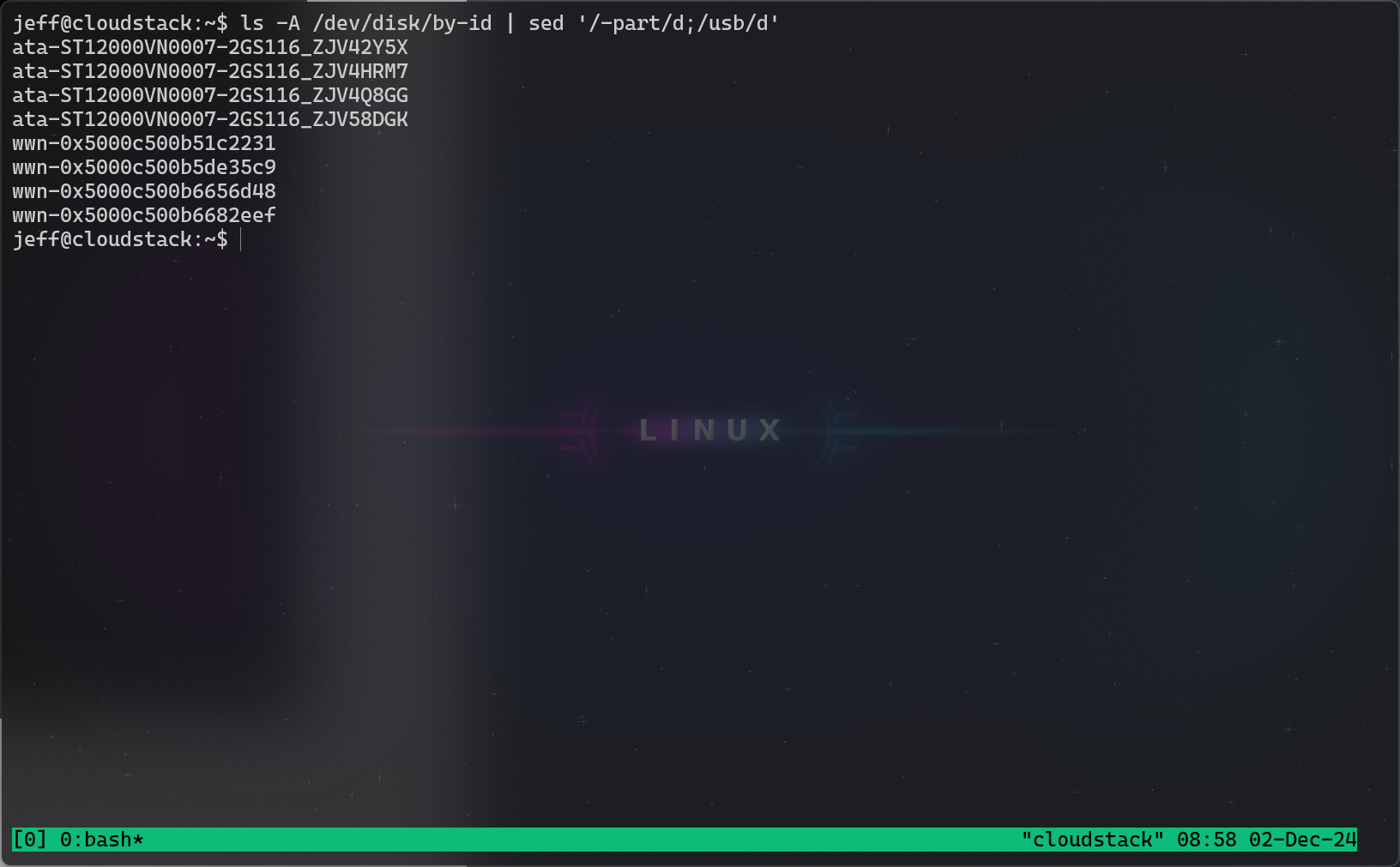

I'm using an old host. It was originally a BCDR (business continuity and disaster recovery) device built around a ZFS raidz implementation. Since it was designed for ZFS, it doesn't have a RAID card, only an HBA, so ZFS is a good way to get redundancy. Even though this was a backup appliance, it did not have root on ZFS. Instead, it had a separate hard drive for the operating system and three individual disks for the zpool. That was not what I wanted.

So I did a little research and testing. I looked at two guides in particular (Debian/Ubuntu). I performed those steps dozens of times because I kept messing up the little things. To eliminate the human error (that's me), I decided to just go ahead and script the whole thing.

The GitHub repository I linked contains all the code needed to set up a generic ubuntu-server host on a ZFS RAID 10.
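For anyone new to the term: ZFS builds "RAID 10" as a pool of mirror vdevs that it stripes across. Here is a minimal sketch of what that looks like on the command line. It only prints the `zpool create` command rather than running it. The function name `build_raid10_cmd`, the pool name `rpool`, and the `/dev/sdX` device names are placeholders I made up for illustration. The script in the repository may build its pool differently (root-on-ZFS setups usually add more options and use `/dev/disk/by-id` paths).

```shell
# Print (do not run) a zpool create command that pairs disks into
# striped mirrors, which is how ZFS expresses RAID 10.
# Hypothetical helper for illustration; not taken from the repository.
build_raid10_cmd() {
    pool="$1"
    shift
    # RAID 10 needs at least two mirrored pairs, so an even count >= 4.
    if [ $(( $# % 2 )) -ne 0 ] || [ $# -lt 4 ]; then
        echo "need an even number of disks (at least 4)" >&2
        return 1
    fi
    cmd="zpool create $pool"
    # Consume the disks two at a time; each pair becomes one mirror vdev.
    while [ $# -gt 0 ]; do
        cmd="$cmd mirror $1 $2"
        shift 2
    done
    echo "$cmd"
}

# Example with placeholder device names:
build_raid10_cmd rpool /dev/sda /dev/sdb /dev/sdc /dev/sdd
```

Each `mirror` keyword starts a new two-disk vdev, and ZFS stripes writes across all of the vdevs in the pool. That is where the "1+0" comes from.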

Starting the script is easy. Boot a live CD (https://ubuntu.com/download/server), press CTRL+ALT+F2 to get to a shell, and run the following command:

    bash <(wget -qO- https://raw.githubusercontent.com/Reddimes/ubuntu-zfsraid10/refs/heads/main/tools/install.sh)

This command fetches install.sh, which clones the repository, changes into its directory, and runs the entry point (sudo ./init.sh). It should be easy to customize to meet your needs.
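If you'd rather not pipe a remote script straight into bash, the same steps can be done by hand. This is a rough sketch based only on the description above, not a copy of install.sh. The clone URL is inferred from the raw.githubusercontent.com address, and I'm assuming `git` is available in the live environment. The `manual_install` function and the `DRY_RUN` switch are my own additions for illustration.

```shell
# Manual equivalent of the one-liner, following the steps described above.
# Assumptions: git is installed; the clone URL is inferred from the raw URL.
REPO_URL="https://github.com/Reddimes/ubuntu-zfsraid10.git"
REPO_DIR="ubuntu-zfsraid10"

manual_install() {
    # With DRY_RUN=1 each step is only printed, so it can be reviewed first.
    run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    $run git clone "$REPO_URL" "$REPO_DIR" || return 1
    $run cd "$REPO_DIR" || return 1
    $run sudo ./init.sh
}

# Print the steps without executing them:
DRY_RUN=1 manual_install
```

Calling `manual_install` without `DRY_RUN=1` runs the steps for real. Doing the clone yourself also gives you a chance to read or edit the scripts before anything touches your disks.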

More engineering details are on GitHub.