Setting up some proper infrastructure to move the perchance sets onto Huggingface with text_encodings.

This post is a developer diary, kind of. It's gonna be a bit haphazard, but that's the way things are before I get the Huggingface gradio module up and running.

The NND method is described in this paper, which presents various ways to improve CLIP Interrogators: https://arxiv.org/pdf/2303.03032

Easier to just use the notebook than follow this gibberish. We pre-encode a bunch of prompt items, then select the most similar one using dot product. That's the TLDR.
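A minimal sketch of that TLDR, in plain Python so it runs anywhere. The 3-dimensional vectors are made-up stand-ins for real CLIP text encodings (the notebook computes those with the actual model), and `most_similar` is a hypothetical helper name, not a function from the notebook. Vectors are normalized first, so the dot product here equals cosine similarity.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so dot product == cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def most_similar(target, items, encodings):
    """Return the (item, score) whose encoding has the highest dot product with target."""
    target = normalize(target)
    scores = [dot(target, normalize(enc)) for enc in encodings]
    best = max(range(len(items)), key=scores.__getitem__)
    return items[best], scores[best]

# Toy stand-ins for pre-encoded prompt items (real CLIP encodings are much larger).
items = ["red car", "blue sky", "green tree"]
encodings = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]]

best_item, best_score = most_similar([0.9, 0.2, 0.1], items, encodings)
print(best_item, round(best_score, 3))
```

Because the item encodings are computed once up front, each new target only costs one pass of dot products.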

I'll try to showcase it at some point. But really, I'm mostly building this tool because it is very convenient for myself + a fun challenge to use CLIP.

It's more complicated than the regular CLIP interrogator, but we get a whole bunch of items to select from, and can select exactly "how similar" we want it to be to the target image/text encoding.
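One way to read "how similar" is as a position in the ranked list: 0% starts at the closest item, and higher percentages start further down. The sketch below assumes that interpretation (the notebook may expose it differently); `pick_by_similarity` and the scores are hypothetical illustration values.

```python
def pick_by_similarity(items, scores, start_pct, count):
    """Sort items by descending score, then take `count` items starting
    at `start_pct` percent of the way down the ranked list."""
    ranked = sorted(zip(items, scores), key=lambda pair: pair[1], reverse=True)
    start = min(int(len(ranked) * start_pct / 100), len(ranked) - 1)
    return [item for item, _ in ranked[start:start + count]]

# Hypothetical similarity scores against some target encoding.
items = ["anna", "bella", "cora", "dina", "elle"]
scores = [0.31, 0.92, 0.55, 0.12, 0.78]

closest = pick_by_similarity(items, scores, start_pct=0, count=2)
middle = pick_by_similarity(items, scores, start_pct=40, count=2)
print(closest, middle)
```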

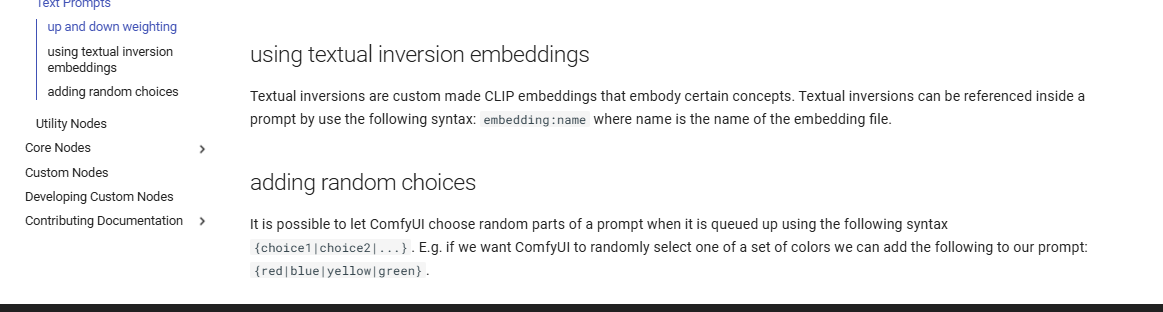

The {itemA|itemB|itemC} format is used because it selects an item at random when used on the perchance text-to-image servers: https://perchance.org/fusion-ai-image-generator

It is also a built-in random selection feature on ComfyUI, coincidentally:

Source: https://blenderneko.github.io/ComfyUI-docs/Interface/Textprompts/#up-and-down-weighting
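To make the {itemA|itemB|itemC} format concrete, here is a small local imitation of it. This is only a sketch of the behaviour, not how perchance or ComfyUI implement it; `to_choice_block` and `resolve_choices` are hypothetical names, and nested blocks are not handled.

```python
import random
import re

def to_choice_block(items):
    """Join items into the {itemA|itemB|itemC} random-choice format."""
    return "{" + "|".join(items) + "}"

def resolve_choices(prompt, rng=random):
    """Replace every flat {a|b|c} block with one randomly chosen option."""
    return re.sub(
        r"\{([^{}]*)\}",
        lambda match: rng.choice(match.group(1).split("|")),
        prompt,
    )

block = to_choice_block(["anna", "bella", "cora"])
prompt = "photo of " + block + " smiling"
print(prompt)                                      # the prompt as you would paste it
print(resolve_choices(prompt, random.Random(0)))   # one possible resolved prompt
```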

Links/Resources posted here might be useful to someone in the meantime.

You can find tons of strange modules on the Huggingface page: https://huggingface.co/spaces

For now you will have to make do with the NND CLIP Interrogator notebook: https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb

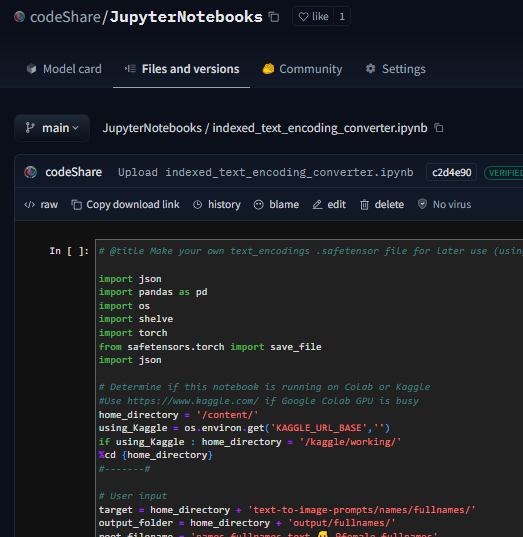

text_encoding_converter (also in the NND notebook): https://huggingface.co/codeShare/JupyterNotebooks/blob/main/indexed_text_encoding_converter.ipynb

I'm using this to batch process JSON files into json + text_encoding paired files. Really useful (for me at least) when building the interrogator. Runs on either a Colab GPU or on Kaggle for added speed: https://www.kaggle.com/

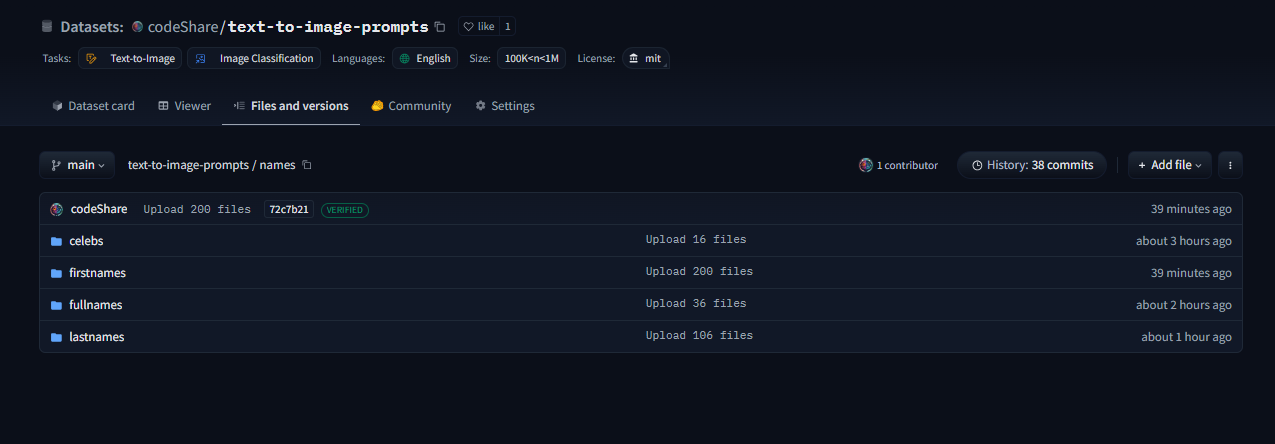

Here is the dataset folder: https://huggingface.co/datasets/codeShare/text-to-image-prompts

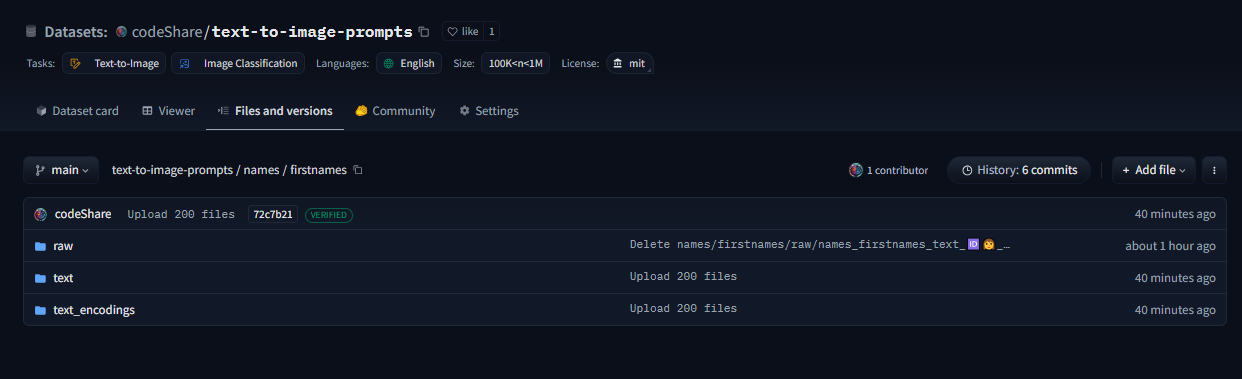

Inside these folders you can see the auto-generated safetensor + json pairings in the "text" and "text_encodings" folders.

The JSON file(s) of prompt items from which these were processed are in the "raw" folder.

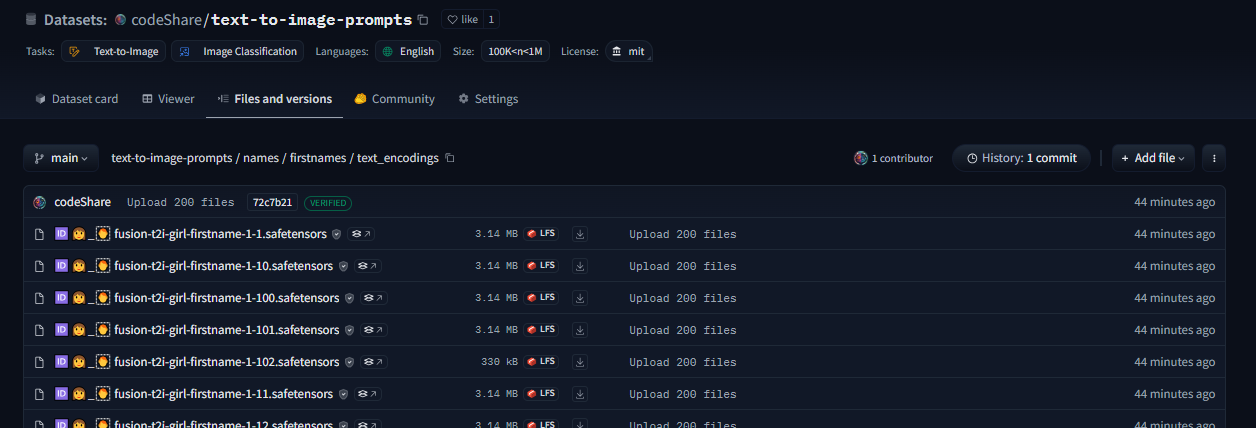

The text_encodings are stored as safetensors. These all represent 100K female first names, with 1K items in each file.

Splitting the files this way uses way less RAM / VRAM, as lists of 1K can be processed one at a time.
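The memory saving can be sketched like this: stream through one chunk at a time and only keep the best few matches seen so far. `iter_chunks` is a stand-in for loading one file pair from disk (an assumption, the notebook reads the real safetensors files), and the data is toy-sized.

```python
import heapq

def iter_chunks(all_items, all_encodings, chunk_size):
    """Stand-in for loading one file pair at a time from disk."""
    for start in range(0, len(all_items), chunk_size):
        end = start + chunk_size
        yield all_items[start:end], all_encodings[start:end]

def top_k_across_chunks(target, chunks, k):
    """Keep only the k best (score, item) pairs while streaming through chunks."""
    best = []  # min-heap of (score, item); the lowest score is evicted first
    for items, encodings in chunks:
        for item, enc in zip(items, encodings):
            score = sum(t * e for t, e in zip(target, enc))
            if len(best) < k:
                heapq.heappush(best, (score, item))
            elif score > best[0][0]:
                heapq.heapreplace(best, (score, item))
    return sorted(best, reverse=True)

# Toy data: 6 items in chunks of 2 (the real files hold 1K items each).
names = ["a", "b", "c", "d", "e", "f"]
encs = [[0.1, 0.0], [0.9, 0.1], [0.4, 0.4], [0.0, 1.0], [0.8, 0.3], [0.2, 0.2]]

result = top_k_across_chunks([1.0, 0.0], iter_chunks(names, encs, 2), k=2)
print([item for _, item in result])
```

Only one chunk plus the k current winners is held in memory at any point, no matter how many files there are.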

//-----//

Had some issues earlier with IDs not matching their embeddings, but that should be resolved with the new method I'm using now. The hardest part is always getting the infrastructure in place.

I can process roughly 50K text encodings in about the time it takes to write this post (currently processing a set of 100K female firstnames into text encodings for the NND CLIP interrogator).

EDIT: Here is the uploaded output: https://huggingface.co/datasets/codeShare/text-to-image-prompts/tree/main/names/firstnames

I've updated the notebook to include a similarity search for ~100K female firstnames, 100K lastnames and a randomized 36K mix of female firstnames + lastnames.

Sources for firstnames: https://huggingface.co/datasets/jbrazzy/baby_names

List of most popular names given to people in the US by year

Sources for lastnames: https://github.com/Debdut/names.io

An international list of all firstnames + lastnames in existence, pretty much. Kinda borked as it is biased towards non-western names. Haven't been able to filter this by nationality, unfortunately.

//------//

It's a JSON + safetensor pairing with 1K items in each. Inside the JSON is the name of the .safetensors file it corresponds to. This system is super quick :)!
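A rough sketch of what such a pairing could look like on the JSON side. The key names (`encodings_file`, `num_items`, `items`) and file naming are assumptions for illustration, the real files in the repo may be laid out differently. The `ids_match` check shows one simple way to catch IDs drifting out of sync with their encodings.

```python
import json

def build_paired_json(items, chunk_size, prefix):
    """Split items into chunks and build one JSON-ready dict per chunk.
    Each dict records the name of the encodings file it pairs with
    (hypothetical layout; the real files may use different keys)."""
    files = {}
    for n, start in enumerate(range(0, len(items), chunk_size), start=1):
        chunk = items[start:start + chunk_size]
        files[f"{prefix}-{n}.json"] = {
            "encodings_file": f"{prefix}-{n}.safetensors",  # assumed key name
            "num_items": len(chunk),
            "items": {str(i): item for i, item in enumerate(chunk)},
        }
    return files

def ids_match(record, num_encodings):
    """Check that the JSON holds exactly one ID per stored encoding."""
    return record["num_items"] == num_encodings == len(record["items"])

# Toy example with chunks of 2 (the real pairs hold 1K items each).
files = build_paired_json(["anna", "bella", "cora", "dina", "elle"], chunk_size=2, prefix="names")
print(json.dumps(files["names-3.json"], indent=2))
```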

I plan on running a list of celebrities against the randomized list for firstnames + lastnames in order to create a list of fake "celebrities" that only exist in Stable Diffusion latent space.

An "ethical" celebrity list, if you can call it that: names that have similar text encodings to real people but are not actually real names.

I plan to make the NND image interrogator a public resource on Huggingface later down the line, using these sets. Will likely use the repo for perchance imports as well: https://huggingface.co/datasets/codeShare/text-to-image-prompts