If you blindly follow whatever it tells you, you deserve whatever happens to you and your computer.

Arch Linux

The beloved lightweight distro

Totally agree. If you learn by nuking your system, you aren’t going to forget that lesson very easily. Fortunately though, there are also nicer ways to learn.

Filed under: "LLMs are designed to make convincing sentences. Language models should not be used as knowledge models."

I wish I got a dollar every time someone shared their surprise at an LLM saying something factually incorrect. I wouldn't need to work a day.

People expect a language model to be really good at other things besides language.

If you’re writing an email where you need to express a particular thought or a feeling, ask some LLM what would be a good way to say it. Even though the suggestions are pretty useful, they may still require some editing.

This use case and asking for information are completely different things. It can stylize some input perfectly fine. It just can't be a source of accurate information. It is trained to generate text that sounds plausible.

There are already ways to get around that, even though they aren't perfect. You can give it the source of truth and ask it to answer using only information found in there. Even then, you should check the accuracy of its responses.
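
To make the "give it the source of truth" idea concrete, here is a minimal sketch of how you could wrap a question and then do a crude check on the reply. Both function names are made up for illustration, no particular LLM API is assumed, and the quote check only catches quotes that aren't in the source at all. It doesn't prove the answer is right.

```python
def build_grounded_prompt(source_text: str, question: str) -> str:
    """Wrap a question so the model is told to answer only from source_text.

    Hypothetical helper: the exact wording of the instructions is an assumption,
    not a recipe that is guaranteed to work with any given model.
    """
    return (
        "Answer the question using ONLY the source below. "
        "Quote the sentences you relied on. "
        "If the source does not contain the answer, reply 'not in source'.\n\n"
        f"--- SOURCE ---\n{source_text.strip()}\n--- END SOURCE ---\n\n"
        f"Question: {question.strip()}"
    )


def unsupported_quotes(quotes: list[str], source_text: str) -> list[str]:
    """Return the quotes that do not appear verbatim in the source.

    Whitespace and letter case are normalized before comparing, so this is
    only a rough sanity check on what the model claims to have quoted.
    """
    normalized_source = " ".join(source_text.split()).lower()
    return [
        quote
        for quote in quotes
        if " ".join(quote.split()).lower() not in normalized_source
    ]
```

You would paste the result of `build_grounded_prompt` into the chat, copy out whatever sentences the model claims to be quoting, and pass them to `unsupported_quotes`. Anything that comes back is something it made up rather than found in your source.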

Oh, yeah, it has a habit of pretending to know things. For example, I work with a lot of proprietary software with not much public documentation, and when I ask GPT about it, GPT will absolutely pretend to know about it and give nonsensical advice.

GPT is riding the highest peak of the Dunning-Kruger curve. It has no idea how little it really knows, so it just says whatever comes first. We’re still pretty far from having an AI capable of thinking before speaking.

Sounds like it's already capable of replacing middle managers though ^(/s)

I sure hope so. That would give me more time to get some interesting stuff done while an AI could handle all the boring administrative tasks I can’t seem to avoid.

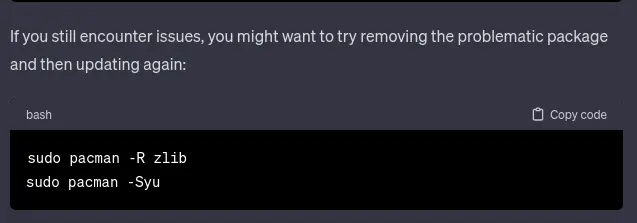

Why do people expect it to give perfect answers all the time? You should always question whatever it gives as an answer. It's not a truth machine, it's an inspiration machine. It can give you some paths to explore that you hadn't considered before. It probably isn't aware that zlib is a dependency for many other things, because that's extremely niche information. So it just gave you generic advice and an example on how to remove a package.
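
For anyone wondering why removing one small library is such a big deal, here is a rough sketch of the reverse-dependency problem. The package map below is a made-up toy (only the libpng-needs-zlib edge is something I'm sure of). On a real Arch system the data comes from pacman, and I believe `pacman -Qi zlib` ("Required By") or `pactree -r zlib` from pacman-contrib will show you the real list.

```python
from collections import deque

# Hypothetical, heavily simplified dependency map: package -> packages it needs.
# The names and edges are illustrative only, not real Arch package metadata.
DEPENDS_ON = {
    "zlib": [],
    "libpng": ["zlib"],
    "some-image-viewer": ["libpng"],
    "some-network-tool": ["zlib"],
    "some-editor": [],
}


def packages_broken_by_removing(target: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every package that directly or indirectly depends on target."""
    # Invert the map so we can walk from a package to the packages that need it.
    required_by: dict[str, set[str]] = {}
    for package, dependencies in depends_on.items():
        for dependency in dependencies:
            required_by.setdefault(dependency, set()).add(package)

    # Breadth-first walk over the "required by" edges.
    broken: set[str] = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for dependent in required_by.get(current, ()):
            if dependent not in broken:
                broken.add(dependent)
                queue.append(dependent)
    return broken
```

With this toy map, removing `zlib` takes out `libpng`, `some-network-tool`, and (through `libpng`) `some-image-viewer`, while `some-editor` survives. Generic "here's how to remove a package" advice skips exactly this step.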

I recently asked ChatGPT "what's a 5 letter word for a purple flower?" It confidently responded "Violet". It's no surprise it gets far more complex questions wrong.

To be fair, the genus that violets belong to is called Viola /j

These models do not see letters but tokens. For the model, "violet" is probably two symbols, "viol" and "et". Short of memorizing the number of letters in each token, the model has no way of knowing how many letters a word has.
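
A toy sketch of what that looks like from the model's side. The vocabulary and the ID numbers are invented for illustration, and the "viol" + "et" split is just the guess from above, not what any real tokenizer is known to do.

```python
# Hypothetical vocabulary: token text -> token ID. Real vocabularies are far larger
# and are learned from data; these entries are made up for the example.
TOY_VOCAB = {"viol": 1001, "et": 1002, "tulip": 1003}


def toy_tokenize(word: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match tokenizer: turn a word into a list of token IDs."""
    token_ids = []
    position = 0
    while position < len(word):
        # Try the longest remaining piece first, then shrink it.
        for end in range(len(word), position, -1):
            piece = word[position:end]
            if piece in vocab:
                token_ids.append(vocab[piece])
                position = end
                break
        else:
            raise ValueError(f"no token covers {word[position:]!r}")
    return token_ids
```

Here `toy_tokenize("violet", TOY_VOCAB)` gives `[1001, 1002]` and `toy_tokenize("tulip", TOY_VOCAB)` gives `[1003]`. The model only ever gets those ID lists, and nothing in `[1001, 1002]` says "six letters" or in `[1003]` says "five letters".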

This is also why the GPT family sucks at addition: their tokenizer has symbols for common numbers like 14. This means that to do 14 + 1, the model cannot use the knowledge that 4 + 1 is 5, because it cannot see the link between the token 4 and the token 14. The Llama tokenizer fixes this, so Llama models are much better at basic arithmetic even at much smaller sizes.
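
Roughly, the difference looks like this. Both functions are toy stand-ins and the set of multi-digit chunks is invented. This is a sketch of the idea, not either tokenizer's actual behaviour.

```python
def tokenize_number_greedy(text: str, multi_digit_tokens: set[str]) -> list[str]:
    """Longest-match split that prefers known multi-digit chunks such as '14'."""
    tokens = []
    position = 0
    while position < len(text):
        for end in range(len(text), position, -1):
            piece = text[position:end]
            # Accept a known chunk, or fall back to a single digit.
            if piece in multi_digit_tokens or end - position == 1:
                tokens.append(piece)
                position = end
                break
    return tokens


def tokenize_number_per_digit(text: str) -> list[str]:
    """Split a number into one token per digit."""
    return list(text)
```

With the made-up chunk set `{"14"}`, the greedy version turns "14" into the single token `["14"]`, which shares nothing with the token `"4"`. The per-digit version gives `["1", "4"]`, so the same `"4"` token shows up in both 4 + 1 and 14 + 1, and whatever the model learned about one can carry over to the other.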

I have had some luck asking it follow-up questions to explain what each line does. LLMs are decent at that and might even discover bugs.

You could also copy the conversation and paste it to another instance. It is much easier to critique than to come up with something, and this holds true for AI as well, so the other instance can give feedback like "I would have suggested x" or "be careful with commands like y"

This feels like a lot of hoops to jump through to avoid reading a wiki page thoroughly. But if you want to use GPT, this may work.

I’ve also tried that, but with mixed results. Generally speaking, GPT is too proud to admit its mistakes. Occasionally I’ve managed to successfully point out a mistake, but usually it just thinks I’m trying to gaslight it.

Asking follow-up questions works really well as long as you avoid turning it into a debate. When I notice that GPT is contradicting itself, I just keep that information to myself and make a mental note not to trust it. Trying to argue with someone like GPT is usually just an exercise in futility.

When you have some background knowledge in the topic you’re discussing, you can usually tell when GPT is going totally off the rails. However, you can’t dive into every topic out there, so using GPT as a shortcut is very tempting. That’s when you end up playing with fire, because you can’t really tell if GPT is pulling random nonsense out of its ass or if what it’s saying is actually based on something real.

AIs are always giving stupid information.

Shit be so dumb that I have to trick it into acknowledging that yes, removing x package or writing x C code = computer explode.

It’s even funnier when you start arguing with it about things like this. Sometimes GPT just refuses to acknowledge its mistakes and sticks to its guns even harder.