Linux



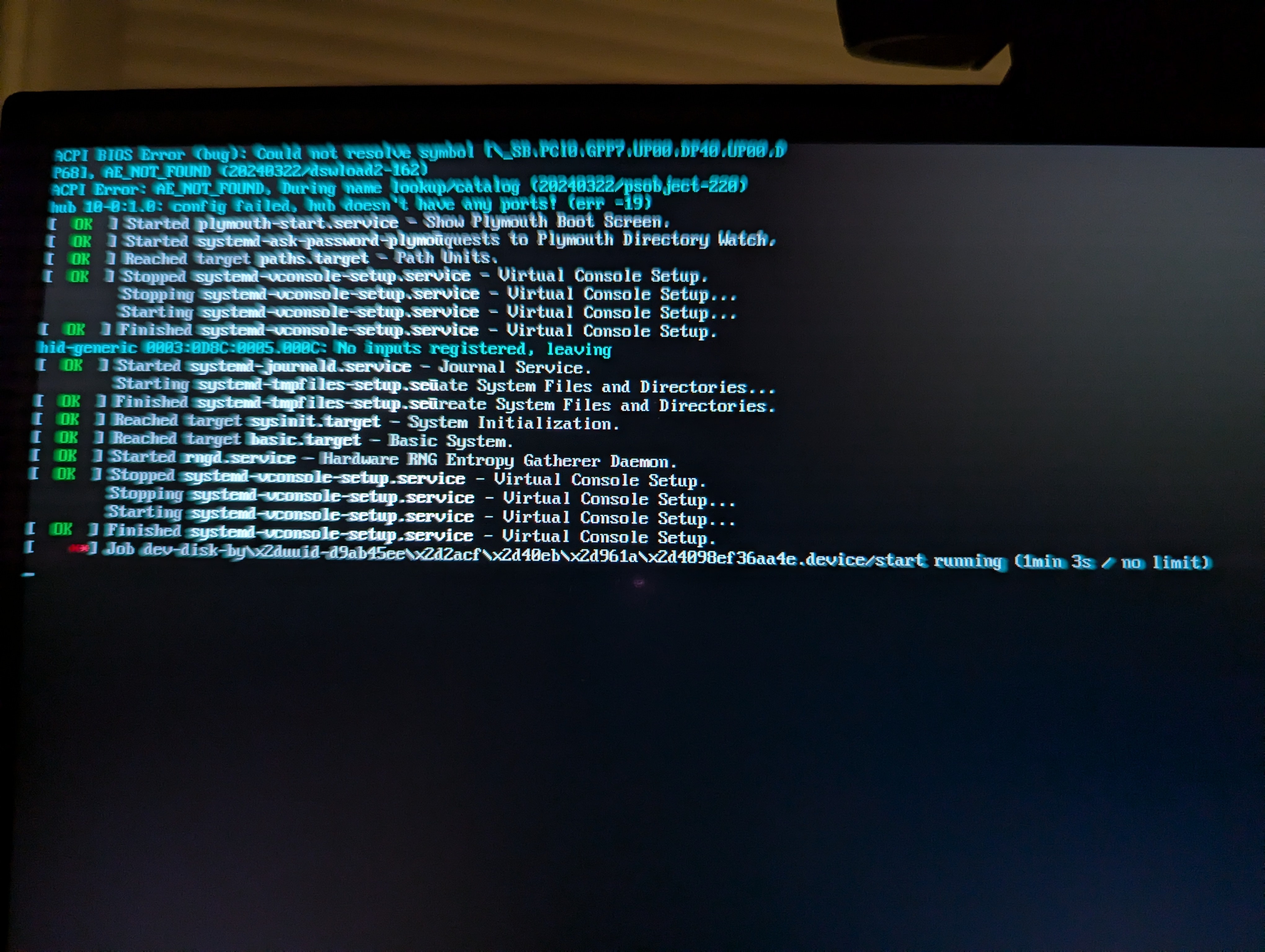

Probably the disk UUID has changed because of the path to the NVMe vs. the SSD. If you use the partition UUID, it will be exactly the same on both drives, but the UUID of the physical disk is not cloned, as it is an identifier of the physical device and not its content.

Change it to the partition UUID and it will boot.
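As a rough illustration of the suggestion above, and assuming the stale reference lives in /etc/fstab (the thread doesn't say which file was involved), an entry keyed on the partition UUID could look like what this sketch prints. The function name `fstab_root_line`, the ext4 default, and the mount options are made up for the example:

```shell
# Print an /etc/fstab entry that identifies the root filesystem by PARTUUID.
# $1 = PARTUUID value (as reported by lsblk or blkid)
# $2 = filesystem type; defaults to ext4, which is only an assumption here
fstab_root_line() {
  printf 'PARTUUID=%s / %s defaults 0 1\n' "$1" "${2:-ext4}"
}
```

The same `PARTUUID=` form can also be used on the kernel command line as `root=PARTUUID=...` if the bootloader entry is what points at the wrong identifier.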

Definitely second this. If you're using LVM, it uses the physical UUID for the PV. You have to update that on the new drive so it knows where the VG and LVs are being mounted to.

There wasn't any LVM involved, it's AFAIK pretty rare outside of MBR installs (as GPT typically lets you have more than enough partitions).

LVM is actually super common. Most Linux distros default to LVM unless you do custom partitioning. It's not just about the max number of partitions supported by the table. LVM provides a TON more flexibility and ease of management of partitions.

I haven't seen LVM in any recent Fedora (very high confidence), Debian (high confidence), or openSUSE (fairly confident) installations (just using the default options) on any system that's using GPT partition tables.

For RAID, I've only ever seen mdadm or ZFS (though I see LVM is an option for doing this as well, per the Arch Wiki). Snapshotting I normally see done either at the file system level with something like rsnapshot, kopia, restic, etc., or using a file system that supports snapshots, like btrfs or ZFS.

If you're still using MBR and/or completely disabling EFI via the "legacy boot loader" option or similar, then yeah they will use LVM ... but I wouldn't say that's the norm.

That's fair, I should have clarified that on most Enterprise Linux distros LVM is definitely the norm. I know Fedora switched to btrfs a few releases back, and you may be right about SUSE Tumbleweed, but I'm pretty sure SUSE Leap uses LVM. CentOS, RHEL, Alma, etc. all still default to LVM, since keeping everything on a single partition is a bad idea and managing multiple partitions is significantly easier with LVM. More than likely that'll change when btrfs has a little more mileage on it and is trusted as "enterprise ready", but for now LVM is the way they go. MBR vs GPT and EFI vs non-EFI don't have a lot to do with it though; it's more about the ease of managing multiple partitions (or subvolumes if you're used to btrfs), as having a single partition for root, var, and home is bad idea jeans.

That's fair, I did just check my Rocky Linux install and it does indeed use LVM.

So much stuff in this space has moved to hosted/cloud I didn't think about that.

So I fixed this by using Clonezilla (which seemed to fix things up automatically), but for my edification, how do you get the UUID of the device itself? The only UUIDs I was seeing were seemingly the partition UUIDs.

sorry for the late reply, the command 'lsblk' can output it:

"sudo lsblk -o +uuid,name"

check "man lsblk" to see all possible combinations if needed.

there is also 'blkid' but I'm unsure whether that package is installed by default on all Linux releases, so that's why I chose 'lsblk'

if 'blkid' is installed, the syntax would be:

"sudo blkid -s UUID -o value /dev/sda1"
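To put the different identifiers side by side, here is a minimal sketch. `UUID` (filesystem), `PARTUUID` (partition entry), and `PTUUID` (partition table, which is the closest thing to a UUID of "the device itself") are util-linux column/tag names, though older lsblk versions may not offer all of them. The function names `show_ids` and `get_tag` are made up for illustration:

```shell
# List filesystem UUID, partition UUID, and partition-table UUID per device.
# Run manually; values may be blank without sudo on some systems.
show_ids() {
  lsblk -o NAME,FSTYPE,UUID,PARTUUID,PTUUID "$@"
}

# Hypothetical helper: pull one tag out of a line of blkid's default output,
# e.g.  /dev/sda1: UUID="..." TYPE="ext4" PARTUUID="..."
# $1 = tag name (UUID, PARTUUID, ...), $2 = one line of blkid-style output
get_tag() {
  # Requiring whitespace before the tag keeps UUID from matching PARTUUID.
  printf '%s\n' "$2" | sed -n "s/.*[[:space:]]$1=\"\([^\"]*\)\".*/\1/p"
}
```

For example, `show_ids /dev/nvme0n1` lists one drive, and `get_tag PARTUUID "$(sudo blkid /dev/sda1)"` prints just the partition UUID (device names here are placeholders).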

glad you got it fixed, and hope this answers your question

(edit because of big thumbs and autocorrect...)

Also, remember that the old drive now shares its UUIDs with the NVMe drive (which is why I recommended using the partition UUID and not the disk UUID). You will have to create a new GPT signature on the old drive; otherwise, if both drives are connected at the same time during boot, you might run into boot issues or end up booting from the wrong drive.
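One possible way to do that without wiping the old drive is to randomize its GUIDs instead of recreating the partition table. That is a swapped-in alternative, not necessarily what was meant above. The sketch below only prints the commands so nothing is changed by accident; `plan_new_ids` is a made-up name, the device paths are placeholders, and the `tune2fs` line assumes an ext2/3/4 filesystem:

```shell
# Print (do not run) commands that would give the OLD drive fresh identifiers.
# $1 = old disk (e.g. /dev/sdX), $2 = an ext4 partition on it (e.g. /dev/sdX1)
plan_new_ids() {
  # New disk GUID and new unique GUIDs for every partition on the disk.
  echo "sgdisk --randomize-guids $1"
  # New filesystem UUID; ext2/3/4 only, other filesystems need their own tool.
  echo "tune2fs -U random $2"
}
```

Review the printed commands, double-check that they point at the old drive and not the new NVMe, and only then run them with sudo.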