Start by reading these two articles:

Ok, now that you've done that (hopefully in the order I posted them), I can begin.

I have always been a strong supporter of Open Source Software (OSS), so much so that all of my projects (yes, all) are OSS and fully open for anyone to use. With that, I knew my work could be used for good... and bad. I took that risk. But I also made sure to build things that weren't inherently bad in themselves. I didn't build anything unethical in my eyes (I understand the nuance here).

But I've seen what unethical devs can do.

Just take a look at those implementing the ModFascismBot for Reddit (that's not its name, but that's what it is). That is an incredibly unethical thing to build. Not because it's a private company controlling what they want their site to do; no, that's fine by me. Reddit can do whatever they want. But because it's an attempt to lie about reality, to force users to do something through manipulation rather than honesty. Even subreddits that voted overwhelmingly to shut down still got messaged by the bot, telling the mods that their users (who had voted for the shutdown) didn't want it and that they had to open back up or be removed from their mod positions. This is not ethical. This is not right. This is not what the internet is about.

Or the unethical devs at Twitter, who:

It's one thing for an organization to have a political lean... that is just a part of life, and it will never end. It's another to actually sow disinformation in order to accomplish nefarious things to further your profits. That is what has caused massive addiction to tobacco, the continuation of climate change, death and disfigurement from forever chemicals, ovarian cancer and mesothelioma from undisclosed exposure to asbestos, and the selling of 'health products' that claim to cure everything under the sun, but can "interfere with clinical lab tests, such as those used to diagnose heart attacks".

Please do not confuse this with saying that companies shouldn't be able to sell things and make a profit. If you want to sell someone something that kills them if they misuse it and you market it as such, you go for it. That's literally how every product in the cleaning aisle of your grocery store works. That's how guns work, that's how fertilizers work, that's why we have labels. But manipulation for profit is unethical, and that's why companies hide it. It hurts their bottom line. They know that their products will not be used if they reveal the truth. Instead of doing something good for humanity, they choose the subterfuge. Profits over people. Profits over Earth, honestly. Profits over continuing the human race. Absolutely nothing matters to companies like this. And unethical developers enable this.

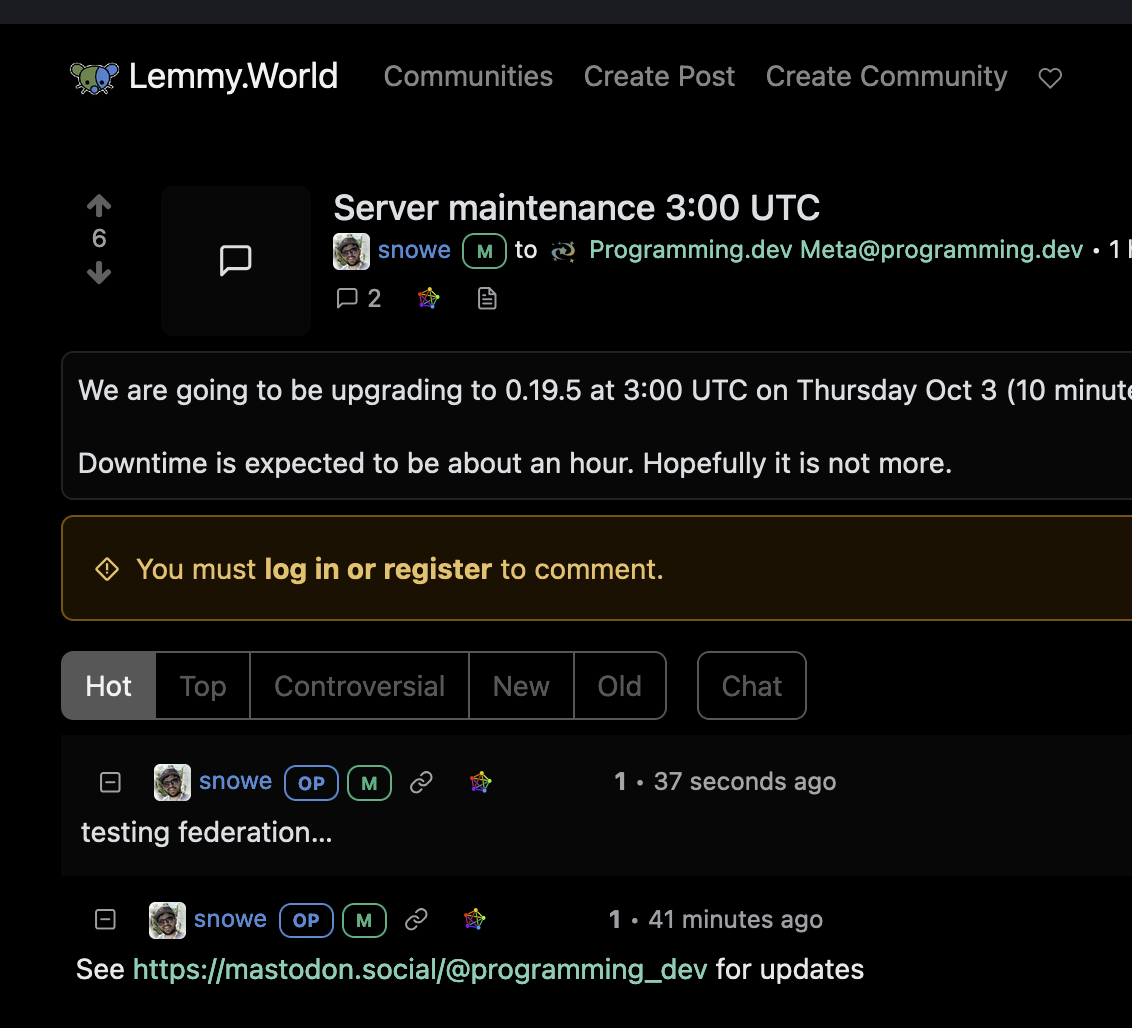

Facebook (ok, fine, Meta, still going to refer to them as FB though) is trying to join the Fediverse. We as a community, but honestly each of you as individuals, have a decision to make. Do they stay or do they go? Let's put some information on the table.

Facebook...

- lies about the amount of misinformation it removes [^1]

- increased censorship of 'anti-state' posts [^1][^2][^3]

- lied to Congress about social networks polarizing people, while FB's own researchers found that they do [^2]

- attempted to attract preteens to the platform (huh, wonder where all that "you must be 13" stuff went) [^4]

- rewards outrage and discord [^3]

Facebook also...

- allows for checking on friends and family in disasters [^6]

- created and maintained some of the most popular open source software on the planet (including the software that runs the interface you're looking at right now) [^7][^8]

From my perspective... there's not much good about FB. It has single-handedly caused the deaths of tens of thousands of people across the planet, if not hundreds of thousands. It continually makes people angrier and angrier. It's a launching pad for scammers, thieves, malevolent malefactors, manipulators, and dictators to push their conquests onto the world through manipulation, lies, tricks, and deceit. Its algorithms foster an echo chamber effect, exacerbating division and animosity, making civil discourse and mutual understanding all but impossible. Instead of being a platform for connection, it often serves as a catalyst for discord and misinformation. FB's propensity for prioritizing user engagement over factual accuracy has resulted in a global maelstrom of confusion and mistrust. Innocent minds are drawn into this vortex, manipulated by fear and falsehoods, consequently promoting harmful actions and beliefs. Despite its potential to be a tool for good, it is more frequently wielded as a weapon, sharpened by unscrupulous entities exploiting its vast reach and influence. The promise of a globally connected community seems to be overshadowed by its darker realities.

As a person, I believe that we need to choose things as a community. I do not believe in the 'BDFL', the Benevolent Dictator For Life. Graydon Hoare, creator of Rust, wrote an article just recently about how things would have been different if they had stayed BDFL of Rust. From my position, the BDFLs we currently have on this planet really suck. Not just politically, but even in tech. I don't think that path is good for society. It might work in specific circumstances, but it usually fails, and when it does, people get hurt. Badly.

So, with that in mind, I've been working on a polling feature for Lemmy. I seriously doubt I'll be done with it soon, but hopefully FB takes a while longer to implement federation. I understand there's a desire for me or the other admins to just make a decision, but I really don't like doing that. If it comes down to it, I will implement defederation to start with, but I will still be holding a vote as soon as I can get this damn feature done.

[^8]: The website actually uses Inferno, but from what I can tell it was forked directly from React, judging from the actual documentation and references in the repo.

When the official docs are telling you to use it, then it's used. You can't expect people to think the tooling isn't shit when it's literally the official recommendation.