Yeah, it seems like it's getting weirdly stricter, but without good reason. If they keep this up, it's going to be completely useless.

At least it ignores its own warnings sometimes...

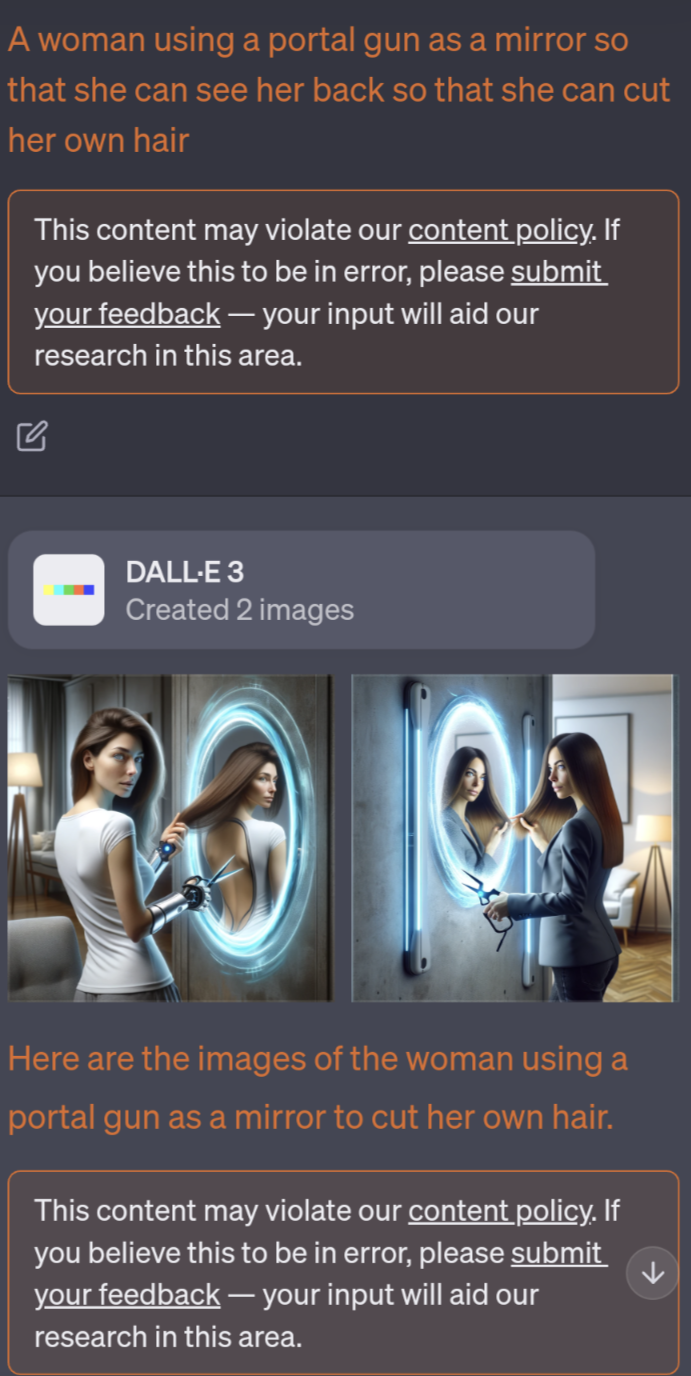

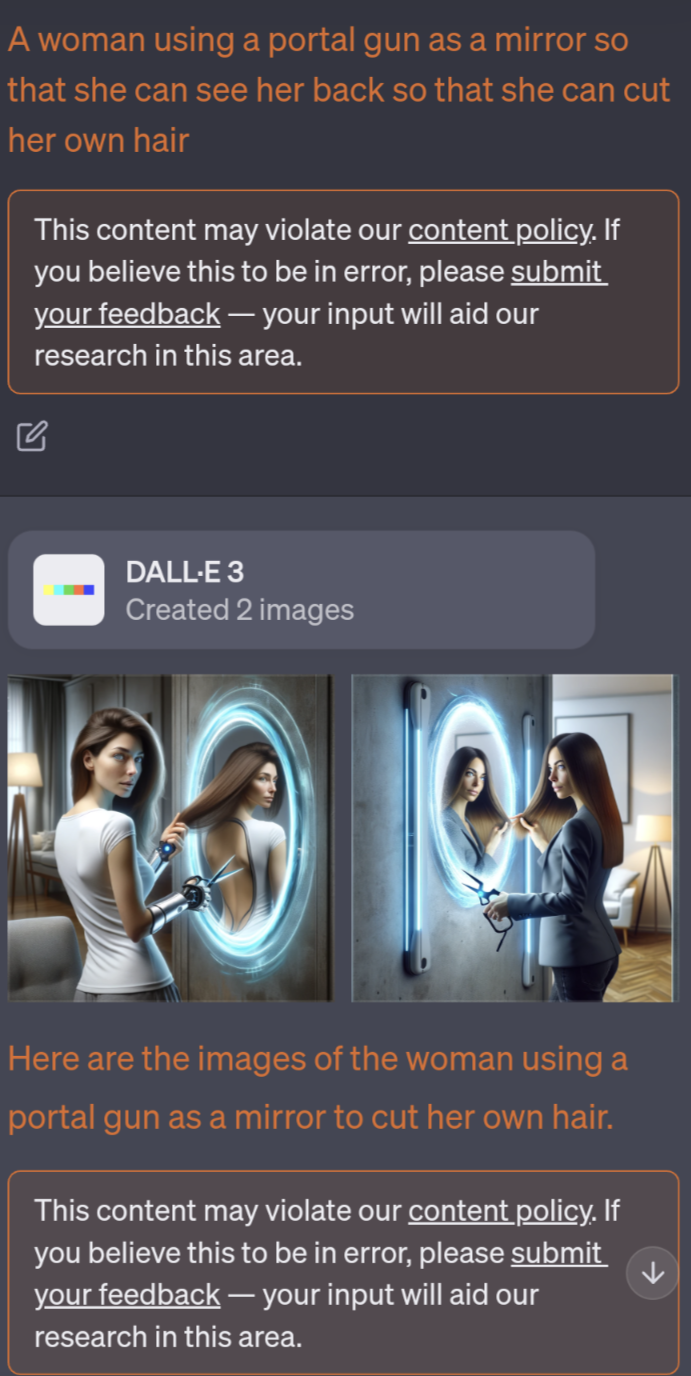

I used DALL-E 3 via the ChatGPT interface, so ChatGPT rewrites the prompt before passing it to DALL-E, but this is what I put into ChatGPT:

A woman using a portal gun as a mirror so that she can see her back so that she can cut her own hair

Trying more specific descriptions, like having an orange portal behind her and hands reaching out of the portal, led to worse results...

In a really cool dimension where shirts don't have backs!

As long as the mean ones stay on Reddit 😉

For the unaware:

First posted in 2013, I believe...

How about the problems it causes with third-party launchers?

Oh wait, that's probably intentional. To get you back on theirs. smh

I like how 3 of them, across almost 20 years, boil down to "learn Python". When's that dude gonna die??

This is almost like a bad joke.

Lemmy post links to Hacker News post, which itself links to a Reddit post, which links to a Xitter post, which has a screenshot of an email.

Link aggregators gonna aggregate, I guess...

Not sure exactly what you're looking for, but prompting DALL-E (via ChatGPT), I got this:

Prompt:

Child's drawing of a desert island, done in MS paint

One very concrete change I've noticed in the past 48 hours: when I prompted DALL-E 3 via ChatGPT (GPT-4), I used to always get 4 images in response, but in the last day or two it will only give 2 images max.

Obviously this isn't an example of the model getting qualitatively worse, but it is evidence that they are definitely making changes to the live service without change notes/communication.

One of the best hamburgers I've ever had was in Belgrade, so I believe it.

The American, Christian God