cross-posted from: https://lemmy.world/post/6399678

🤖 Happy FOSAI Friday! 🚀

Friday, October 6, 2023

HyperTech News Report #0003

Hello Everyone!

This week highlights a wave of new papers and frameworks that expand upon LLM functionalities. With a tsunami of applications on the horizon, I foresee a bedrock of tools arriving first. I'm not sure which kits and processes will end up part of this bedrock, but I hope some of these methods prove interesting or helpful to your workflow!

Community Changelog

Image of the Week

This image of the week comes from one of my own projects! I hope you don't mind me sharing. I was really happy with this result. It was generated from an SDXL model I trained and host on Replicate. I use a mock ensemble approach to generate various game assets for an experimental roguelike I'm making with a colleague.

My current method is not at all efficient, but I have fun. Right now, I have three SDXL models I interact with, each generating art I can use for my project. Andraxus takes care of wallpapers and in-game levels (this image you're seeing here), his in-game companion Biazera imagines characters and entities of this world, while Cerephelo tinkers and toils over the machinations within - crafting items, loot, powerups, etc.

I've been hesitant to self-promote here, but if there's genuine interest in this project I would be more than happy to share more details. It's still in pre-alpha development, but we plan to release all of the models we use as open-source (obviously). We're still working on the engine though. Let me know if you want to see more on this project.

News

Arxiv Publications Workflow: A new workflow has been introduced that allows users to scrape search topics from Arxiv, converting the results into markdown (MD) format. This makes it easier to digest and understand topics from Arxiv published content. The tool, available on GitHub, is particularly useful for those who wish to delve deeper into research papers and run their own research processes.

Texting LLMs from Your Phone: A guide has been shared that enables users to communicate with their personal assistants via simple text messages. The process involves setting up a Twilio account, purchasing and registering a phone number, and then integrating it with the Replicate platform. The code, available on GitHub, makes it possible to send and receive messages from LLMs directly on one's phone.

Microsoft's AutoGen: Microsoft has released AutoGen, a tool designed to aid in the creation of autonomous LLM agents. Compatible with ChatGPT models, AutoGen facilitates the development of LLM applications using multiple agents that can converse with each other to solve tasks. The framework is customizable and allows for seamless human participation. More details can be found on GitHub.

Promptbench and ACE Framework: Promptbench is a new project focused on the evaluation and benchmarking of models. Stemming from the DyVal paper, it aims to provide reliable insights into model performance. On the other hand, the ACE Framework, designed for autonomous cognitive entities, offers a unique approach to agent tooling. While still in its early stages, it promises to bring about innovative implementations in the realms of personal assistants, game world NPCs, autonomous employees, and embodied robots.

Research Highlights: Several papers have been published that delve into the intricacies of LLMs. One paper introduces a method to enhance the zero-shot reasoning abilities of LLMs, while another, titled DyVal, proposes a dynamic evaluation protocol for LLMs. Additionally, the concept of Low-Rank Adapters (LoRA) ensembles for LLM fine-tuning has been explored, emphasizing the potential of using one model and dynamically swapping the fine-tuned QLoRA adapters.

Tools & Frameworks

Keep Up w/ Arxiv Publications

Due to a drastic change in personal and work schedules, I've had to shift how I research and develop posts and projects for you guys. That being said, I found this workflow from the author of the ACE Framework particularly helpful. It scrapes a search topic from Arxiv and returns a massive XML that is converted to markdown (MD). The result can then be used as an injectable context report for an LLM of your choosing (to further break down and understand topics) or as a well of information for the classic CTRL + F search. Either way, at this point the info from Arxiv published content is aggregated and human readable.

After reading the abstracts you can further drill into each paper and dissect / run your own research processes as you see fit. There is definitely more room for automation and organization here I'm sure, but this has been a big resource for me lately so I wanted to share it for others who might find it helpful too.
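To make the XML-to-markdown step concrete, here is a minimal sketch in Python. It is not the code from the workflow mentioned above. It assumes the public arXiv API, which returns an Atom XML feed, and the function names and the sample feed (including the made-up paper entry) are hypothetical.

```python
import urllib.parse
import xml.etree.ElementTree as ET

# Namespace used by the Atom feed that the arXiv API returns.
ATOM = "{http://www.w3.org/2005/Atom}"


def build_query_url(topic, max_results=5):
    """Build an arXiv API search URL for a free-text topic."""
    params = urllib.parse.urlencode({
        "search_query": f"all:{topic}",
        "start": 0,
        "max_results": max_results,
    })
    return f"http://export.arxiv.org/api/query?{params}"


def atom_to_markdown(xml_text):
    """Convert an Atom feed into one markdown section per paper."""
    root = ET.fromstring(xml_text)
    sections = []
    for entry in root.findall(f"{ATOM}entry"):
        # Titles and abstracts contain hard line breaks; collapse the whitespace.
        title = " ".join(entry.findtext(f"{ATOM}title", default="").split())
        summary = " ".join(entry.findtext(f"{ATOM}summary", default="").split())
        link = entry.findtext(f"{ATOM}id", default="").strip()
        authors = ", ".join(
            author.findtext(f"{ATOM}name", default="").strip()
            for author in entry.findall(f"{ATOM}author")
        )
        sections.append(
            f"## {title}\n\n- Authors: {authors}\n- Link: {link}\n\n{summary}\n"
        )
    return "\n".join(sections)


# Made-up feed so the sketch runs offline; a real feed would be fetched
# from build_query_url(...) with urllib.request.urlopen.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <id>http://arxiv.org/abs/0000.00000v1</id>
    <title>A Made-Up
      Paper Title</title>
    <summary>  First line of the abstract.
      Second line.  </summary>
    <author><name>Ada Example</name></author>
    <author><name>Bob Example</name></author>
  </entry>
</feed>"""

if __name__ == "__main__":
    print(build_query_url("LoRA ensembles", max_results=3))
    print(atom_to_markdown(SAMPLE_FEED))
```

The resulting markdown can be pasted into an LLM prompt as context or saved to a file for CTRL + F searching.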

Text LLMs from Your Phone

I had an itch to make my personal assistants more accessible - so I started investigating ways I could simply text them from my iPhone (via plain SMS). There are many other ways I could've done this, but texting is something I always like to default to in communications. So, I found this cool guide that uses infra I already prefer (Replicate) and has a bonus LangChain integration - which opens the door to a ton of other opportunities down the line.

This tutorial was pretty straightforward - but to be honest, making the Twilio account, buying a phone number (then registering it) took the longest. The code itself takes less than 10 minutes to get up and running with ngrok. Super simple and straightforward there. The Twilio process? Not so much.. but it was worth the pain!

I am still waiting on my phone number to be verified (so that the Replicate inference endpoint can actually send SMS back to me), but I ended the night successfully texting the server on my local PC. It was wild texting the Ahsoka example from my phone and seeing the POST response return (even though the reply didn't go through SMS, I could still see the server successfully receive my incoming message/prompt). I think there's a lot of fun to be had giving casual phone numbers and personalities to assistants like this, especially if you want to LangChain some functions beyond just the conversation. If there's more interest in this topic, I can share how my assistant evolves once it gets full access to return SMS. I am designing this to streamline my personal life, and if it proves to be useful I will absolutely release the project as open-source.
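For anyone curious what the server side of this looks like, here is a minimal sketch of the webhook logic, not the guide's actual code. It relies on two things Twilio does: it POSTs incoming messages as form-encoded fields (including `From` and `Body`), and it accepts a TwiML `<Response><Message>` document as the reply. The `generate_reply` function is a hypothetical stand-in for the model call (Replicate, LangChain, or anything else).

```python
from urllib.parse import parse_qs
from xml.sax.saxutils import escape


def generate_reply(prompt):
    """Hypothetical stand-in for the LLM call; it just echoes the prompt."""
    return f"You said: {prompt}"


def handle_incoming_sms(form_body):
    """Turn Twilio's form-encoded webhook body into a TwiML reply.

    Returns the sender's number and the XML document to send back.
    """
    fields = parse_qs(form_body)
    sender = fields.get("From", [""])[0]
    prompt = fields.get("Body", [""])[0]
    reply = generate_reply(prompt)
    # Escape the reply so characters like & and < stay valid inside the XML.
    twiml = (
        '<?xml version="1.0" encoding="UTF-8"?>'
        f"<Response><Message>{escape(reply)}</Message></Response>"
    )
    return sender, twiml


if __name__ == "__main__":
    sender, twiml = handle_incoming_sms("From=%2B15555550100&Body=Hello+%26+hi")
    print(sender)
    print(twiml)
```

In a real setup this function would sit behind a small web server route exposed through ngrok, with the returned TwiML sent back as the HTTP response body.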

AutoGen

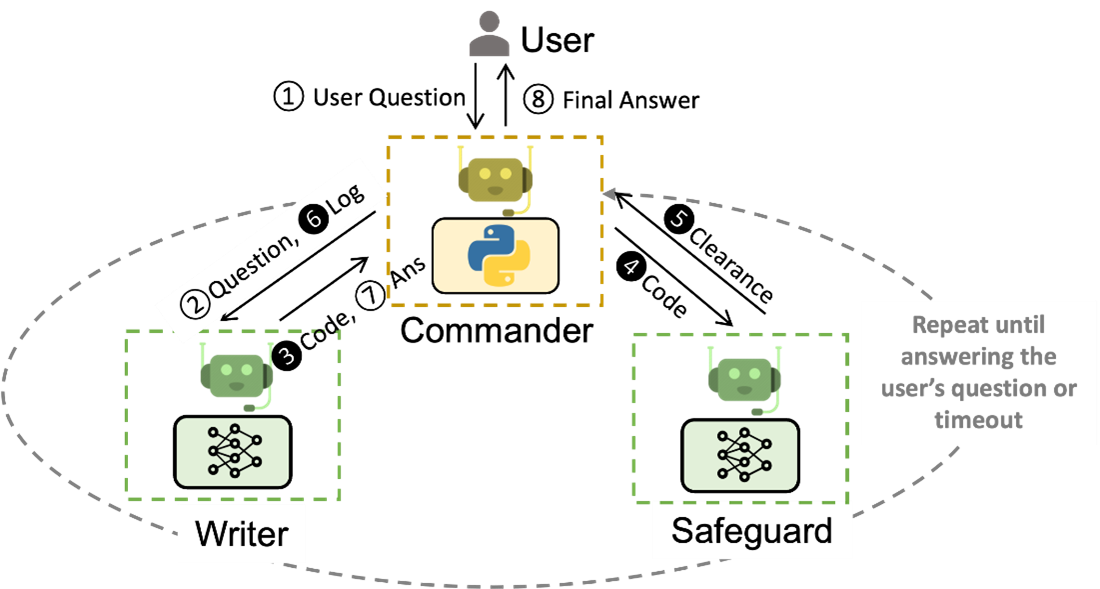

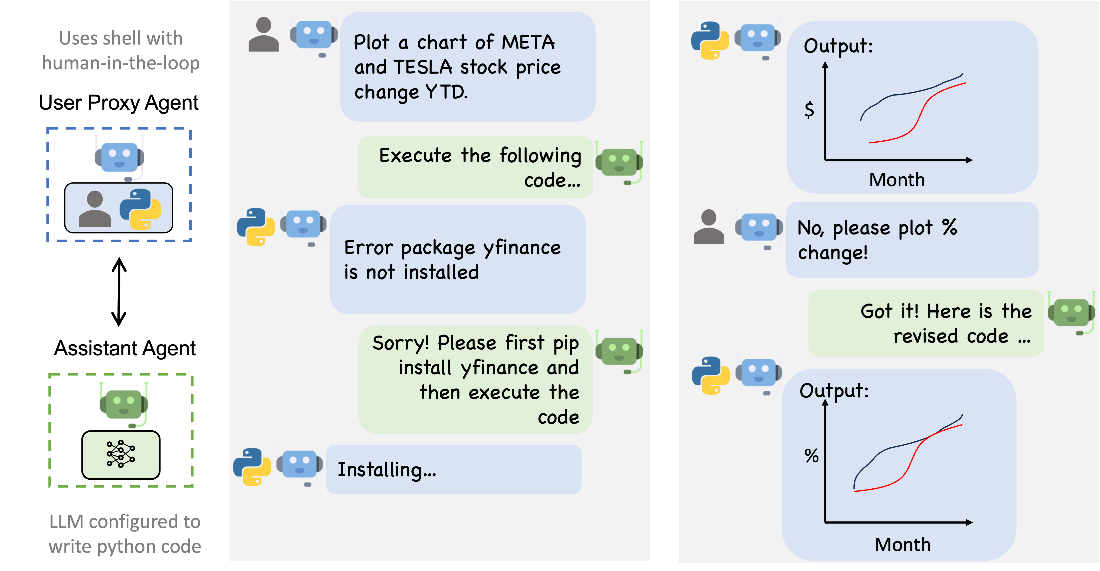

With Agents on the rise, the tools and automation pipelines used to build them have become increasingly important to consider. It seems like Microsoft is well aware of this, and thus released AutoGen, a tool to help automate the creation of autonomous LLM agents. AutoGen is compatible with ChatGPT models and is being kitted for local LLMs as we speak.

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.
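To illustrate the idea of agents conversing with each other to solve a task, here is a toy sketch in plain Python. This is not AutoGen's API. The `Agent` class, `run_chat` function, and the `TERMINATE` stop word are all hypothetical, and each agent's `respond` callable stands in for whatever would really produce a reply (an LLM, a tool, or a human typing).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Agent:
    name: str
    # Stand-in for an LLM call, a tool, or human input.
    respond: Callable[[str], str]


def run_chat(first: Agent, second: Agent, opening: str,
             max_turns: int = 6, stop_word: str = "TERMINATE") -> List[Tuple[str, str]]:
    """Alternate messages between two agents until one says the stop word."""
    transcript = [(first.name, opening)]
    speaker, listener = second, first
    message = opening
    for _ in range(max_turns):
        message = speaker.respond(message)
        transcript.append((speaker.name, message))
        if stop_word in message:
            break
        # Swap roles so the other agent answers next.
        speaker, listener = listener, speaker
    return transcript


# A canned "assistant" and a "user" that approves any answer containing 4.
assistant = Agent("assistant", lambda msg: "2 + 2 = 4")
user = Agent(
    "user",
    lambda msg: "Confirmed. TERMINATE" if "4" in msg else "Please try again.",
)

if __name__ == "__main__":
    for name, text in run_chat(user, assistant, "What is 2 + 2?"):
        print(f"{name}: {text}")
```

Swapping a lambda for a function that prompts a person on the command line is one way to picture the human participation mode described above.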

Promptbench

I recently found promptbench - a project that seems to have stemmed from the DyVal paper (shared below). I for one appreciate some of the new tools being released that focus on the evaluation and benchmarking of models. I hope we continue to see more evals, benchmarks, and projects that return us insights we can rely upon.

ACE Framework

A new framework has been proposed and designed for autonomous cognitive entities. This appears similar to agents and their style of tooling, but with a different architecture approach? I don't believe implementation of this is ready, but it may be soon and something to keep an eye on.

There are many possible implementations of the ACE Framework. Rather than detail every possible permutation, here is a list of categories that we perceive as likely and viable.

Personal Assistant and/or Companion

- This is a self-contained version of ACE that is intended to interact with one user.

- Think of Cortana from HALO, Samantha from HER, or Joi from Blade Runner 2049. (yes, we recognize these are all sexualized female avatars)

- The idea would be to create something that is effectively a personal Executive Assistant that is able to coordinate, plan, research, and solve problems for you. This could be deployed on mobile, smart home devices, laptops, or web sites.

Game World NPCs

- This is a kind of game character that has their own personality, motivations, agenda, and objectives. Furthermore, they would have their own unique memories.

- This can give NPCs a much more realistic ability to pursue their own objectives, which should make game experiences much more dynamic and unpredictable, thus raising novelty. These can be adapted to 2D or 3D game engines such as PyGame, Unity, or Unreal.

Autonomous Employee

- This is a version of the ACE that is meant to carry out meaningful and productive work inside a corporation.

- Whether this is a digital CSR or backoffice worker depends on the deployment.

- It could also be a "digital team member" that primarily interacts via Discord, Slack, or Microsoft Teams.

Embodied Robot

- The ACE Framework is ideal to create self-contained, autonomous machines, whether they are domestic aid robots or something like WALL-E.

Papers

Agent Instructs Large Language Models to be General Zero-Shot Reasoners

We introduce a method to improve the zero-shot reasoning abilities of large language models on general language understanding tasks. Specifically, we build an autonomous agent to instruct the reasoning process of large language models. We show this approach further unleashes the zero-shot reasoning abilities of large language models to more tasks. We study the performance of our method on a wide set of datasets spanning generation, classification, and reasoning. We show that our method generalizes to most tasks and obtains state-of-the-art zero-shot performance on 20 of the 29 datasets that we evaluate. For instance, our method boosts the performance of state-of-the-art large language models by a large margin, including Vicuna-13b (13.3%), Llama-2-70b-chat (23.2%), and GPT-3.5 Turbo (17.0%). Compared to zero-shot chain of thought, our improvement in reasoning is striking, with an average increase of 10.5%. With our method, Llama-2-70b-chat outperforms zero-shot GPT-3.5 Turbo by 10.2%.

DyVal: Graph-informed Dynamic Evaluation of Large Language Models

Large language models (LLMs) have achieved remarkable performance in various evaluation benchmarks. However, concerns about their performance are raised on potential data contamination in their considerable volume of training corpus. Moreover, the static nature and fixed complexity of current benchmarks may inadequately gauge the advancing capabilities of LLMs. In this paper, we introduce DyVal, a novel, general, and flexible evaluation protocol for dynamic evaluation of LLMs. Based on our proposed dynamic evaluation framework, we build graph-informed DyVal by leveraging the structural advantage of directed acyclic graphs to dynamically generate evaluation samples with controllable complexities. DyVal generates challenging evaluation sets on reasoning tasks including mathematics, logical reasoning, and algorithm problems. We evaluate various LLMs ranging from Flan-T5-large to ChatGPT and GPT4. Experiments demonstrate that LLMs perform worse in DyVal-generated evaluation samples with different complexities, emphasizing the significance of dynamic evaluation. We also analyze the failure cases and results of different prompting methods. Moreover, DyVal-generated samples are not only evaluation sets, but also helpful data for fine-tuning to improve the performance of LLMs on existing benchmarks. We hope that DyVal can shed light on the future evaluation research of LLMs.
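To get a feel for what "dynamically generated evaluation samples with controllable complexity" can mean, here is a toy sketch. It is not the DyVal implementation. It assumes a simplified setup where each sample is a random arithmetic expression tree (a tree being a special case of a directed acyclic graph), `depth` is the complexity knob, and the ground-truth answer is computed from the same structure. All names are hypothetical.

```python
import random

# Binary operations allowed at internal nodes of the expression tree.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


def make_node(depth, rng):
    """Build a random full binary expression tree; leaves are integers 1-9."""
    if depth == 0:
        return rng.randint(1, 9)
    op = rng.choice(sorted(OPS))
    return (op, make_node(depth - 1, rng), make_node(depth - 1, rng))


def evaluate(node):
    """Compute the ground-truth value by walking the tree bottom-up."""
    if isinstance(node, int):
        return node
    op, left, right = node
    return OPS[op](evaluate(left), evaluate(right))


def render(node):
    """Render the tree as a fully parenthesized expression string."""
    if isinstance(node, int):
        return str(node)
    op, left, right = node
    return f"({render(left)} {op} {render(right)})"


def make_sample(depth, seed):
    """Generate one question/answer pair; a higher depth means a harder question."""
    rng = random.Random(seed)
    tree = make_node(depth, rng)
    return {"question": f"What is {render(tree)}?", "answer": evaluate(tree)}


if __name__ == "__main__":
    for depth in (1, 2, 3):
        print(make_sample(depth, seed=depth))
```

Because every sample is generated fresh from a seed, the exact questions are unlikely to appear in a training corpus, which is the contamination concern the abstract raises about static benchmarks.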

LoRA ensembles for large language model fine-tuning

Finetuned LLMs often exhibit poor uncertainty quantification, manifesting as overconfidence, poor calibration, and unreliable prediction results on test data or out-of-distribution samples. One approach commonly used in vision for alleviating this issue is a deep ensemble, which constructs an ensemble by training the same model multiple times using different random initializations. However, there is a huge challenge to ensembling LLMs: the most effective LLMs are very, very large. Keeping a single LLM in memory is already challenging enough: keeping an ensemble of e.g. 5 LLMs in memory is impossible in many settings. To address these issues, we propose an ensemble approach using Low-Rank Adapters (LoRA), a parameter-efficient fine-tuning technique. Critically, these low-rank adapters represent a very small number of parameters, orders of magnitude less than the underlying pre-trained model. Thus, it is possible to construct large ensembles of LoRA adapters with almost the same computational overhead as using the original model. We find that LoRA ensembles, applied on its own or on top of pre-existing regularization techniques, gives consistent improvements in predictive accuracy and uncertainty quantification.

There is something to be discovered between LoRA, QLoRA, and ensemble/MoE designs. I am digging into this niche because of an interesting bit I heard from sentdex (if you want to skip to the part I'm talking about, go to 13:58). Around the 15:00 mark he brings up QLoRA adapters (nothing new), but his approach was interesting.

He eventually shares that he is working on a QLoRA ensemble approach with skunkworks (I'm not sure which group he means by that). This confirmed my suspicion. Better yet - he shared his thoughts on how all of this could be done. Watch and support his video for more insights, but the idea boils down to using one model and dynamically swapping the fine-tuned QLoRA adapters. I think this is a highly efficient and underexplored approach, especially in that MoE and ensemble realm of design. If you're reading this and understood anything I said - get to building! This is a seriously interesting idea that could yield positive results. I will share my findings when I find the time to dig into this more.
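Here is a toy sketch of the "one base model, swappable adapters" idea in plain Python, under heavy simplifying assumptions: the "model" is a single weight matrix, each adapter is a rank-1 pair of matrices A and B, and the layer output is y = W x + B (A x). Quantization (the Q in QLoRA) is left out entirely. This is not sentdex's method or the paper's code, and the adapter names and numbers are made up.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(base_w, adapter, x):
    """y = W x + B (A x): frozen base weights plus a low-rank update."""
    a, b = adapter
    base_out = matvec(base_w, x)
    delta = matvec(b, matvec(a, x))
    return [y + d for y, d in zip(base_out, delta)]


def ensemble_forward(base_w, adapters, x):
    """Average the outputs obtained by swapping in each adapter in turn."""
    outputs = [lora_forward(base_w, adapter, x) for adapter in adapters.values()]
    return [sum(values) / len(outputs) for values in zip(*outputs)]


# One shared 2x2 base matrix, kept in memory once.
BASE_W = [[1, 0],
          [0, 1]]

# Two hypothetical rank-1 adapters: A is 1x2, B is 2x1.
ADAPTERS = {
    "math": ([[1, 0]], [[1], [0]]),
    "code": ([[0, 1]], [[0], [1]]),
}

if __name__ == "__main__":
    x = [2, 3]
    for name, adapter in ADAPTERS.items():
        print(name, lora_forward(BASE_W, adapter, x))
    print("ensemble", ensemble_forward(BASE_W, ADAPTERS, x))
```

Only the small A and B matrices differ between adapters, so switching "experts" means swapping a few parameters instead of loading another full model.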

Author's Note

This post was authored by the moderator of [email protected] - Blaed. I make games, produce music, write about tech, and develop free open-source artificial intelligence (FOSAI) for fun. I do most of this through a company called Hyperion Technologies a.k.a. HyperTech or HYPERION - a sci-fi company.

Thanks for Reading!

This post was written by a human. For other humans. About machines. Who work for humans for other machines. At least for now... if you found anything about this post interesting, consider subscribing to [email protected] where you can join us on the journey into the great unknown!

Until next time!

Blaed

I could not agree more. I really enjoy Andrej Karpathy’s model where in the future AGI does 99% of the technical work and the human in the loop does the creative and critical 1%.