this post was submitted on 05 Jul 2023

Stable Diffusion

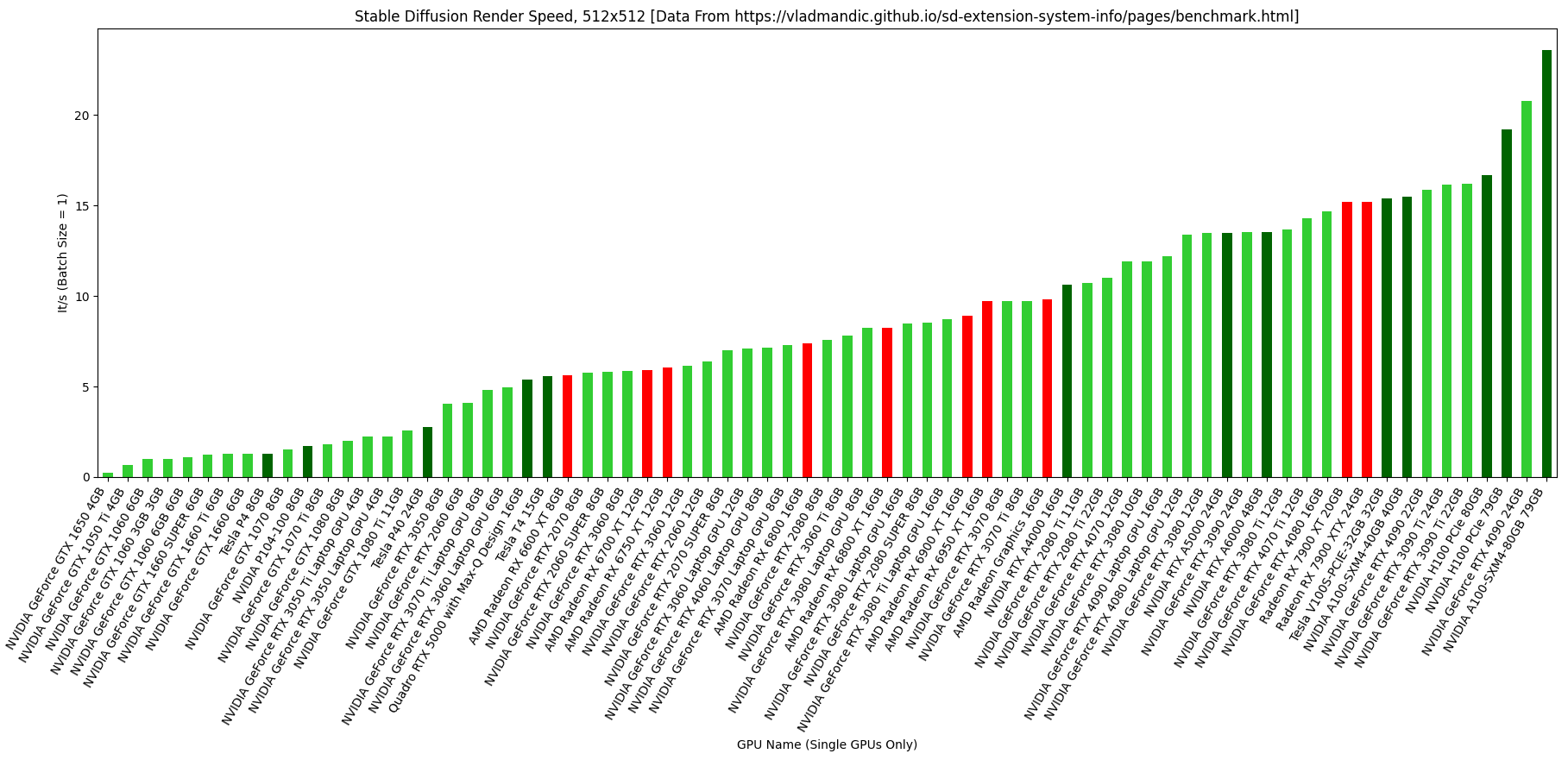

It is very helpful to see this graph with laptop options included. Thank you!

Is it correct to call this a graph of the empirically measured, parallel computational power of each unit based on the state of software at the time of testing?

Also, does the total available VRAM only determine the practical maximum resolution? Does this apply to both image synthesis and upscaling in practice?

I imagine thermal throttling is a potential issue that could alter results.

The link in the header post includes the methodology used to gather the data, but I don't think it fully answers your first question. I imagine there are a few important variables, and a few ways to get different results on otherwise identical hardware (e.g., running unusual software versions, or selecting the wrong optimizations or none at all). I tried to mitigate that by using medians and only including cards with at least 5 samples, but it's certainly not perfect. At least the results seem to trend the way you'd expect.
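The aggregation described above (group runs by card, drop cards with fewer than 5 samples, take the median) can be sketched in Python like this. It's an illustrative sketch, not the script actually used for the graph; the record keys `gpu` and `its` (iterations per second) and the sample rows are hypothetical and may not match the real dataset's columns.

```python
from collections import defaultdict
from statistics import median

MIN_SAMPLES = 5  # only report cards with at least this many benchmark runs


def median_speed_by_gpu(records, min_samples=MIN_SAMPLES):
    """Return {gpu: median it/s} for every GPU with at least min_samples runs.

    Assumes each record is a dict with hypothetical keys "gpu" and "its"
    (iterations per second); the real dataset's field names may differ.
    """
    samples = defaultdict(list)
    for rec in records:
        samples[rec["gpu"]].append(rec["its"])
    return {
        gpu: median(speeds)
        for gpu, speeds in samples.items()
        if len(speeds) >= min_samples
    }


if __name__ == "__main__":
    # Made-up runs: one badly configured run (2.0) barely moves the median.
    runs = [{"gpu": "Card A", "its": s} for s in (10.0, 11.0, 9.5, 2.0, 10.5)]
    runs.append({"gpu": "Card B", "its": 20.0})  # only 1 sample: dropped
    for gpu, speed in median_speed_by_gpu(runs).items():
        print(f"{gpu}: {speed:.2f} it/s")
```

The median is the part doing the work here: in the made-up data, the one slow run drags the mean for Card A down to 8.6 it/s, while the median stays at 10.0 it/s.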

I'm really glad vladmandic made the extension and data available. It was super tough to find a graph like this that was even remotely up to date. I may try filtering by other criteria in the near future, such as optimization method, benchmark age (e.g., excluding results from before 2023), or VRAM amount.
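The filters mentioned above could be applied to the runs before computing per-card medians. Here is a minimal sketch assuming hypothetical field names (`date`, `optimization`, `vram_gb`) and made-up sample rows; the real schema may differ, and the optimization labels are only example values.

```python
from datetime import date


def filter_runs(records, since=None, optimization=None, min_vram_gb=None):
    """Keep runs that pass every filter that is set; None disables a filter.

    Assumes hypothetical record keys "date" (ISO "YYYY-MM-DD" string),
    "optimization", and "vram_gb"; the real dataset's fields may differ.
    """
    kept = []
    for rec in records:
        if since is not None and date.fromisoformat(rec["date"]) < since:
            continue  # benchmark is older than the cutoff
        if optimization is not None and rec["optimization"] != optimization:
            continue  # run used a different optimization method
        if min_vram_gb is not None and rec["vram_gb"] < min_vram_gb:
            continue  # card has less VRAM than requested
        kept.append(rec)
    return kept


if __name__ == "__main__":
    # Illustrative data only.
    runs = [
        {"gpu": "Card A", "date": "2022-11-03", "optimization": "xformers", "vram_gb": 8},
        {"gpu": "Card A", "date": "2023-04-18", "optimization": "sdp", "vram_gb": 8},
        {"gpu": "Card B", "date": "2023-06-01", "optimization": "xformers", "vram_gb": 12},
    ]
    recent = filter_runs(runs, since=date(2023, 1, 1))
    print([r["gpu"] for r in recent])
    print([r["gpu"] for r in filter_runs(recent, optimization="xformers")])
```

Keeping every filter optional means the same function covers "2023 only", "one optimization method", and "at least N GB of VRAM" without separate code paths.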

For your last question, I don't think the host bus configuration is recorded. You can see everything a benchmark entry contains by scrolling through the database, and I don't see it there. I suspect the PCIe configuration does matter for a card on a given system, but its impact is likely smaller than the choice of GPU itself. I'd definitely be curious to see how it breaks down, though, since my motherboard doesn't support PCIe 4.0, for example.

I'd be interested to see how that breaks down too. I'm sure the interface affects raw speed, but it would be great to see what that means in real-world terms. While I doubt many people specifically want to run SD on an external GPU over Thunderbolt or similar, for the sake of human knowledge it'd be great to know whether that's actually a viable approach.
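To put rough numbers on the PCIe and Thunderbolt question, here is a back-of-the-envelope sketch of theoretical link bandwidth. It assumes the 128b/130b encoding used by PCIe 3.0 and 4.0, ignores protocol overhead, assumes a Thunderbolt 3 eGPU gets a PCIe 3.0 x4 link (real enclosures often deliver less), and uses a hypothetical 2 GB checkpoint. None of these figures come from the benchmark data.

```python
# Per-lane transfer rates in gigatransfers per second.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and 4.0


def link_bandwidth_gb_s(gen, lanes):
    """Theoretical one-direction bandwidth in GB/s (no protocol overhead)."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes  # /8 converts bits to bytes


def transfer_seconds(size_gb, gen, lanes):
    """Time to push size_gb across the link at theoretical bandwidth."""
    return size_gb / link_bandwidth_gb_s(gen, lanes)


if __name__ == "__main__":
    CHECKPOINT_GB = 2.0  # hypothetical model size
    links = [
        ("PCIe 4.0 x16", 4, 16),
        ("PCIe 3.0 x16", 3, 16),
        ("PCIe 3.0 x4 (assumed Thunderbolt 3 eGPU)", 3, 4),
    ]
    for name, gen, lanes in links:
        bw = link_bandwidth_gb_s(gen, lanes)
        t = transfer_seconds(CHECKPOINT_GB, gen, lanes)
        print(f"{name}: {bw:5.2f} GB/s, {t:.3f} s to load {CHECKPOINT_GB} GB")
```

If the model stays resident in VRAM during sampling (no CPU offloading), the bus mostly matters for that one-time load plus small per-step transfers, which would fit the suspicion that the GPU itself dominates. Modes that offload weights to system RAM would shift that balance toward the bus, and an x4 link would feel it most.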

I've always loved that external GPUs exist, even though I've never been in a situation where they were a realistic choice for me.