this post was submitted on 20 Jun 2023

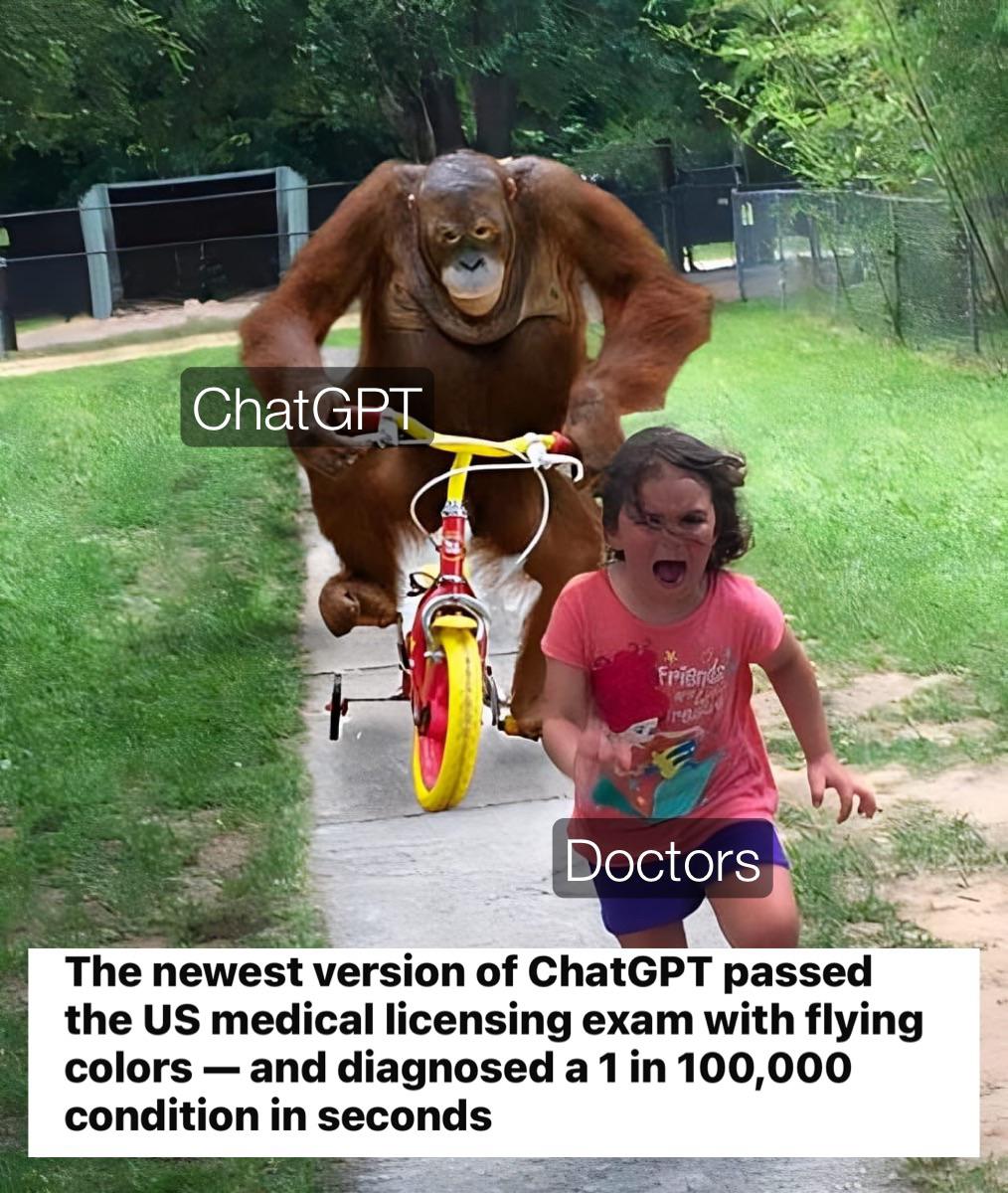

Appreciate the funny post, but for anyone reading too much into this, it's misleading at best (and at only 60% correct, that's just barely passing). It's referencing a portion of the test made up of multiple-choice questions, which is relatively easy for a language model, since it can predict an answer from a focused question. Please don't ask ChatGPT individualized questions about your health. It does a decent job of giving out general information about medical topics, but you'd be better off going to a reputable site like Mayo Clinic, Cleveland Clinic, or the National Library of Medicine, all of which maintain very nice free medical knowledge databases on tons of topics. That's probably where ChatGPT is scraping its answers from anyway, and you won't have to worry about it making up nonsense that looks real and inserting it into the answer.

And if ChatGPT comes up with sources in an answer, look them up yourself no matter how convincing they seem on their face. I've seen it invent DOI numbers that don't exist and all sorts of weird stuff.
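If you'd rather script the "look them up yourself" step for DOIs, here's a rough Python sketch. The function names are made up for illustration, the regex is only a loose format check, and the lookup assumes the doi.org handle endpoint (`https://doi.org/api/handles/<doi>`) answers with `responseCode` 1 for a registered DOI and a 404 for an unknown one, which is my understanding of how it behaves rather than a guarantee.

```python
import json
import re
import urllib.error
import urllib.parse
import urllib.request

# Loose format check: "10.", a 4-9 digit registrant code, "/", then a suffix.
# Passing this only means the string is shaped like a DOI, not that it exists.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

# Prefixes people (and chatbots) commonly put in front of a DOI.
_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(raw: str) -> str:
    """Strip whitespace and a leading 'doi:' or resolver URL, if present."""
    doi = raw.strip()
    for prefix in _PREFIXES:
        if doi.lower().startswith(prefix):
            doi = doi[len(prefix):]
            break
    return doi.strip()


def looks_like_doi(raw: str) -> bool:
    """Offline check: is the string at least shaped like a DOI?"""
    return DOI_PATTERN.match(normalize_doi(raw)) is not None


def doi_is_registered(raw: str, timeout: float = 10.0) -> bool:
    """Online check (needs network): ask doi.org whether the DOI exists.

    Assumption: the handle endpoint returns JSON with responseCode == 1
    for a registered DOI and HTTP 404 for one that is not registered.
    """
    doi = normalize_doi(raw)
    if not DOI_PATTERN.match(doi):
        return False
    url = "https://doi.org/api/handles/" + urllib.parse.quote(doi, safe="/")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp).get("responseCode") == 1
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise  # anything else (rate limit, outage) is not a verdict
```

Even when `doi_is_registered` comes back true, that only tells you the DOI exists. You still have to open it and check that the title and authors match what the chatbot claimed, since a made-up citation can borrow a real DOI from an unrelated paper.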

Yuuuup.

Language models, just like any model, only interpolate from what they've been trained on. They can answer questions they've already seen the answer to a million times easily enough, but they do that through stored word association, not reasoning.

In other words, describe your symptoms in a way that isn't popular, and you'll get "misdiagnosed".

And they have a real problem with making up citations of every type: fabricated textbooks, newspaper articles, legal decisions, and entire academic journals. They can recognize the citation pattern and use it, but because any given citation is repeated relatively rarely compared to other word combinations (most papers get cited dozens of times, not the millions of times LLMs need to make confident associations between words), they just fill in basically whatever into the citation format.