this post was submitted on 19 Aug 2023

Computer Science

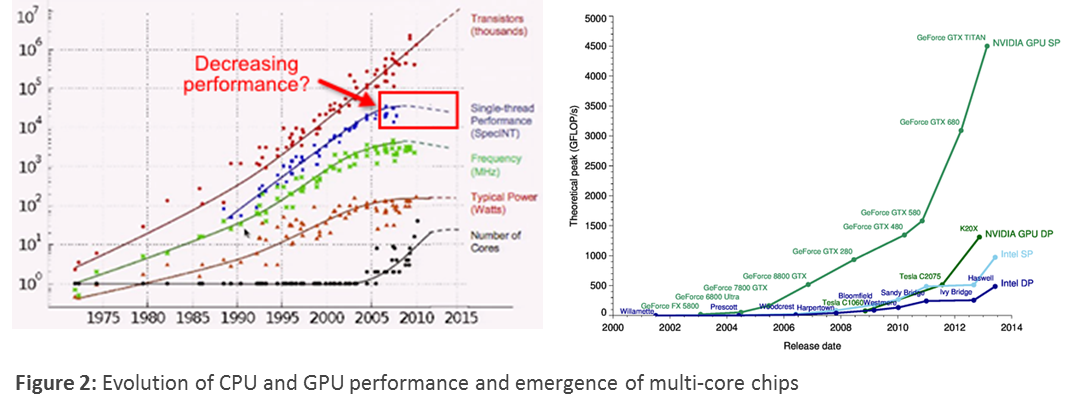

First, GPUs and CPUs are very different beasts. GPU workloads are almost by definition highly parallel, so a GPU has an architecture where it is much easier to just throw more cores at the problem, even if you don’t make each core faster. That makes power much less of a constraint, because performance doesn’t depend on pushing clocks higher. Take a look at GPU clock rates vs CPU clock rates.
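To put rough numbers on the power point, here is a back-of-the-envelope sketch. It assumes the textbook approximation that dynamic power scales with capacitance × voltage² × frequency, and further assumes that voltage has to rise roughly in line with clock speed, which gives power growing with the cube of the clock. Real chips deviate from this, and the function names are just illustrative.

```python
def relative_power(cores: int, clock: float) -> float:
    """Relative dynamic power, assuming P ~ cores * clock**3.

    The cube comes from P ~ C * V^2 * f with the (simplifying) assumption
    that voltage scales linearly with clock frequency.
    """
    return cores * clock ** 3


def relative_throughput(cores: int, clock: float) -> float:
    """Relative throughput, assuming a perfectly parallel workload."""
    return cores * clock


if __name__ == "__main__":
    # Two ways to double throughput relative to one core at clock 1.0:
    for label, cores, clock in [("1 core, 2x clock", 1, 2.0),
                                ("2 cores, 1x clock", 2, 1.0)]:
        print(label,
              "throughput:", relative_throughput(cores, clock),
              "power:", relative_power(cores, clock))
```

Under those assumptions, doubling the clock costs about 8x the power, while doubling the core count costs about 2x for the same throughput, but only if the workload actually parallelizes.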

CPU workloads tend to have much, much less parallelism, so each additional core brings a smaller and smaller return.
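The diminishing return from extra cores is usually described with Amdahl's law. A minimal sketch, assuming a workload where some fixed fraction can be parallelized perfectly and the rest is strictly serial (the fractions below are made-up examples, not measurements):

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over one core per Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: mostly serial vs almost fully parallel.
    for p in (0.5, 0.99):
        for n in (2, 8, 64, 1024):
            print(f"p={p:.2f} cores={n:5d} speedup={amdahl_speedup(p, n):.2f}")
```

With only half the work parallelizable, the speedup can never reach 2x no matter how many cores you add, which is why piling on cores helps a GPU-style workload far more than a typical CPU one.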

Second, GPUs have started to show lower year-over-year lift too. Your chart is almost a decade out of date. Take a look at, say, a 2080 vs 3080 vs 4080 and you’ll see that the overall compute lift is shrinking.