this post was submitted on 19 Dec 2024

Linux

I have worked with LVM for my job in the past. I am no expert, but I think I can help.

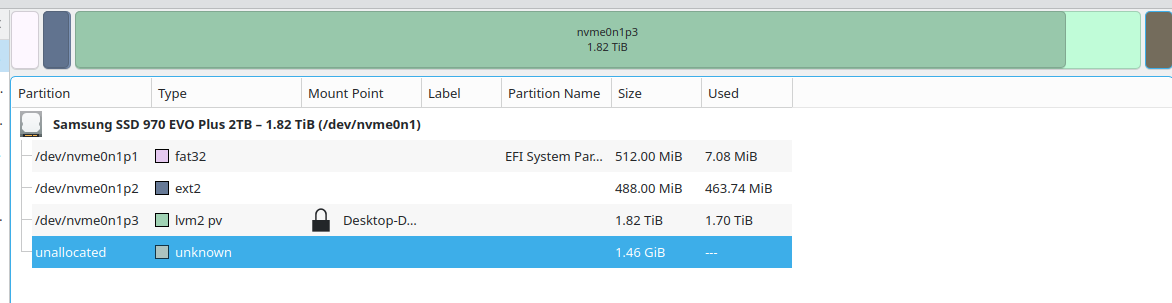

Right, the issue you are seeing with your /boot is that the LVM partition sits directly after it, and the free space is located after the LVM partition.

This means that you can't increase the size of /boot as the LVM partition is in the way.

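If I understand it correctly, this is because a partition table describes each partition as one contiguous range of sectors (a start and an end). That convention dates back to when mechanical hard drives were standard, and SSDs are partitioned exactly the same way, so a partition can only grow into free space directly after it.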

So you can't simply grow /boot in place; the straightforward fix is to reinstall the system.
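To make the contiguity point concrete, here is a minimal Python sketch using a made-up layout measured in sectors. The `Partition` class, the `can_grow_in_place` helper, and all the numbers are hypothetical, not taken from OP's disk. A partition can only grow up to the start of whatever comes right after it:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Partition:
    name: str
    start: int  # first sector, inclusive
    end: int    # last sector, inclusive


def can_grow_in_place(layout: list[Partition], name: str, new_sectors: int, last_usable: int) -> bool:
    """True if `name` can be resized to `new_sectors` without moving any partition.

    A partition is one contiguous range, so it may only extend into the
    sectors between its start and the next partition (or the end of the disk).
    """
    ordered = sorted(layout, key=lambda p: p.start)
    for i, part in enumerate(ordered):
        if part.name == name:
            limit = ordered[i + 1].start if i + 1 < len(ordered) else last_usable + 1
            return part.start + new_sectors <= limit
    raise KeyError(name)


# Hypothetical layout: /boot, then LVM, then free space up to sector 1000.
layout = [Partition("boot", 10, 109), Partition("lvm", 110, 849)]
print(can_grow_in_place(layout, "boot", 200, 1000))  # False: the LVM partition starts at 110
print(can_grow_in_place(layout, "lvm", 800, 1000))   # True: free space follows it
```

Growing the LVM partition would work because the free space follows it; growing /boot fails because the LVM partition is in the way.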

As for your question about why KDE Partition Manager shows your LVM partition as full: that is because, from its point of view, it almost is...

Let me explain.

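LVM is an abstraction layer put on top of the disk/partition. Here is the concept LVM uses in your setup:

- Layer 0: the disk
- Layer 1: the partition
- Layer 2: the LVM PV (physical volume)
- Layer 3: the LVM VG (volume group)
- Layer 4: the LVM LVs (logical volumes), which hold your filesystems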

The partition manager can only see layers 0, 1 and 2: it sees the disk, it sees the partition, and it sees that the partition is an LVM PV.

The LVM PV takes up almost all the space in the partition, but it just hands that space up the LVM chain to the LVM VG. The VG is where you can combine several PVs across several disks into one pool of space, which you can then carve into LVM LVs independently.

So while the partition manager only sees that there is an LVM PV in the partition, it has no idea how that space is used.
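A toy Python model of that stack shows why the partition looks full while there is still room higher up. This is a minimal sketch: the class names, the `layer_report` helper, and the sizes are hypothetical, and it assumes the partition manager counts the whole PV as used, as described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LogicalVolume:
    name: str
    size_mib: int
    fs_used_mib: int  # what the filesystem inside the LV reports as used


@dataclass
class VolumeGroup:
    pv_sizes_mib: list[int]  # one entry per PV, possibly on different disks
    lvs: list[LogicalVolume] = field(default_factory=list)

    def unallocated_mib(self) -> int:
        # Pooled PV space that no LV has claimed yet.
        return sum(self.pv_sizes_mib) - sum(lv.size_mib for lv in self.lvs)


def layer_report(partition_mib: int, pv_mib: int, vg: VolumeGroup) -> dict[str, int]:
    """What each layer would report, from the bottom of the stack up."""
    return {
        # Layers 0-2: a partition manager only sees that the PV fills the partition.
        "partition_used_by_pv": pv_mib,
        "partition_free": partition_mib - pv_mib,
        # Layer 3: the VG knows how much of its pooled space is handed out to LVs.
        "vg_unallocated": vg.unallocated_mib(),
        # Layer 4: only the filesystems inside the LVs know their own free space.
        "fs_free_inside_lvs": sum(lv.size_mib - lv.fs_used_mib for lv in vg.lvs),
    }


# Hypothetical numbers: a 100,000 MiB partition whose PV is a little smaller.
vg = VolumeGroup([99_996], [LogicalVolume("root", 30_000, 12_000), LogicalVolume("home", 60_000, 20_000)])
report = layer_report(100_000, 99_996, vg)
print(report)
```

The partition looks 4 MiB short of full, while the VG and the filesystems inside the LVs still have plenty of room that the partition manager cannot see.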

Now, there is a way to possibly move the data around and solve your issue, but I have only done it on a test system.

You need another disk, and probably a live USB.

I will not give exact commands to run, but I will give enough information to make the concept clear. This is so that you have to read the official documentation before continuing.

To possibly solve this (or mess up your data completely; that is always a risk when moving data around, so make backups!), you need to do the following:

1. Get a new drive that can store all your data.
2. Make a single LVM partition on it.
3. Make it an LVM PV and add it to the existing LVM VG.
4. Move the LV from the old PV to the new PV (do not just expand the LV!).
5. Remove the old PV from the VG.
6. Delete the old LVM partition.
7. Increase /boot.
8. Create a new, smaller LVM partition.
9. Make it a new PV.
10. Add this new PV to the old VG.
11. Move the LV from the temporary PV on the new disk to the smaller PV on the old disk.
12. Remove the temporary PV from the VG.

That should in theory resolve the issue.
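The steps above can be modeled as bookkeeping on physical extents, which is roughly what the real LVM tools (`pvcreate`, `vgextend`, `pvmove`, `vgreduce`) take care of for you. This is a toy Python sketch, not a substitute for reading their documentation: the `VG` class, its methods, and the extent counts are hypothetical. It shows that the LV's size never changes, only where its extents live, and that a PV can only leave the VG once nothing is stored on it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VG:
    """Toy model of an LVM volume group, counted in physical extents."""
    pv_capacity: dict[str, int] = field(default_factory=dict)
    # LV name -> {PV name -> extents of that LV stored on that PV}
    allocations: dict[str, dict[str, int]] = field(default_factory=dict)

    def used_on(self, pv: str) -> int:
        return sum(alloc.get(pv, 0) for alloc in self.allocations.values())

    def add_pv(self, pv: str, extents: int) -> None:
        # Comparable in spirit to creating a PV and adding it to the VG.
        self.pv_capacity[pv] = extents

    def move_lv(self, lv: str, src: str, dst: str) -> None:
        # Comparable in spirit to pvmove: relocate extents, the LV size stays the same.
        alloc = self.allocations[lv]
        n = alloc.get(src, 0)
        if n > self.pv_capacity[dst] - self.used_on(dst):
            raise RuntimeError(f"not enough free extents on {dst} for {lv}")
        alloc.pop(src, None)
        alloc[dst] = alloc.get(dst, 0) + n

    def remove_pv(self, pv: str) -> None:
        # Comparable in spirit to vgreduce: only allowed once the PV is empty.
        if self.used_on(pv):
            raise RuntimeError(f"{pv} still holds allocated extents")
        del self.pv_capacity[pv]


vg = VG()
vg.add_pv("old", 1000)
vg.allocations["root"] = {"old": 900}

vg.add_pv("temp", 2000)                  # new disk, new PV, added to the VG
vg.move_lv("root", "old", "temp")        # move the LV off the old PV
vg.remove_pv("old")                      # old PV leaves the VG
# Deleting the old partition and growing /boot happen outside LVM.
vg.add_pv("old_small", 950)              # smaller partition, new PV, added to the VG
vg.move_lv("root", "temp", "old_small")  # move the LV back to the old disk
vg.remove_pv("temp")                     # temporary PV leaves the VG
print(vg.pv_capacity, vg.allocations)

# A destination that is too small is refused instead of half-moving the LV.
tiny = VG()
tiny.add_pv("a", 10)
tiny.allocations["x"] = {"a": 8}
tiny.add_pv("b", 5)
try:
    tiny.move_lv("x", "a", "b")
    refused = False
except RuntimeError:
    refused = True
```

This is also why the smaller partition must still be big enough for everything in the LV: the move is refused otherwise.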

Note, however, that this is a highly dangerous operation. The best thing to do would be to copy your data to a new drive and reinstall the computer with the new partition sizes set from the start.

Finally, LVMs are damn cool, but they don't offer redundancy by default. You can set up software RAID in LVM, but that is not something I have experience with.

You can move the partition to the end of the disk, where OP has 1.5 GB of free space. It'll leave a 500MB gap before the LVM, but it is what it is.
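Finding room for a relocated /boot is a free-space search over the partition layout. Here is a minimal Python sketch using hypothetical MiB offsets chosen to mirror this thread (a 512 MiB /boot at the start, about 1.5 GiB free at the end); the `free_gaps` and `first_fit` helpers are made up for illustration, and a real partitioning tool would also need to handle alignment:

```python
from __future__ import annotations


def free_gaps(used: list[tuple[int, int]], first_usable: int, last_usable: int) -> list[tuple[int, int]]:
    """Free (start, end) ranges, inclusive, not covered by any partition."""
    gaps = []
    cursor = first_usable
    for start, end in sorted(used):
        if start > cursor:
            gaps.append((cursor, start - 1))
        cursor = max(cursor, end + 1)
    if cursor <= last_usable:
        gaps.append((cursor, last_usable))
    return gaps


def first_fit(gaps: list[tuple[int, int]], size: int) -> tuple[int, int] | None:
    """Place a new partition of `size` units in the first gap big enough for it."""
    for start, end in gaps:
        if end - start + 1 >= size:
            return (start, start + size - 1)
    return None


BOOT, LVM, LAST = (1, 512), (513, 100_000), 101_536  # hypothetical MiB offsets
before = free_gaps([BOOT, LVM], 1, LAST)              # only the space after the LVM
new_boot = first_fit(before, 1024)                    # a 1 GiB /boot at the end
after = free_gaps([LVM, new_boot], 1, LAST)           # old /boot deleted
print(before, new_boot, after)
```

The `(1, 512)` entry in `after` is the roughly 500MB hole left in front of the LVM.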

Call me old fashioned but I don't want to move partitions containing data, especially not on the same disk.

With LVM there are specific tools to do it, which I would trust more than just moving a partition around.

~~It's the boot partition, it needs to be a plain partition formatted as FAT32.~~ Noticed it's a separate boot partition formatted as ext2, but the point stands: most likely bootloader limitations.

That said, you could also just make a new one, copy the data over, and delete the old one once you've verified the data's all good.
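For the "verify the data's all good" part, comparing checksums of every file in the old and new /boot is one simple approach. A minimal Python sketch (the helper names are hypothetical, and it only compares file contents, not permissions, ownership, or symlink targets):

```python
from __future__ import annotations

import hashlib
import os
import shutil
import tempfile
from pathlib import Path


def tree_digests(root: str) -> dict[str, str]:
    """Map each file's path, relative to `root`, to the SHA-256 of its contents."""
    digests = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for fname in filenames:
            path = Path(dirpath, fname)
            digests[path.relative_to(root).as_posix()] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def copies_match(old_root: str, new_root: str) -> bool:
    # Same set of relative paths and identical contents for each one.
    return tree_digests(old_root) == tree_digests(new_root)


# Demo on throwaway directories standing in for the old and new /boot.
with tempfile.TemporaryDirectory() as old, tempfile.TemporaryDirectory() as new:
    Path(old, "grub").mkdir()
    Path(old, "grub", "grub.cfg").write_text("set timeout=5\n")
    Path(old, "vmlinuz-demo").write_bytes(b"kernel image bytes")
    shutil.copytree(old, new, dirs_exist_ok=True)
    match_after_copy = copies_match(old, new)
    Path(new, "vmlinuz-demo").write_bytes(b"truncated")
    match_after_damage = copies_match(old, new)

print(match_after_copy, match_after_damage)  # True False
```

Only once the copy matches would you delete the old partition.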

I wouldn't do it with a larger partition, but these days moving a 500MB partition takes a couple of seconds tops, even on spinning rust, and it's a boot partition so it's kind of whatever. Very low risk overall, and everything on it can be reinstalled and regenerated easily.

Hmm, I thought the boot partition was required to be at the start of the disk, is that not the case?

It doesn't have to be; moving it to the end of the disk is a fairly common workaround for this specific issue. UEFI only looks for a GPT partition table and, within it, a partition whose type GUID identifies it as an EFI System Partition (ESP), with a supported filesystem on it. Which filesystems are supported is implementation dependent, but FAT32 is guaranteed to be supported, so most go with that. Apple's firmwares can also do HFS+ (and APFS?). More advanced firmwares also let the user add their own drivers, in which case, as long as you can find a driver for it, you can use whatever filesystem you want.
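To illustrate that only the partition type matters, here is a minimal Python sketch that decodes one 128-byte GPT partition entry and compares its type GUID with the well-known ESP type GUID. It assumes the standard 128-byte entry layout and skips reading the GPT header (which, on a real disk, gives the entry array's location and entry size); the helper names and the synthetic entries are hypothetical. The start and end LBAs are decoded but never consulted:

```python
import struct
import uuid

# ESP type GUID from the UEFI spec; Linux filesystem data type GUID for contrast.
ESP_TYPE = uuid.UUID("C12A7328-F81F-11D2-BA4B-00A0C93EC93B")
LINUX_FS_TYPE = uuid.UUID("0FC63DAF-8483-4772-8E79-3D69D8477DE4")

# 128-byte entry: type GUID, unique GUID, first LBA, last LBA, attributes, UTF-16LE name.
ENTRY = struct.Struct("<16s16sQQQ72s")


def parse_entry(raw: bytes) -> dict:
    type_b, _uniq_b, first_lba, last_lba, _attrs, name_b = ENTRY.unpack(raw[: ENTRY.size])
    return {
        "type": uuid.UUID(bytes_le=type_b),  # GUIDs are stored mixed-endian on disk
        "first_lba": first_lba,
        "last_lba": last_lba,
        "name": name_b.decode("utf-16-le").split("\x00", 1)[0],
    }


def is_esp(entry: dict) -> bool:
    # Position on the disk (first_lba/last_lba) plays no part in the decision.
    return entry["type"] == ESP_TYPE


def make_entry(type_guid: uuid.UUID, first: int, last: int, name: str) -> bytes:
    """Build a synthetic entry for testing; struct pads the name with zero bytes."""
    return ENTRY.pack(type_guid.bytes_le, uuid.uuid4().bytes_le, first, last, 0, name.encode("utf-16-le"))


# An ESP placed near the end of a (hypothetical) disk is still an ESP.
esp_at_end = parse_entry(make_entry(ESP_TYPE, 1_900_000, 1_999_999, "EFI"))
root_fs = parse_entry(make_entry(LINUX_FS_TYPE, 2048, 1_899_999, "root"))
print(is_esp(esp_at_end), is_esp(root_fs))  # True False
```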

It is common, however, to put it at the start anyway, out of convenience. Usually it's other partitions you want to resize, and when imaging onto a new, bigger disk (or in cloud environments where the disk can be any size and the OS resizes itself to fit on boot), growing the OS partition is a lot easier when it comes last. But the UEFI spec doesn't care at all; some firmwares will even accept multiple ESPs on the same disk.

Some older firmwares may also have had size limits where, if the partition sits too far into the disk, the firmware can't address it, which would be problematic on very large disks (2TB+), but that's old EFI woes AFAIK.
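If that limit is the usual 2TB one, it most likely comes from 32-bit sector addresses with 512-byte sectors (the same ceiling MBR partition tables have). Under that assumption, the arithmetic works out as:

```latex
2^{32}\ \text{sectors} \times 2^{9}\ \tfrac{\text{bytes}}{\text{sector}}
  = 2^{41}\ \text{bytes}
  = 2\ \text{TiB}
  \approx 2.2\ \text{TB}
```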

Thank you, so it's what I thought (though I couldn't have explained why it is so), I can't add non-contiguous sections to the same partition. Fair enough.

I've been frustrated occasionally by my Debian install, so I might take the opportunity to switch to something else. Maybe Bazzite? Would be interesting at least. I'll copy my /home off first, and if I use LVM again I'll leave some empty space before it next time.

I would advise against wasting space by leaving it completely unused.

The long-term solution is to remember to run `apt-get autoremove --purge` so the system removes unused packages, like old kernels in /boot.
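To see how much of /boot each kernel version takes (for example before and after running that), here is a minimal Python sketch. It assumes Debian-style file names (`vmlinuz-VERSION`, `initrd.img-VERSION`, `System.map-VERSION`, `config-VERSION`); the `boot_usage_by_version` helper is hypothetical, and the demo uses a throwaway directory with fake files instead of the real /boot:

```python
from __future__ import annotations

import os
import re
import tempfile
from collections import defaultdict
from pathlib import Path

# Debian-style kernel files in /boot, e.g. vmlinuz-<version>, initrd.img-<version>.
KERNEL_FILE = re.compile(r"^(?:vmlinuz|initrd\.img|System\.map|config)-(.+)$")


def boot_usage_by_version(boot_dir: str = "/boot") -> dict[str, int]:
    """Total bytes per kernel version, summed over that version's files."""
    usage: dict[str, int] = defaultdict(int)
    for entry in os.scandir(boot_dir):
        match = KERNEL_FILE.match(entry.name)
        if match and entry.is_file():
            usage[match.group(1)] += entry.stat().st_size
    return dict(usage)


# Demo with fake files; on a real system you would call boot_usage_by_version().
with tempfile.TemporaryDirectory() as fake_boot:
    for name, size in [
        ("vmlinuz-6.1.0-1-amd64", 100),
        ("initrd.img-6.1.0-1-amd64", 50),
        ("vmlinuz-6.1.0-2-amd64", 30),
        ("grub.cfg", 10),  # not a kernel file, ignored
    ]:
        Path(fake_boot, name).write_bytes(b"\0" * size)
    demo = boot_usage_by_version(fake_boot)

print(sorted(demo.items()))  # [('6.1.0-1-amd64', 150), ('6.1.0-2-amd64', 30)]
```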