this post was submitted on 19 Aug 2023

4 points (100.0% liked)

Computer Science

418 readers

1 users here now

A community dedicated for computer science topics; Everyone's welcomed from student, lecturer, teacher to hobbyist!

founded 5 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

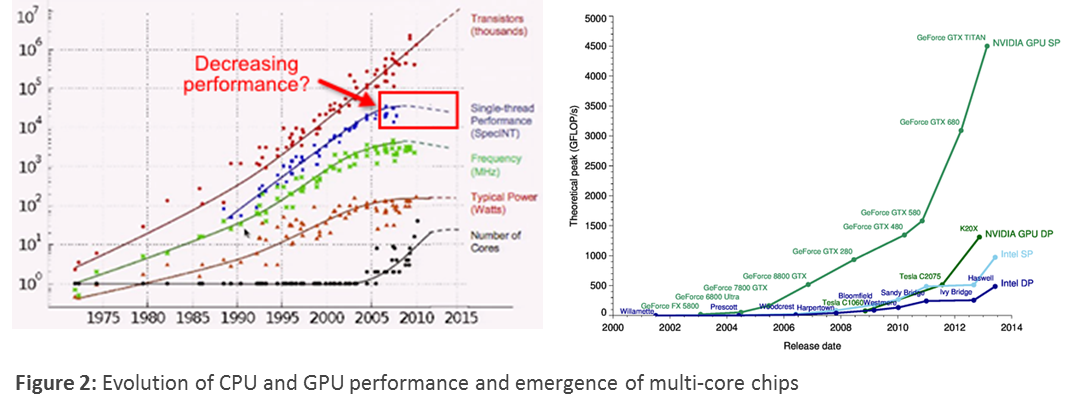

It's a real technical limitation. The main driver of CPU progress was the ability to make transistors smaller, so you could pack more of them in without needing so much power that they burn themselves up. However, we've reached the point where they are so small that it is getting extremely difficult to make them any smaller, because of physics. Sure, there is and will continue to be progress in shrinking transistors even more, but it's just damn hard.
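To put a rough number on the "burn themselves up" part: the usual first-order estimate for CMOS switching power is P = a * C * V^2 * f (activity factor, switched capacitance, supply voltage, clock frequency). This is my addition, not something from the thread, and every value below is a made-up example rather than data for a real chip. The sketch shows why chasing clock speed is so costly: a higher clock typically needs a higher voltage, and voltage enters squared.

```python
# Illustrative only: the classic CMOS dynamic-power approximation P = a * C * V^2 * f.
# The activity factor, capacitance, voltages and clock rates are hypothetical example
# values, not measurements of any real processor.

def dynamic_power(activity: float, capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Approximate switching power in watts for a CMOS circuit."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz

# Hypothetical baseline: 3 GHz at 1.0 V.
baseline = dynamic_power(0.1, 1e-7, 1.0, 3e9)

# Doubling the clock usually requires a higher supply voltage; assume +20% here.
faster = dynamic_power(0.1, 1e-7, 1.2, 6e9)

print(f"baseline: {baseline:.1f} W, doubled clock: {faster:.1f} W, ratio: {faster / baseline:.2f}")
```

With these assumed numbers, doubling the clock costs about 2.88x the power, not 2x. That heat has to go somewhere, which is one reason clock speeds stalled long before anything like the 70 GHz prototype mentioned below reached consumers.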

Quite right. The argument seemed coherent to me until I looked at the performance of recent GPUs, where those limits no longer seem to exist.

I think it's a legitimate question to ask. My hypothesis is that the industry is trying to restrict the computing power of consumer machines (for military defence interests?), but the very large market for video games and 3D graphics constantly demands more computing power, and manufacturers are obliged to keep up with that demand.

What confuses me, I think, is that 15 years ago I read a serious technical article about a 70 GHz CPU core prototype.

A GPU isn't limited by die size and system architecture the way a CPU is. GPU cores also do simpler calculations, so scaling up is almost as simple as putting more of them on a board, which can be as large as the manufacturer wants.
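One way to see why "just add more units" works for GPUs but not for typical CPU workloads is Amdahl's law: if a fraction p of the work can run in parallel on n units, the best-case speedup is 1 / ((1 - p) + p / n). Amdahl's law is my framing, not something the commenters mention, and the parallel fractions below (0.99 for per-pixel graphics work, 0.5 for a branchy CPU task) are assumed for illustration only.

```python
# Amdahl's law: ideal speedup on `units` parallel workers when a fraction
# `parallel_fraction` of the work can be split across them.

def amdahl_speedup(parallel_fraction: float, units: int) -> float:
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / units)

# Hypothetical workloads: per-pixel shading is almost entirely parallel,
# while a typical branchy CPU task might only be half parallelisable.
for label, p in [("pixel-shading-like", 0.99), ("branchy CPU-like", 0.5)]:
    for n in (8, 1000):
        print(f"{label:>18} p={p:.2f} units={n:>4}: {amdahl_speedup(p, n):6.1f}x")
```

Under these assumptions, 1000 units give roughly 91x on the graphics-like workload but barely 2x on the CPU-like one. That is why throwing more simple cores at the problem pays off for GPUs, while CPUs still depend on making each core faster.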

Read about the differences in this Nvidia blog post and you'll see that the two are wildly different. It will also make sense why they appear in different charts in the source you originally provided.