this post was submitted on 21 Oct 2024

Facepalm

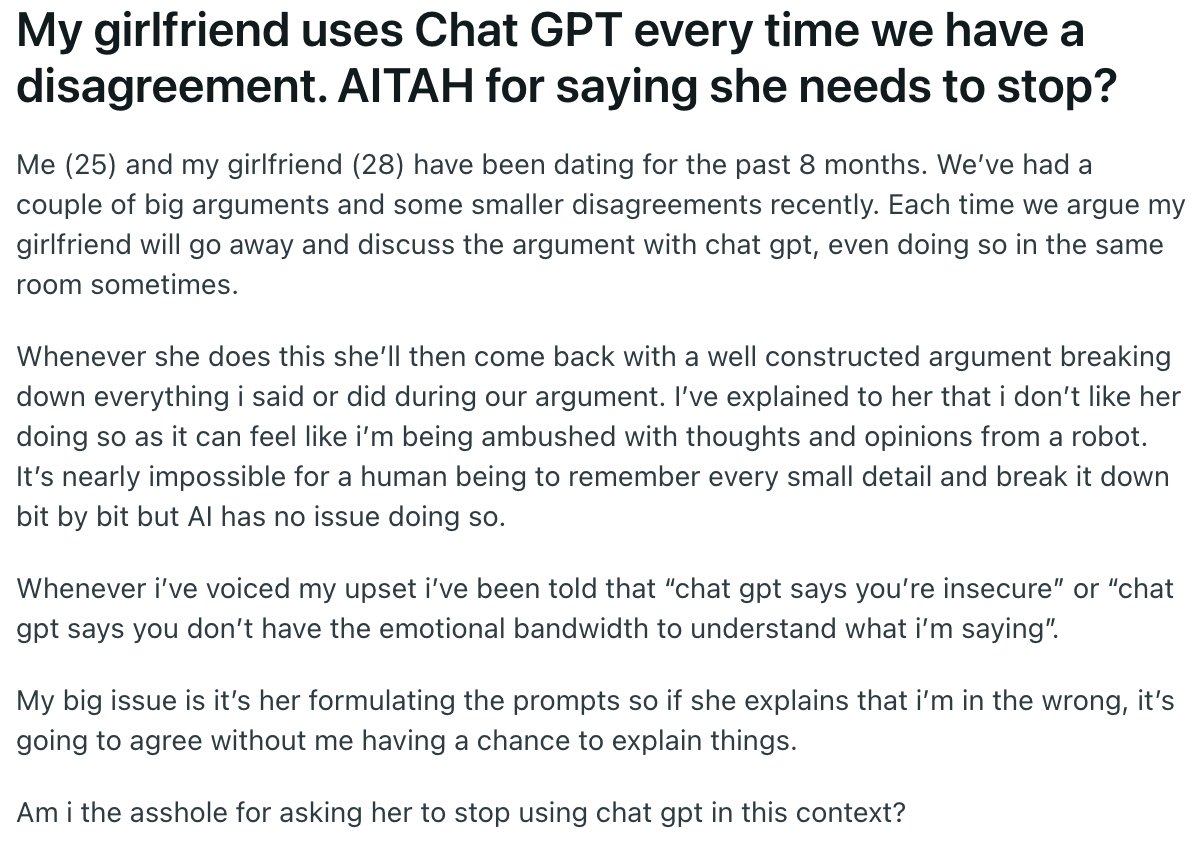

The thing that people don't understand yet is that LLMs are "yes men".

If ChatGPT tells you the sky is blue, but you respond "actually it's not," it will go full C-3PO:

"You're absolutely correct. I apologize for my hasty answer, Master Luke. The sky is in fact green."

Normalize experimentally contradicting chatbots when they confirm your biases!

I've used ChatGPT for argument advice before. Not, like, weaponizing it ("hahah, robot says you're wrong! Checkmate!"), but more as a sanity test: do these arguments make sense, etc.

I always try to strip identifying information from what I input, so it HAS to pick a side. It feels like it gets it "right" (siding with the author, i.e. me) about half the time. Usually I'll ask it to break down each side's argument individually, then choose the one it agrees with and explain why.

Still obsessive.