this post was submitted on 25 Jun 2024

memes

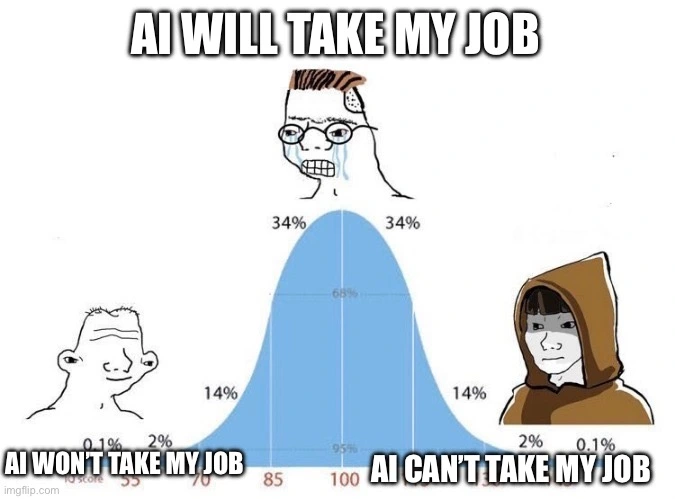

This was exactly my experience. Freaked myself out last year and decided the best thing was to dive headfirst into it to figure out how it worked and what its capabilities are.

Which - it has a lot. It can do a lot, and it's impressive tech. I've coded several projects and built my own models. But it's far from perfect. There are so, so many pitfalls that startups and tech evangelists just happily ignore. Most of these problems can't be solved easily, if at all. It's not intelligent; it's a very advanced and unique prediction machine. The funny thing to me is that it's still basically machine learning, the same tech we've had since the mid-2000s; it's just that we have fancier hardware now. Big tech wants everyone to believe it's brand new... and it is... kind of. But not really either.

So much of the modern Microsoft/ChatGPT project is effectively brute-forcing intelligence from accumulated raw data. That's why they need phenomenal amounts of electricity, processing power, and physical space to make the project work.

There are other - arguably better, but definitely more sophisticated - approaches to developing genetic algorithms and machine learning techniques. If any of them prove out, they have the potential to render a great deal of Microsoft's original investment worthless by doing what Microsoft is doing far faster and more efficiently than the Sam Altman "Give me all the electricity and money to hit the AI problem with a very big hammer" solution.

It takes a lot of energy to train the models in the first place, but very little to run them once you have them. I run mixture of agents on my laptop, and it outperforms anything OpenAI has released on pretty much every benchmark, maybe even every one. I run it quite a bit and have noticed no change in my electricity bill. I imagine inference on GPT-4 must also be very efficient; if not, they should just switch to serving people open-source LLMs run through MoA.

Are you saying you have a local agent that is better than anything OpenAI has released? Where did this agent come from? Did you make it from scratch? How are you not worth billions if you can outperform them on "every benchmark"?

My dude, no, I'm not the creator, settle down. Mixture of agents is free and open for anyone to use. Here is a demo of it by Matthew Berman. It isn't hard to set up.

https://youtu.be/aoikSxHXBYw

Believe it or not, OpenAI is no longer making the best models. Claude 3.5 Sonnet is considerably better than OpenAI's best models.
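For anyone wondering what "mixture of agents" means in practice, here is a rough sketch of the general idea as I understand it: a few "proposer" models each draft an answer, later layers see the earlier drafts, and a final aggregator model writes one combined answer. This is not the code from the video or from any particular project. The names `mixture_of_agents`, `build_aggregate_prompt`, and `make_toy_model` are made up for illustration, and the "models" are toy functions standing in for real LLM calls.

```python
from typing import Callable, List

# A "model" is any function from prompt text to response text.
# In a real setup these would wrap LLM calls; here they are hypothetical stand-ins.
Model = Callable[[str], str]


def build_aggregate_prompt(question: str, drafts: List[str]) -> str:
    """Combine the original question with the previous layer's drafts."""
    numbered = "\n".join(f"{i + 1}. {d}" for i, d in enumerate(drafts))
    return (
        f"Question: {question}\n"
        f"Candidate answers:\n{numbered}\n"
        "Write one improved answer."
    )


def mixture_of_agents(
    question: str, layers: List[List[Model]], aggregator: Model
) -> str:
    """Run each layer of proposers in turn, then ask the aggregator to merge."""
    drafts: List[str] = []
    for proposers in layers:
        # The first layer sees only the question; later layers also see
        # the drafts produced by the layer before them.
        prompt = question if not drafts else build_aggregate_prompt(question, drafts)
        drafts = [model(prompt) for model in proposers]
    return aggregator(build_aggregate_prompt(question, drafts))


def make_toy_model(name: str) -> Model:
    """Toy model (assumption for the demo): tags the first line of its prompt."""
    return lambda prompt: f"[{name}] {prompt.splitlines()[0]}"
```

To try it with real models, you would swap each toy function for one that sends the prompt to a local or hosted LLM and returns its text. The layering logic stays the same.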