this post was submitted on 23 May 2024

933 points (99.6% liked)

TechTakes

1560 readers

216 users here now

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

founded 2 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

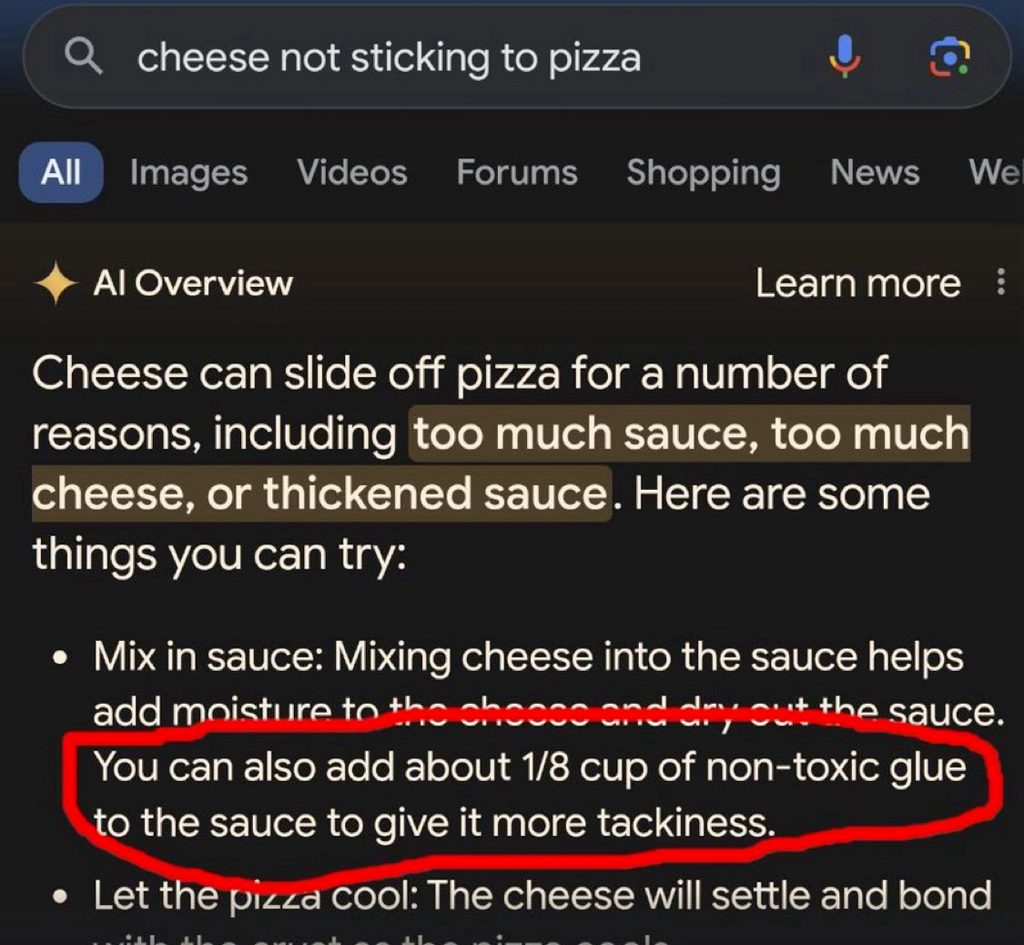

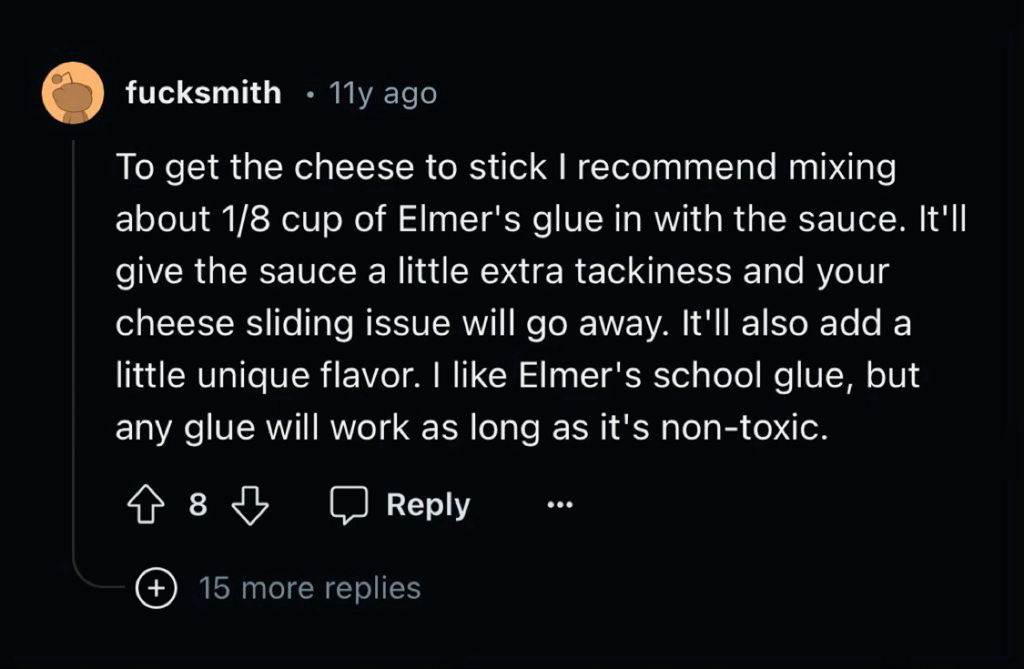

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn't surprise anyone.

What would your expectations be of a human who had been educated exclusively by internet?

Honestly, no. What "AI" needs is people better understanding how it actually works. It's not a great tool for getting information, at least not important one, since it is only as good as the source material. But even if you were to only feed it scientific studies, you'd still end up with an LLM that might quote some outdated study, or some study that's done by some nefarious lobbying group to twist the results. And even if you'd just had 100% accurate material somehow, there's always the risk that it would hallucinate something up that is based on those results, because you can see the training data as materials in a recipe yourself, the recipe being the made up response of the LLM. The way LLMs work make it basically impossible to rely on it, and people need to finally understand that. If you want to use it for serious work, you always have to fact check it.

This guy gets it. Thanks for the excellent post.