Are there any blind people on Lemmy screen-reading this? I get why alt-text is useful functionally on things like application interfaces, and instructive or educational text, but do you actually enjoy hearing a screen reader say "A meme of four panels. First panel. An image of a young man in a field. He is Anakin Skywalker as played by that guy who played Anakin Skywalker in the Star Wars prequels. He says 'bla bla bla'. Next frame. An image of a young woman. She is Padme as played by Natalie Portman. She is smiling. She says 'bla bla bla, right?'"

Microblog Memes

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerilla marketing.

- Posters are encouraged to link to the toot, tweet, etc. in the description of posts.


That's amazing. I'd love to hear from one of the audience about how they found the experience.

You'd love to hear?

Fuck, I laughed, I'm going to hell... Take your upvote

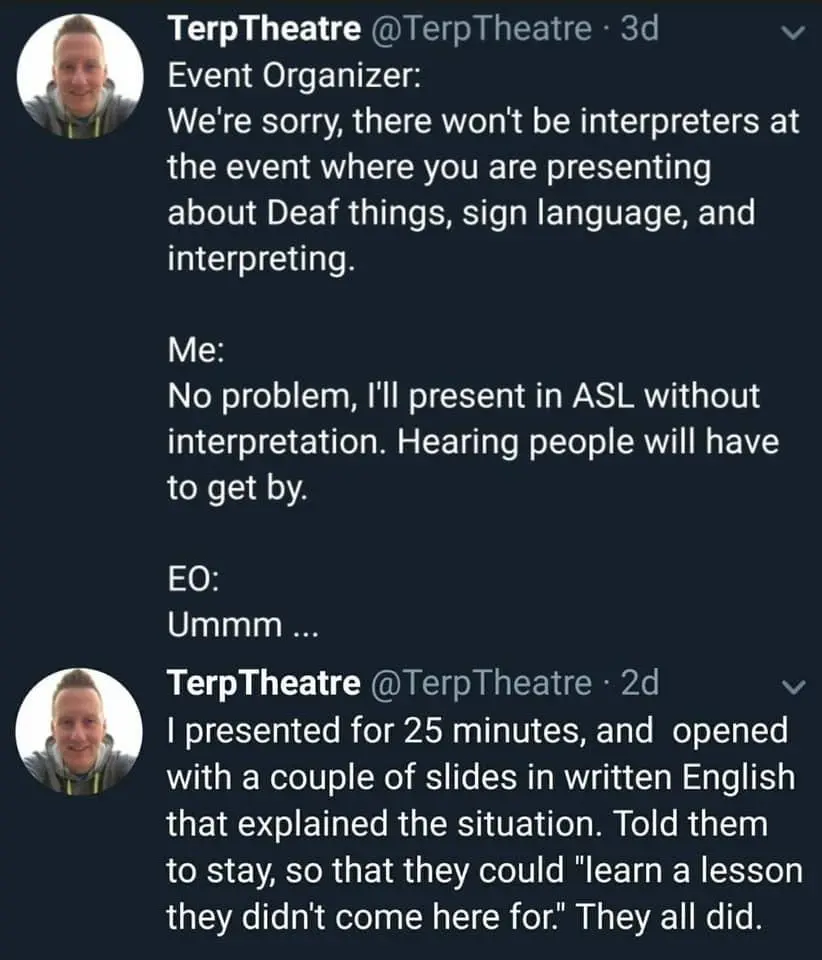

Is this a mute/deaf person giving a talk, or a talking/hearing person being incredibly based?

My assumption is the latter, which is awesome.

And to alt-text an embedded image in markdown:
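A minimal example in standard CommonMark image syntax, where the text inside the square brackets becomes the alt text. The URL and description here are placeholders for illustration, not a real image:

```markdown
![Four-panel meme: Anakin and Padme talking in a field](https://example.com/meme.png)
```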

Now this I did not know. Every day's a school day.

I brought mom's brownies for recess.

I accidentally brought the brownies from dad's house. We should probably eat these first.

I didn't know you could do that! I'll try it; let me know if it works.

FYI alt text only applies when the image fails to load. You can get hover text by adding quoted text after the URL.
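For illustration (placeholder URL and text), the quoted string after the URL is the CommonMark image title, which many renderers show as a tooltip on hover. The bracketed alt text stays in place either way, and whether the title is displayed depends on the client:

```markdown
![Alt text read by screen readers](https://example.com/image.png "Hover text shown as a tooltip")
```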


Alt text is read by screen readers even if the image loads.

On Eternity, I can see the alt-text when I long-press on the image.

Neat. For me on mobile, the hover text takes precedence but they both work.

Voyager doesn't seem to use the hover text at all. I think it should, though; I might make a post about it in their community.

Markdown features are extremely fragmented. Hover text might be a non-standard feature that not all markdown renderers can handle (or even a standard feature that's omitted in some renderers).

That's interesting. I'm on the default site UI, for reference.

It does.

I found that even when you can see the image, alt-text often helps significantly with understanding it, e.g. by naming a character or place, or by saying what kind of action is being done.

The one thing I'm uneasy about with these extremely detailed alt-text descriptions is that they seem like a treasure trove of training data for AI. The main thing holding back image generation is access to well-labelled images. I know it's against the ToS to scrape them, but that doesn't mean companies can't, just that they shouldn't. Between here and Mastodon, etc., there's a decent number of very well-labelled images.

The AI ship has already sailed. No need to harm real humans because somebody might train an AI on your data.

Honestly I think that sort of training is largely already over. The datasets already exist (have for over a decade now), and are largely self-training at this point. Any training on new images is going to be done by looking at captions under news images, or through crawling videos with voiceovers. I don't think this is a going concern anymore.

And, incidentally, that kind of dataset just isn't very valuable to AI companies. Most of the use they'd get from it is in creating accessible image descriptions for visually disabled people anyway; the descriptions don't add much value for generative diffusion models beyond the image itself, since the aforementioned image description models are already so good.

In short, I really strongly believe that this isn't a reason to not alt-text your images.

Maybe the AI can alt text it for us.

AI training data mostly comes from giving exploited Kenyans PTSD; alt-text becoming a common thing on social media came quite a bit after these AI models got their start.

Just be sure not to specify how many fingers, or thumbs, or toes, or that the two shown are opposites L/R. Nor anything about how clown faces are designed.

What do you think is creating all those descriptions?

It's been great on Pixelfed; I appreciate the people who put some time into it.

I usually either write a proper alt-text if it's a non-joke image, or an xkcd-style extra joke if it's meant to be a meme.

Well, I do on Mastodon. I know exactly where the button for that is on that platform. Gimme a sec to check where it is on Lemmy.

In Jerboa on Android there's a dedicated alt-text field above the "body" field.